A member of the OpenAI team has indicated that the large language models of today may soon become notably smaller.

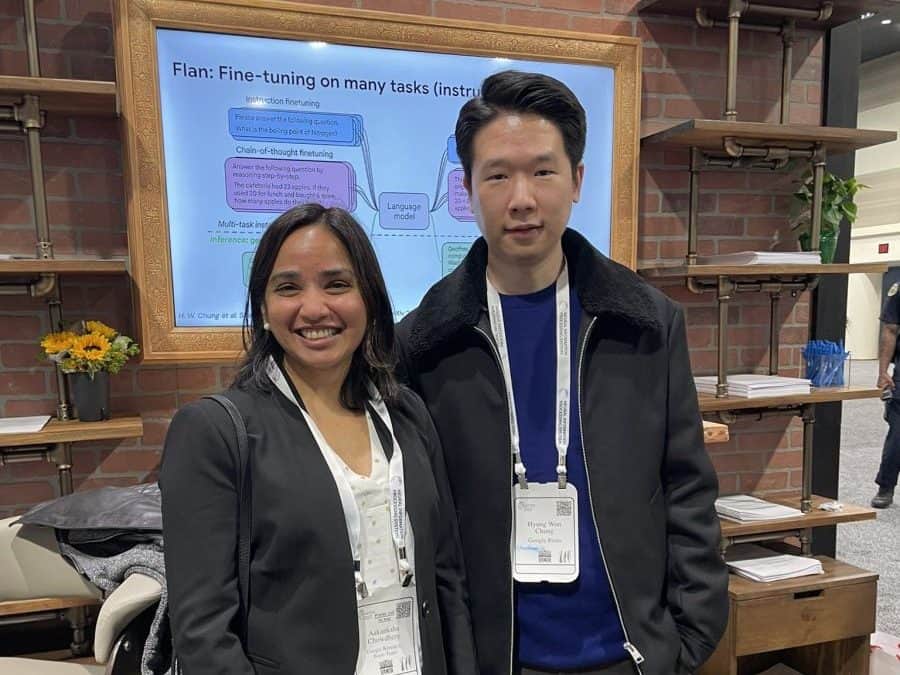

In a 45-minute talk, AI researcher Hyung Won Chung, who previously authored the influential Google paper 'Scaling Instruction-Finetuned Language Models,' surveyed the state of large language models in 2023. His work underlines how these models can be fine-tuned to follow instructions more closely.

Chung describes the landscape of large language models as exceptionally fluid. The guiding concepts in this field keep shifting, unlike traditional fields where foundational principles often remain fixed, so what is deemed impossible today might become achievable tomorrow. He stresses the importance of qualifying claims about LLM capabilities with a clause like 'for now,' because a model may still be capable of a task even if it has not yet demonstrated that ability. The expansive models we use today are expected to give way to smaller versions within just a few years.

A key takeaway from Chung's presentation is the need for thorough documentation and reproducibility in research.

Chung's talk also covers data and model parallelism. This section is useful for anyone who wants to explore the technical side of AI, since understanding these parallelism techniques is important for optimizing performance.

Hyung Won Chung, OpenAI

Chung argues that the maximum likelihood objective currently used to pre-train large language models is a significant bottleneck to truly large-scale capabilities, such as scaling to 10,000 times what GPT-4 can handle. As machine learning evolves, relying on manually designed loss functions may become increasingly constraining. He envisions a next phase of AI development in which these functions are learned by separate algorithms rather than designed by hand. Although still in its early stages, this approach could unlock scalability beyond current limits. He also points to ongoing work such as Reinforcement Learning from Human Feedback (RLHF) with reward modeling as progress in this direction, while acknowledging that challenges remain.
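For reference, the maximum likelihood objective mentioned above is conventionally written as a next-token negative log-likelihood. The formula below is the standard textbook form, not one taken from Chung's slides, and the symbols (model parameters $\theta$, tokens $x_t$, sequence length $T$) are notation assumed here for illustration.

```latex
\mathcal{L}_{\mathrm{MLE}}(\theta) = -\sum_{t=1}^{T} \log p_\theta\left(x_t \mid x_1, \dots, x_{t-1}\right)
```

In this form the loss is fixed by hand before training begins. The alternative Chung describes would replace such a fixed expression with a function that is itself learned.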


Damir is the team leader, product manager, and editor at Metaverse Post, where he covers topics including AI, machine learning, AGI, large language models, the Metaverse, and Web3. His articles reach more than a million readers every month. With a decade of experience in SEO and digital marketing, he has been featured in publications such as Mashable, Wired, and Cointelegraph. He travels as a digital nomad among the UAE, Turkey, Russia, and the CIS, and credits his physics degree with giving him the critical thinking skills needed to navigate the fast-changing world of the internet.
