Tracing the Path of Chatbots: From T9 Technology to the ChatGPT Era

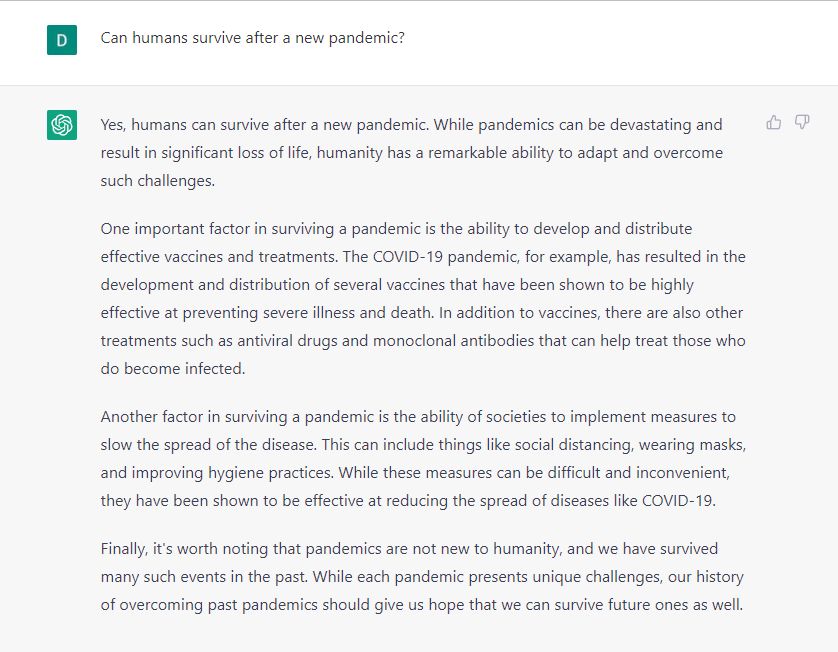

We've recently been inundated with articles highlighting the astonishing achievements of large neural networks and the looming threat they pose to many people's job security. Yet surprisingly few people truly grasp how systems like ChatGPT function behind the scenes.

Take a deep breath and don’t panic about your career prospects just yet. In this article, we’ll break down neural networks into digestible terms, ensuring everyone's on the same page.

But before we dive in, a couple of words: this article combines efforts from various contributors, including insights from a well-respected AI specialist.

Given that there's yet to be a comprehensive resource that demystifies ChatGPT using straightforward language, we took it upon ourselves to fill that gap. Our aim is to present this topic in the clearest way possible, helping readers walk away with a basic grasp of how language-based neural networks operate. We’ll take a closer look at their development, their current abilities, and why ChatGPT's surge in popularity surprised even its creators.

Let’s start with the essentials. To truly understand ChatGPT from a technical perspective, it’s crucial to clarify what it isn't. It’s not a sentient entity like Jarvis from Marvel Comics; it doesn’t possess human reasoning. Get ready for a surprise: ChatGPT is, in essence, a turbocharged version of your phone's T9 predictive text feature! That's right: scientists categorize both as “language models.” All these neural networks fundamentally do is predict which word should follow next.

Initially, T9 technology was all about speeding up typing on a push-button phone by guessing the word currently being entered rather than the word to come. However, as technology progressed, particularly with the advent of smartphones in the early 2010s, it learned to account for context, recognize preceding words, include punctuation, and even suggest a range of words that could logically follow. It's this evolved, more sophisticated version of T9, closer to today's 'autocorrect' features, that we're referring to.

Consequently, both T9 on smartphone interfaces and ChatGPT have been trained to tackle an incredibly simple challenge: forecasting the next word. This procedure is termed 'language modeling'—making predictions based on the text that already exists. Language models rely on statistical probabilities regarding which words are likely to occur next, since we'd all find it quite frustrating if our phone’s predictive text just threw out random terms without any logical connection.
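To make "statistical probabilities regarding which words are likely to occur next" concrete, here is a minimal sketch of the simplest possible language model: it counts which word follows which in a tiny, made-up corpus. The function names and the corpus are illustrative assumptions; this is not how T9 or ChatGPT is actually implemented.

```python
from collections import Counter, defaultdict


def train_bigram_model(corpus):
    """Count, for every word, how often each other word follows it."""
    follow_counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current_word, next_word in zip(words, words[1:]):
            follow_counts[current_word][next_word] += 1
    return follow_counts


def next_word_probabilities(model, word):
    """Turn the raw follow-up counts for `word` into probabilities."""
    counts = model.get(word.lower(), Counter())
    total = sum(counts.values())
    if total == 0:
        return {}  # the word never appeared with a follower in the corpus
    return {candidate: count / total for candidate, count in counts.items()}


# Tiny made-up corpus, purely for illustration.
toy_corpus = [
    "i plan to sleep",
    "i plan to sleep early",
    "i plan to sleep in",
    "we plan to watch a show",
]

model = train_bigram_model(toy_corpus)
probabilities = next_word_probabilities(model, "to")
print(probabilities)  # {'sleep': 0.75, 'watch': 0.25}
```

After "to", this toy model considers "sleep" three times as likely as "watch", simply because that is what its training text contained. A word it never saw, such as "mars", gets no suggestions at all.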

For instance, imagine receiving a message asking about your evening plans: 'What are you up to tonight?' You start responding with 'I plan to...', and here's where T9 jumps in. It might suggest something illogical like 'I plan to fly to Mars,' which is a far cry from sophisticated language modeling. Advanced smartphone auto-complete systems tend to recommend far more sensible terms. How does T9 manage to determine which words are more probable to follow the text you've already typed, and which do not make sense at all? To answer this, we first need to understand the basic principles governing these elementary What drives our quest to identify the 'right' words to fit a specific text?

GPT-2: Entering the era of expansive language models

GPT-3.5 (InstructGPT): Engineered for safety and moderation neural networks .

- How AI models predict the next word

- ChatGPT API Launch: A New Gateway for Developers

- GPT-1: Blowing up the industry

- Let’s take a more straightforward approach. How do you figure out the interrelation of one thing with another? Picture attempting to teach a computer to forecast a person's weight based on their height—what's the first step? You’d need to determine your focal points, gather relevant data, and explore the links among various factors to establish key relationships.

- GPT-3: Smart as Hell

- In simpler terms, both T9 and ChatGPT operate through a series of meticulously chosen algorithms designed to predict

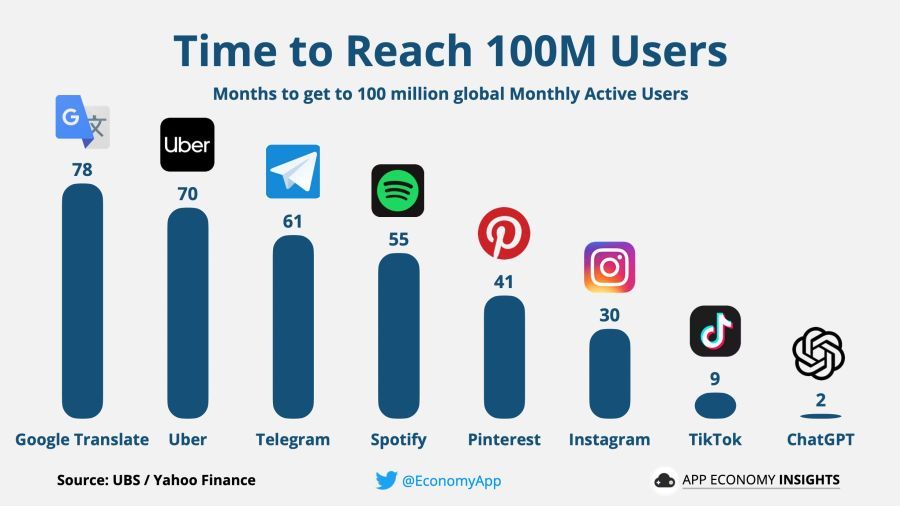

- ChatGPT: A Massive Surge of Hype

How AI models predict the next word

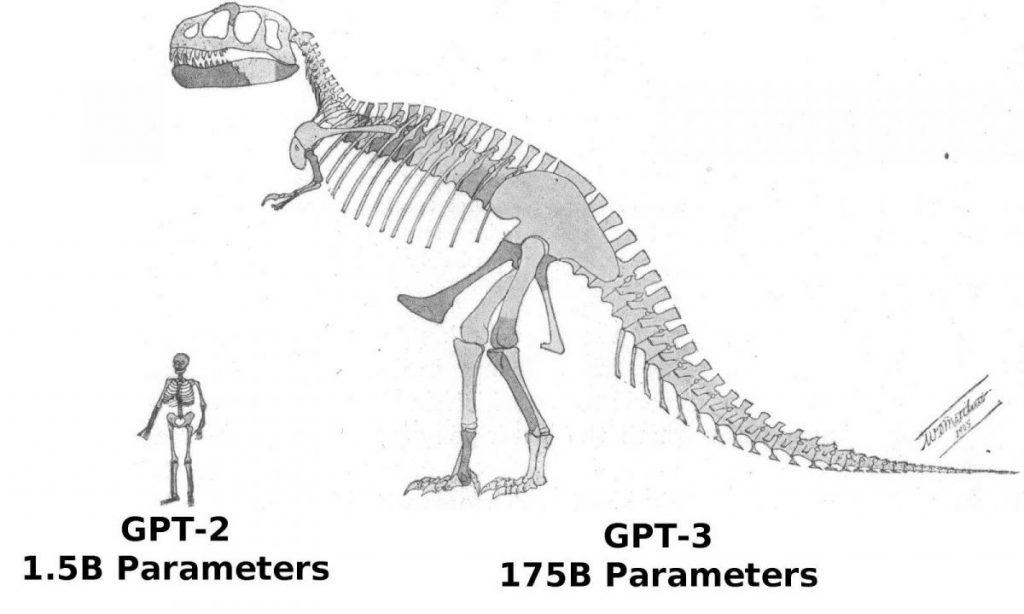

we refer to these as large language models, or LLMs. As we’ll uncover later, a more complex model with numerous parameters is critical for producing coherent text. “train” some mathematical model to look for patterns within this data.
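The height-and-weight example can be sketched in a few lines. Below, "training" means finding the two coefficients of a straight line by ordinary least squares. The data points are invented for illustration, and a real language model has vastly more coefficients than two, so treat this only as a sketch of the idea.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Invented measurements (height in cm, weight in kg), for illustration only.
heights_cm = [150, 160, 170, 180, 190]
weights_kg = [50, 58, 66, 74, 82]

slope, intercept = fit_line(heights_cm, weights_kg)

# Use the "trained" coefficients to predict the weight of a 175 cm person.
predicted_weight = slope * 175 + intercept
print(round(slope, 3), round(intercept, 3), round(predicted_weight, 3))
```

The pattern the model "found" is nothing more than the pair of numbers `slope` and `intercept`. Training a language model follows the same logic, only with words as inputs and outputs and far more parameters to tune.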

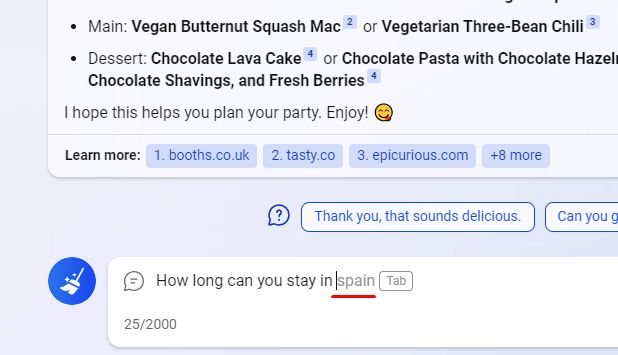

Now, if you're curious why we keep stressing 'next word' prediction when ChatGPT can generate entire paragraphs, the answer is straightforward. Language models can indeed write long texts with ease, but they do so one word at a time. Each time a new word is generated, the model runs again on the entire text so far, including that word, to predict the next one, and it continues until the complete response is formed.

Language models calculate the probabilities of the various words that could come next in a given text. But why is this necessary, and why not simply search for the single 'most accurate' word? Let's play a little game to illustrate how this mechanism works.

Here are the rules: I challenge you to complete the sentence 'The 44th President of the United States, who made history as the first African American in this role, is Barack...'. What would the next word be? How likely is it to be correct?

What prompts our continuous search for the correct words in a given context?


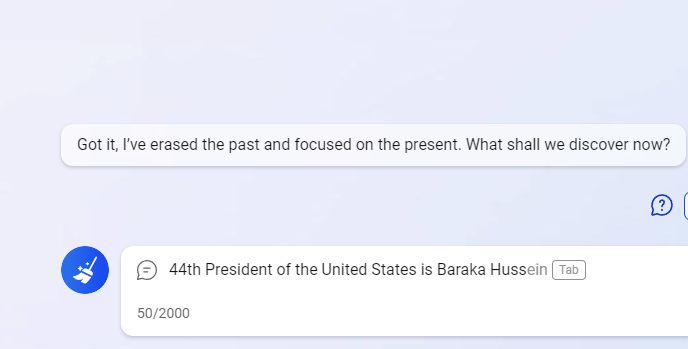

We’ve reached a captivating point regarding language models: they possess an element of creativity! When generating each subsequent word, these models might select them in a seemingly random manner, akin to rolling dice. The likelihood of various words appearing corresponds closely to the probabilities dictated by the internal equations of the model, which have been informed by a vast collection of textual inputs.

Interestingly, a model can give different replies to identical queries, much as a human would. Researchers initially tried to force neural networks to always pick the 'most likely' next word. That sounds logical, but in practice it tends to backfire. A touch of randomness turns out to be beneficial, increasing the variety and overall quality of the generated responses.
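The difference between always taking the most likely word and "rolling dice" can be sketched as follows. The probability table is a made-up stand-in for what a model might output, and the function names are illustrative assumptions rather than any real library's API.

```python
import random


def pick_most_likely(probabilities):
    """Greedy choice: always return the single most probable word."""
    return max(probabilities, key=probabilities.get)


def sample_next_word(probabilities, rng):
    """Random choice in which each word's chance equals its probability."""
    words = list(probabilities)
    weights = [probabilities[word] for word in words]
    return rng.choices(words, weights=weights, k=1)[0]


# Hypothetical model output for the text "I plan to ..."
next_word_probs = {"sleep": 0.6, "read": 0.3, "dance": 0.1}

rng = random.Random(0)  # fixed seed so the sketch is repeatable

greedy_choices = [pick_most_likely(next_word_probs) for _ in range(5)]
sampled_choices = [sample_next_word(next_word_probs, rng) for _ in range(1000)]

print(greedy_choices)  # ['sleep', 'sleep', 'sleep', 'sleep', 'sleep']
print({word: sampled_choices.count(word) for word in next_word_probs})
```

The greedy picker gives the same answer every time, while the sampler mostly says "sleep" but occasionally surprises with "read" or "dance". That occasional surprise is the source of the variety described above.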


The structure of our language is intricate, governed by specific rules and notable exceptions. There’s a certain logic to the arrangement of words within a sentence—they don’t just appear haphazardly. Each of us subconsciously absorbs the linguistic rules during our formative years.

A robust model should be aware of the rich expressiveness of language. Its ability to produce the desired results depends on how accurately it calculates word probabilities while taking into account the subtleties of the context (the preceding text).

Conclusion: basic language models, which are sets of equations trained on vast amounts of data to predict the next word, have formed the backbone of the 'T9/Autofill' features in smartphones since the early 2010s.

Let's shift our focus from T9 models for a moment. While you're likely reading this to learn about ChatGPT, we first need to look at the origins of the GPT model family.

GPT stands for 'generative pre-trained transformer.'

GPT-1: Blowing up the industry

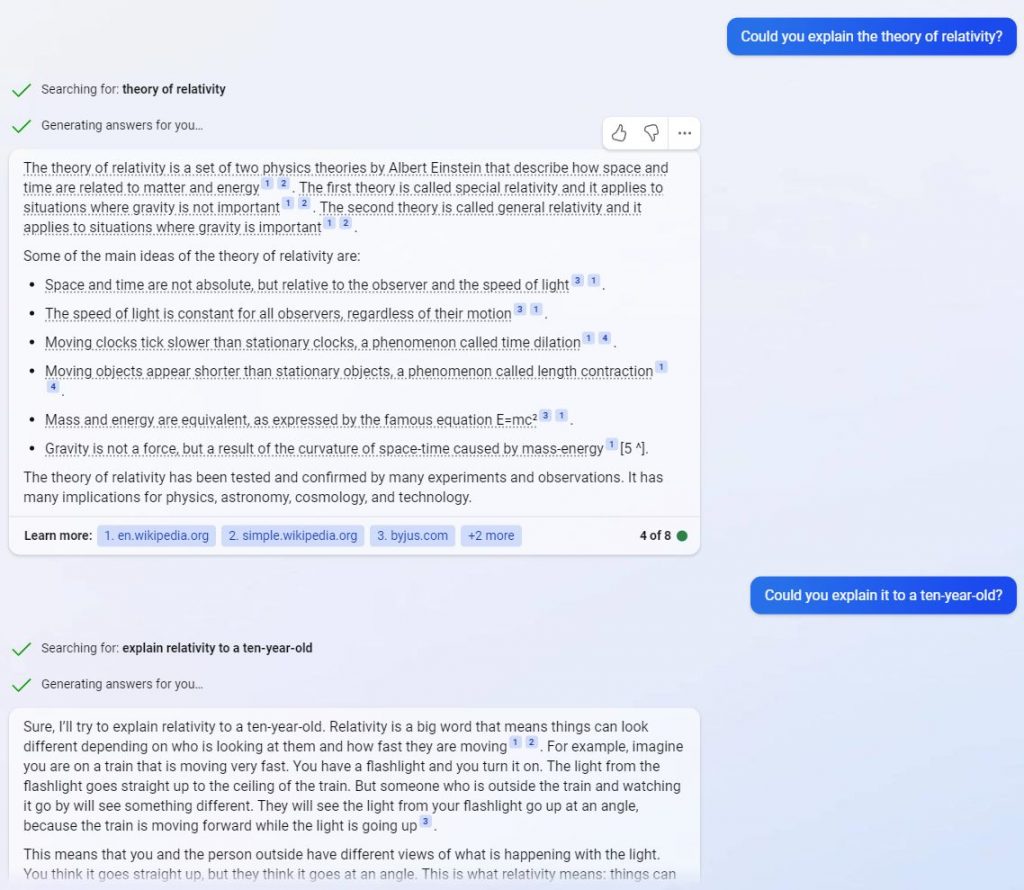

The revolutionary nature of the Transformer becomes abundantly clear when we observe how widely it has been adopted across artificial intelligence (AI), whether in translation, image analysis, sound processing, or video. AI underwent a dramatic shift, moving from a phase of 'AI stagnation' to unprecedented growth and development.

One of the key advantages of the Transformer architecture is that its modules scale easily. Older models tended to slow down when asked to process large volumes of text; Transformer neural networks handle this far better.


Published on: March 09, 2023 at 4:00 PM, Updated: March 09, 2023 at 4:50 PM



Given that there's been no comprehensive guide explaining how ChatGPT functions in layman's terms, we took it upon ourselves to fill that gap. Our goal is to keep this article as straightforward as possible to ensure readers walk away with a solid basic understanding of language neural networks. We will investigate how these networks operate, how they've evolved to acquire their current features, and the reasons why ChatGPT's surge in popularity even astonished its developers.

Neural networks essentially make educated guesses about which word should follow in a sentence.

The original T9 system simply made typing faster by guessing the word currently being entered instead of anticipating the next one. Yet, as technology advanced and smartphones emerged in the early 2010s, it began to analyze context, include punctuation, and offer a variety of predictions for the next word. It is this much-improved iteration of T9, close to modern autocorrect features, that our analogy refers to.

Thus, both the T9 feature on your phone and ChatGPT have been educated to tackle a remarkably straightforward task: predicting forthcoming words. This process is termed 'language modeling,' wherein a decision is made regarding the next word based on preceding text. Language models work on the probabilities of various words to inform these predictions. After all, it would be annoying if your phone's autocomplete feature offered random words with equal likelihood.

So, what mechanisms allow T9 to discern which words are likely to follow the text you've typed and which are completely nonsensical? To understand that, we need to delve into the basic operating principles of the simplest neural networks.


Let's simplify the discussion: how do we pinpoint the relationships among different variables? If we were to teach a computer to estimate someone's weight based on their height, what steps would we take? First, we would identify the relevant factors, then gather data to explore these relationships.

In simple terms, both T9 and ChatGPT are cleverly formulated equations that try to predict a particular word (Y) based on the sequence of preceding words (X) provided as input. When training a model, the main objective is to find coefficients for these inputs that accurately capture some dependence, just as in our height-weight example. By large models, we mean those with numerous parameters. Within the realm of AI, these are known as large language models, or LLMs.


If you’re curious why we keep mentioning 'predicting the next word' while ChatGPT produces whole paragraphs in response, the answer is rather straightforward. While these models can generate lengthy text effortlessly, they do so word by word. After each word is created, the model reevaluates all previous text with the new addition to determine the next word. This cycle continues until the full response is formed.
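The word-by-word cycle described above can be sketched as a simple loop. The lookup table below is a hypothetical stand-in for a trained model, and it looks only at the last word, whereas a real model considers the whole preceding text. The `<end>` marker is also an assumption made for this sketch.

```python
def generate(next_word_table, prompt, max_new_words=10, end_token="<end>"):
    """Extend a non-empty prompt one word at a time until the model stops."""
    words = prompt.split()
    for _ in range(max_new_words):
        # The text so far, including words just produced, is the context.
        # This toy "model" only inspects the final word of that context.
        candidates = next_word_table.get(words[-1], {})
        if not candidates:
            break  # the toy model has nothing to suggest
        next_word = max(candidates, key=candidates.get)
        if next_word == end_token:
            break  # the model signals that the reply is complete
        words.append(next_word)
    return " ".join(words)


# Hypothetical next-word probabilities, invented for illustration.
toy_table = {
    "i": {"plan": 1.0},
    "plan": {"to": 1.0},
    "to": {"sleep": 0.7, "watch": 0.3},
    "sleep": {"early": 0.6, "<end>": 0.4},
    "early": {"<end>": 1.0},
}

reply = generate(toy_table, "i plan")
print(reply)  # i plan to sleep early
```

Each pass through the loop appends exactly one word and then feeds the longer text back in, which is why even a long answer is, at bottom, a chain of single next-word predictions.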


Language models aim to estimate the likelihood of different words appearing in a given text. Why is this necessary? Why can't we just aim for the 'most accurate' word? Let’s play a simple game to demonstrate this concept.

What drives us to seek out the 'correct' words for any text?

If you thought the next word would certainly be 'Obama,' you might be mistaken! The reality is more mundane: many official records use the president's full name. A properly trained language model should therefore predict 'Obama' as the next word with a conditional probability of around 90%, leaving the remaining 10% for 'Hussein' to come first, after which the probability of 'Obama' jumps back up to nearly 100%.
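The arithmetic behind this example can be written out as a short sketch. The 90% and 10% figures come from the text above; treating "nearly 100%" as exactly 1.0 is a simplifying assumption made here.

```python
# Probabilities of the word that follows "... is Barack"
p_obama_next = 0.9    # the name continues directly with "Obama"
p_hussein_next = 0.1  # the middle name "Hussein" comes first

# Once "Hussein" has been written, "Obama" is almost certain to follow.
# The text says "nearly 100%"; rounded to 1.0 here as an assumption.
p_obama_after_hussein = 1.0

# Probability of each complete continuation.
p_short_form = p_obama_next                           # "Barack Obama"
p_full_form = p_hussein_next * p_obama_after_hussein  # "Barack Hussein Obama"

# Either way the sentence ends up naming Obama.
p_ends_with_obama = p_short_form + p_full_form
print(round(p_short_form, 2), round(p_full_form, 2), round(p_ends_with_obama, 2))
```

The model is never "wrong" about who the president is. It simply spreads its probability across the different ways the same sentence can legitimately be written.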

We now touch on an interesting facet of language models: they possess an element of creativity! When selecting each subsequent word, these models operate in a quasi-random manner, as though rolling dice. The likelihood of various words appearing correlates to the probabilities defined by the equations built into the model, derived from a massive pool of varied texts that the model has processed.


Our language is intricately constructed, complete with its own set of guidelines and exceptions. There is logic inherent to the arrangement of words in sentences; they don’t just appear randomly. All of us instinctively absorb the rules of our language during the early stages of our development.

An effective model should take the rich expressiveness of language into account. Its capacity to deliver satisfactory results depends on how accurately it estimates word probabilities given the nuances of the context (the earlier text that sets the scene).


Let’s shift our focus from T9 models. While you’re likely here to delve into the subject, we need first to explore the origins of the GPT model family.

GPT stands for 'Generative Pre-trained Transformer,' and the Transformer is the neural network architecture developed by Google engineers in 2017. It is a versatile computational mechanism that takes a set of sequences (data) as input and outputs the same set of sequences in a different form, altered by some algorithm.


The Transformer's remarkable strength lies within its easily scalable components. When tasked with processing substantial amounts of text simultaneously, older models prior to the Transformer would struggle. Conversely, transformer-based neural networks excel at this challenge.


We’ll study the operational aspects of these systems, how neural networks reached their impressive current state, and why the rapid rise of ChatGPT caught even its developers off guard.

Let’s start with some foundational concepts. To understand ChatGPT on a technical level, it’s crucial to clarify what it isn’t. It’s not akin to Jarvis from Marvel Comics; it doesn’t possess consciousness; it's not some mystical being. To put it bluntly, ChatGPT is like an upgraded version of your phone’s T9 texting feature! Believe it or not, scientists categorize both of these technologies as language models.

Originally, T9 technology was designed to speed up typing on push-button phones by predicting the word you were currently entering, rather than the one you might type next. By the smartphone revolution of the early 2010s, it had evolved to take prior context into account, apply punctuation, and offer a selection of potential next words. This is the improved version of T9, or autocorrect, that our analogy refers to.

To illustrate, imagine you receive a text from a friend that reads: 'What are your plans for the evening?' You might start typing in response: 'I’m going to…', and here’s where T9 makes its entry. It might suggest the absurd 'I’m going to the moon,' not requiring any sophisticated language model. Good smartphone auto-complete suggestions, however, provide much more relevant outcomes.
