StyleDrop: Google’s Cutting-Edge Neural Network for Visual Style Replication

In Brief

StyleDrop is a neural network engineered to replicate and convey any visual aesthetic, capturing its subtleties and specific details.

Google has unveiled StyleDrop, a groundbreaking neural network that can imitate any visual style and transfer it to newly generated images. Built on Muse, Google’s fast text-to-image model, StyleDrop lets users generate images that faithfully reflect a specific aesthetic while preserving its nuances and distinctive features.

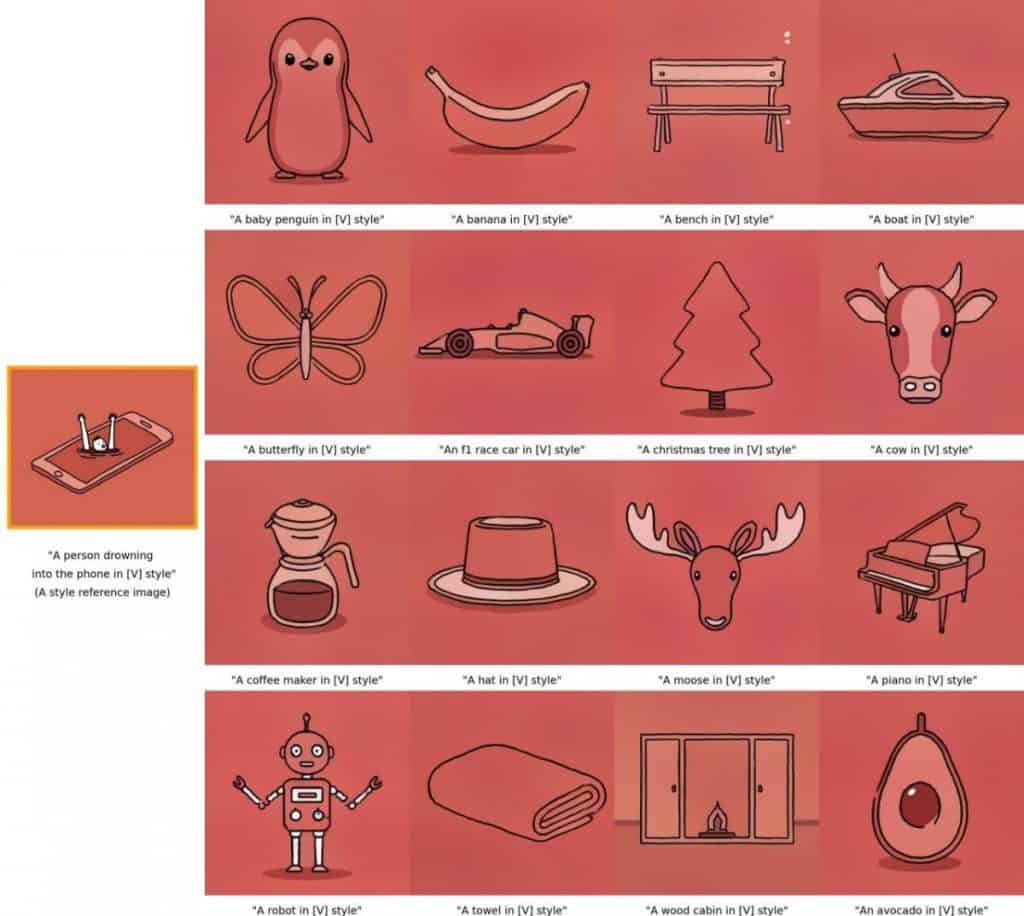

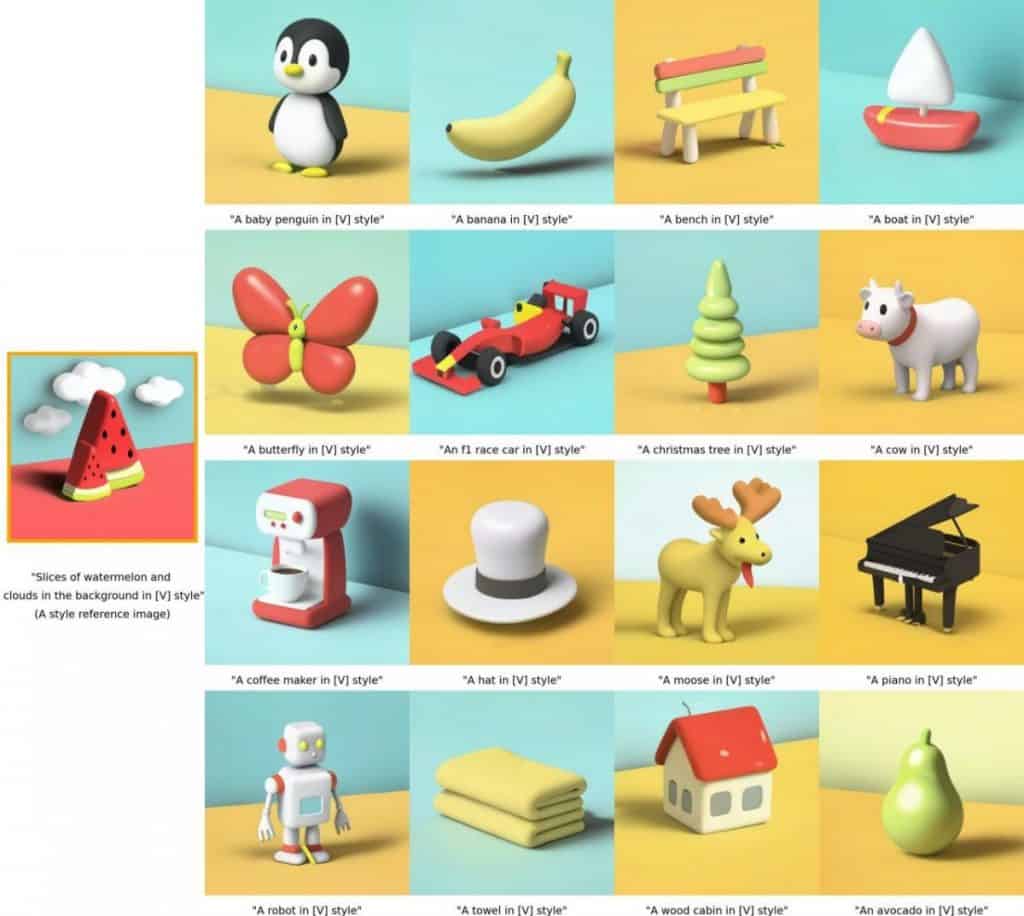

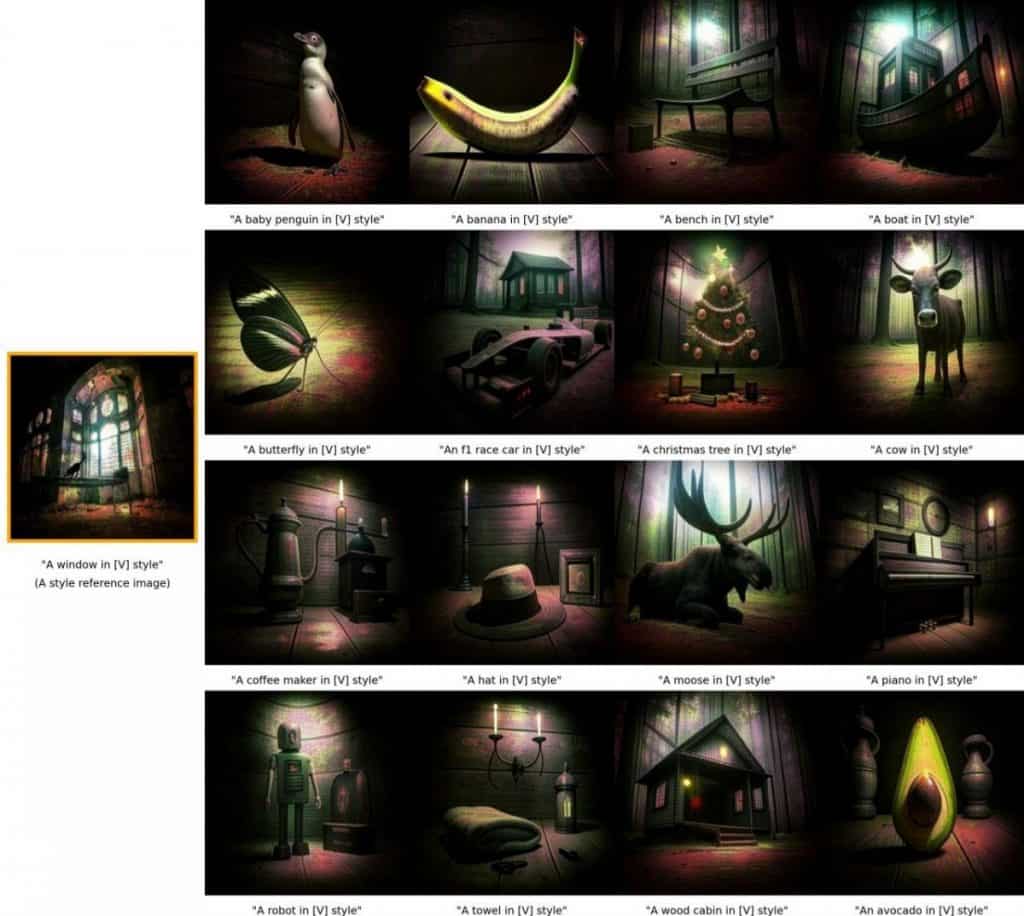

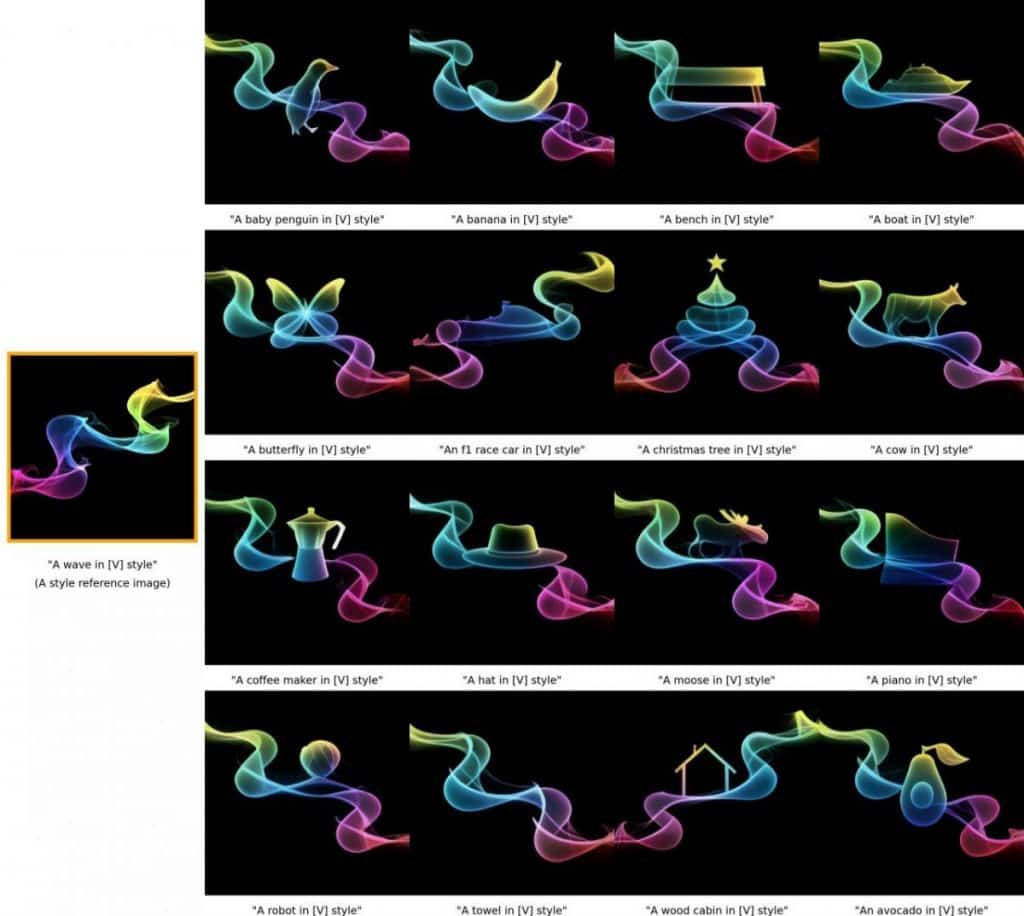

With StyleDrop, users can choose a reference image that embodies the desired style and apply that aesthetic to new images while preserving the distinct characteristics of the selected style. The style carries over even when the new content looks entirely different from the reference. For instance, users could take a child’s simple drawing as inspiration for a stylized logo or character.

StyleDrop builds on Muse’s generative vision transformer. It is trained on the user-provided style image together with images the model generates itself, which are selected using either user feedback or CLIP score. Only a small set of trainable parameters is fine-tuned, accounting for less than 1% of the model’s total parameters. Over repeated rounds of training, StyleDrop steadily improves the quality of the images it produces, reaching impressive results in mere minutes.
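The feedback step can be pictured roughly as follows. This is a minimal sketch, not StyleDrop’s actual code: it assumes each generated image and the text prompt have already been encoded into embedding vectors by some CLIP-like encoder (not shown), computes a CLIP-style score as cosine similarity clipped at zero and scaled by 100 (one common convention), and keeps the top-scoring images as extra training data for the next round. The function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Vector = Sequence[float]
Candidate = Tuple[str, Vector]  # (image name, precomputed image embedding)


def cosine_similarity(a: Vector, b: Vector) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def clip_style_score(image_emb: Vector, prompt_emb: Vector) -> float:
    """Hypothetical CLIP-style score: cosine similarity clipped at 0, scaled by 100.

    Assumption: embeddings come from a CLIP-like encoder run elsewhere.
    """
    return 100.0 * max(cosine_similarity(image_emb, prompt_emb), 0.0)


def select_for_next_round(candidates: List[Candidate], prompt_emb: Vector, k: int) -> List[str]:
    """Return the names of the k generated images with the highest scores."""
    ranked = sorted(
        candidates,
        key=lambda c: clip_style_score(c[1], prompt_emb),
        reverse=True,
    )
    return [name for name, _ in ranked[:k]]


def build_training_set(style_image: str, candidates: List[Candidate],
                       prompt_emb: Vector, k: int) -> List[str]:
    """Next round's training data: the user's style image plus the best generations."""
    return [style_image] + select_for_next_round(candidates, prompt_emb, k)


if __name__ == "__main__":
    # Toy 2-D embeddings standing in for real CLIP features.
    prompt = [1.0, 0.0]
    generated = [
        ("gen_0.png", [1.0, 0.0]),
        ("gen_1.png", [0.6, 0.8]),
        ("gen_2.png", [0.0, 1.0]),
    ]
    print(build_training_set("style.png", generated, prompt, k=2))
```

Replacing `clip_style_score` with a manual pick by the user would correspond to the user-feedback variant of the same loop.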

StyleDrop’s adaptability makes it a valuable resource for brands aiming to define their unique visual language. With this tool, brands can quickly prototype concepts in their chosen style, which makes it useful for creative teams and designers alike.

An extensive evaluation of StyleDrop against other methods for fine-tuning text-to-image models showed that it outperformed alternatives including DreamBooth, Textual Inversion on Imagen, and Stable Diffusion. StyleDrop consistently surpassed these competitors, producing high-fidelity images that closely match the style specified by the user.

User-provided text prompts play a pivotal role in how StyleDrop works. By appending a natural-language style descriptor (such as “a golden melting 3D rendering style” or “an abstract design reminiscent of flowing rainbow smoke”) to the content descriptors during both training and image generation, StyleDrop accurately reproduces the desired aesthetic.

The same mechanism lets brands integrate their own visual identity: by pairing their content descriptors with a style descriptor of their own, they can swiftly prototype ideas that reflect their distinctive aesthetic.

The image generation process with StyleDrop is strikingly efficient, requiring no more than three minutes to complete. This speed allows users to train the neural network, experiment with various styles, and explore a multitude of creative directions rapidly.
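As a rough illustration of this prompt pattern, the sketch below appends one fixed style descriptor to different content descriptors, first for a training caption and then for new generations. The helper name `with_style`, the joining word “in”, and the content descriptors are assumptions; StyleDrop’s exact prompt template may differ.

```python
# The style descriptor is one of the article's examples; the content
# descriptors below are hypothetical.
STYLE = "a golden melting 3D rendering style"


def with_style(content: str, style: str = STYLE) -> str:
    """Append a natural-language style descriptor to a content descriptor."""
    content = content.strip().rstrip(".")
    return f"{content} in {style.strip()}"


# Training: caption the user's reference image with its content plus the style.
training_prompt = with_style("a house")

# Generation: reuse the same style descriptor with new content.
generation_prompts = [with_style(c) for c in ["a dog", "a coffee mug", "the letter A"]]

if __name__ == "__main__":
    print(training_prompt)
    for prompt in generation_prompts:
        print(prompt)
```

Keeping the descriptor identical at training and generation time ties the learned style to a fixed phrase that can then be combined with any content.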

While StyleDrop shows remarkable potential for brand enhancement, the application has not yet been made publicly available. The Google team is working to resolve copyright issues and ensure it meets legal requirements before a smooth and secure launch. By making it easy to replicate any visual style, the tool could help brands and individuals unleash their creativity and develop captivating visual identities in a highly competitive digital arena, giving them a way to craft their own visual narratives with ease and precision.
