Stability AI Unveils SDXL Beta Model

In Brief

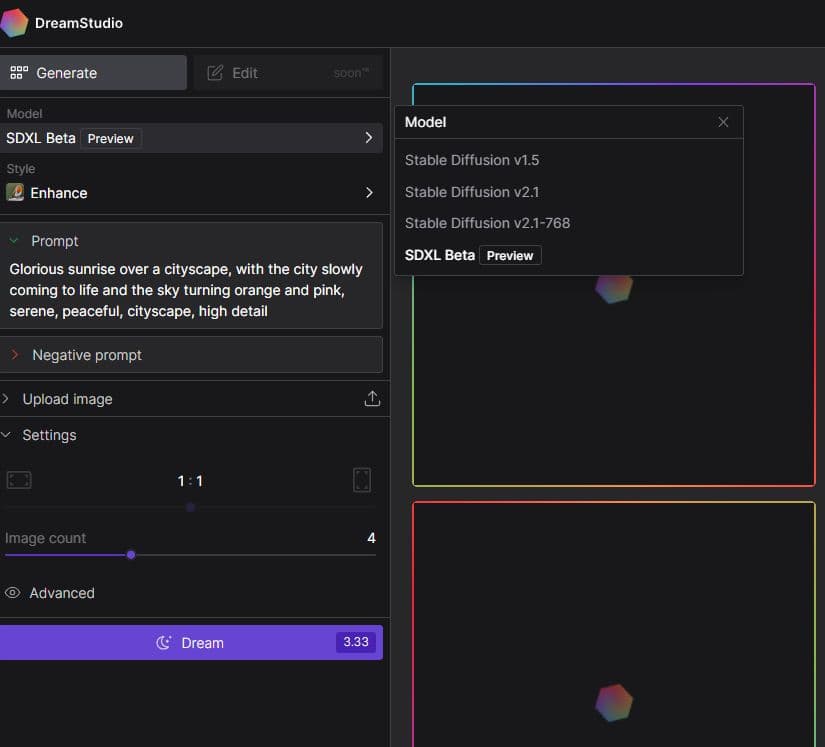

Stability AI has rolled out a new model known as SDXL Beta (Stable Diffusion XL Beta). The model is larger than its predecessors, with more parameters and some yet-to-be-disclosed upgrades. It can be accessed via DreamStudio, the official image generator from Stability AI.

Stability AI has introduced a preview of its SDXL Beta model, short for Stable Diffusion XL Beta. Although specifics are limited at this point, users can already begin testing it. What sets SDXL apart from earlier Stable Diffusion models, and what benefits does it offer? Let's take a closer look.


What exactly is the SDXL model?

Currently, the SDXL model is still being trained. The model is in flux: by the time it officially launches, many features could change, and it might even receive a different name. What is evident is that it is larger, with more parameters and undisclosed improvements. This is a v2 iteration, not v3 (whatever implications that carries). The changes in this v2 setup may improve efficiency, but without more detail it is hard to gauge the extent of the gains. It would also help to know which specific settings have been modified or enhanced in this version.

The SDXL model is available on DreamStudio, the official platform from Stability AI. Simply choose SDXL Beta from the model options to start experimenting.

Improvements

Clearly Readable Text. One of the standout capabilities of SDXL is its ability to generate legible text, something the previous v1 and v2.1 iterations could not do. Although the generated text is not always flawless, as the image below shows, SDXL is a significant improvement over v2.1, not to mention v1. The likely reason is that SDXL uses a larger model that is better at understanding and reproducing language patterns, although Stability AI has not disclosed the details. With further refinement, it could become more precise and dependable.

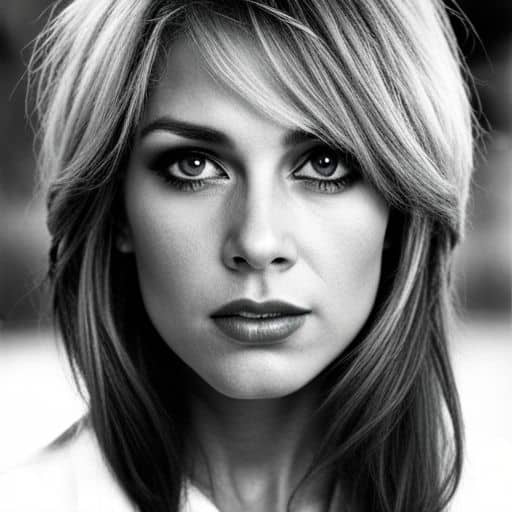

Human Anatomy. Generating anatomically correct human figures has long been a hurdle for AI image models. Characters often appear with extra or missing limbs. Inpainting is typically used to fix these issues, or, more recently, the Open Pose feature of ControlNet can be used to copy a pose from a reference image. We are pleased to report that this is an area where the SDXL Beta model shows significant improvement. That can be valuable in fields such as animation and virtual reality development.

Stable Diffusion: image of a woman in sports attire balancing a ball in her right hand.

Duotone. In the v1.5 model, the term duotone produced only black-and-white output. SDXL Beta instead produces duotone images in a range of colors. Compared with v1 models, it is noticeably better at interpreting a prompt such as "duotone portrait of a young African woman". It also generates images that are more accurate and more relevant to the prompt than the v2 models do, which makes it more reliable at following text descriptions.

SDXL: Old Forest by Christopher Balaskas.

Conclusion

- Stable Diffusion SDXL generates more visually compelling images than both v2.1 and, to a lesser degree, v1.5 models.

- This new model yields images with greater accuracy.

- The necessity for negative prompts has decreased compared to v2.1.

- Human anatomy has improved.

- Several quirks within the model are set to be resolved prior to its launch.

- It can create realistic portraits.



Damir is the team lead, product manager, and editor at Metaverse Post, covering topics such as AI/ML, AGI, LLMs, the Metaverse, and Web3. His articles attract more than one million readers each month. With a decade of experience in SEO and digital marketing, Damir has been featured in Mashable, Wired, Cointelegraph, The New Yorker, Inside.com, Entrepreneur, BeInCrypto, and other publications. As a digital nomad, he travels between the UAE, Turkey, Russia, and the CIS. He holds a bachelor’s degree in physics, which he credits with developing the critical thinking needed in the fast-changing digital landscape.