Phi-1: The Compact Language Model that Outshines GPT-3.5 in Efficient Code Creation

In Brief

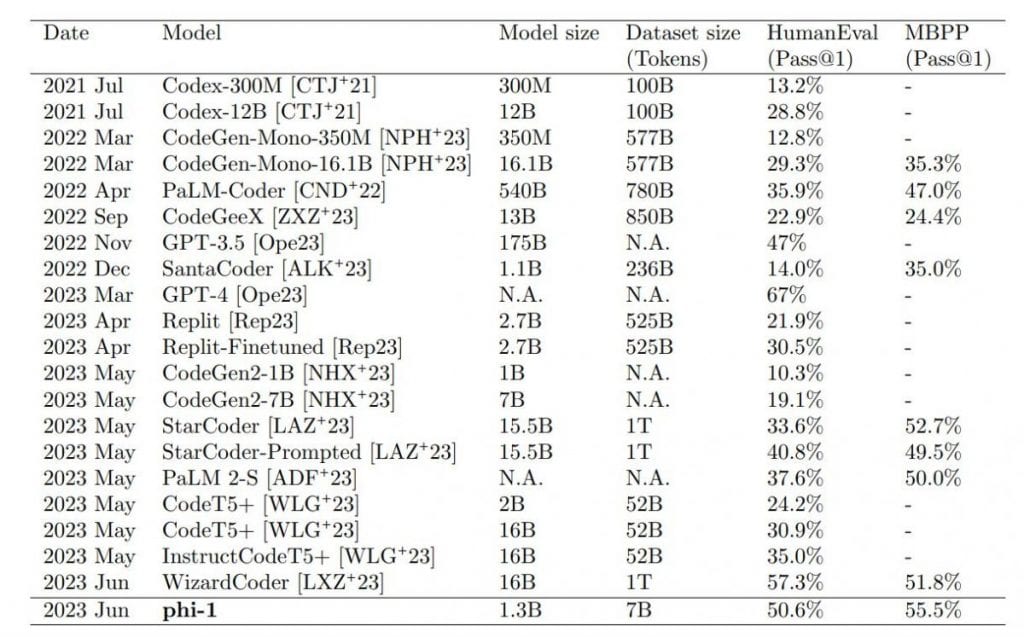

Researchers have developed Phi-1, a compact language model for code generation that uses just 1.3 billion parameters and a comparatively small training dataset.

Despite its small footprint, Phi-1 performs strongly, achieving a pass@1 accuracy of 50.6% on the HumanEval benchmark and 55.5% on MBPP.
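HumanEval-style benchmarks measure functional correctness. The model is shown a function signature and docstring, and its completion counts as correct only if it passes the problem's unit tests. The sketch below illustrates that scoring idea. The problem (`running_max`), the completion, and the helper `passes` are all hypothetical and made up for illustration. This is not the official evaluation harness.

```python
# A hypothetical HumanEval-style problem: the model sees only PROMPT
# and must generate the function body.
PROMPT = '''from typing import List


def running_max(numbers: List[int]) -> List[int]:
    """Return a list whose i-th element is max(numbers[0], ..., numbers[i]).

    >>> running_max([1, 3, 2, 5])
    [1, 3, 3, 5]
    """
'''

# A hypothetical model completion (the function body only).
COMPLETION = '''    result, best = [], None
    for x in numbers:
        best = x if best is None else max(best, x)
        result.append(best)
    return result
'''

# Hidden unit tests, written in the check(candidate) style.
TEST = '''
def check(candidate):
    assert candidate([]) == []
    assert candidate([1, 3, 2, 5]) == [1, 3, 3, 5]
    assert candidate([-2, -5]) == [-2, -2]
'''


def passes(prompt: str, completion: str, test: str, entry_point: str) -> bool:
    """Return True if prompt + completion defines a function that passes the tests.

    WARNING: exec() runs arbitrary code. Real harnesses execute completions in a
    sandboxed subprocess with a timeout; this sketch does neither.
    """
    namespace: dict = {}
    try:
        exec(prompt + completion, namespace)  # define the candidate function
        exec(test, namespace)                 # define check()
        namespace["check"](namespace[entry_point])
        return True
    except Exception:                         # failed assertion, syntax error, crash...
        return False


print(passes(PROMPT, COMPLETION, TEST, "running_max"))
```

A wrong completion such as `"    return numbers\n"` fails the second assertion, so `passes` returns `False` for it.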

Phi-1 is a compact yet formidable model engineered specifically for code-generation tasks. Compared with its predecessors, Phi-1 excels at coding and related tasks while using significantly fewer parameters and a smaller training dataset.

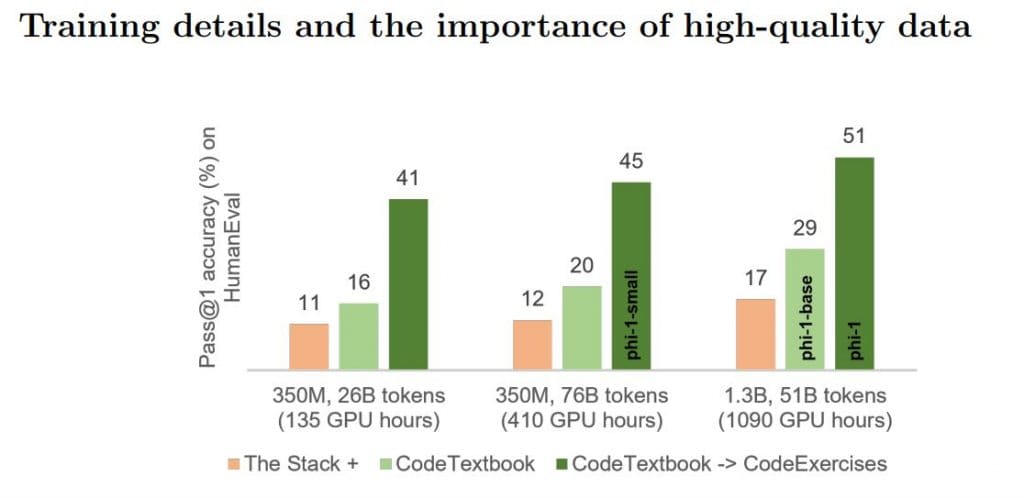

Phi-1 is a Transformer-based model with only 1.3 billion parameters, a small fraction of the size of its competitors. Notably, it was trained in just four days on eight A100 GPUs. Its training data consisted of carefully curated "textbook-quality" material sourced from the internet (6 billion tokens), plus synthetic textbooks and exercises generated with GPT-3.5 (1 billion tokens).
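The training budget quoted above can be put in perspective with a little arithmetic. The sketch below only uses the article's rounded figures. Dataset size is not the same as the number of tokens processed during training, which the article does not state.

```python
# Figures as quoted in the article (rounded)
TRAINING_DAYS = 4
NUM_GPUS = 8            # NVIDIA A100
WEB_TOKENS = 6e9        # filtered "textbook-quality" web data
SYNTHETIC_TOKENS = 1e9  # GPT-3.5-generated textbooks and exercises

gpu_hours = TRAINING_DAYS * 24 * NUM_GPUS        # total GPU-hours of training
total_tokens = WEB_TOKENS + SYNTHETIC_TOKENS     # size of the training corpus
synthetic_share = SYNTHETIC_TOKENS / total_tokens

print(f"GPU-hours: {gpu_hours}")                   # GPU-hours: 768
print(f"Total tokens: {total_tokens / 1e9:.0f}B")  # Total tokens: 7B
print(f"Synthetic share: {synthetic_share:.1%}")   # Synthetic share: 14.3%
```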

Despite its small size, Phi-1 delivers impressive results, with a pass@1 accuracy of 50.6% on HumanEval and 55.5% on MBPP. The researchers also report surprising emergent properties when comparing Phi-1 with Phi-1-base, the model before fine-tuning on a dataset of coding exercises, and with Phi-1-small, a 350-million-parameter model trained with the same pipeline that still reaches 45% on HumanEval.
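pass@1 is the fraction of problems a model solves on its first attempt. When n samples are drawn per problem and c of them pass the tests, the paper that introduced HumanEval uses the unbiased estimator pass@k = 1 - C(n-c, k) / C(n, k). For k = 1 this reduces to c / n. The sketch below implements that estimator. The sample counts in the usage line are made up, and the article does not say how many samples the Phi-1 authors drew per problem.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate for one problem: n samples drawn, c of them correct."""
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("require 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        # Every subset of k samples contains at least one correct sample.
        return 1.0
    # 1 minus the probability that k samples drawn without replacement all fail
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_pass_at_k(results: list[tuple[int, int]], k: int = 1) -> float:
    """Average pass@k over a benchmark; results holds one (n, c) pair per problem."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)


# Hypothetical benchmark of three problems, 10 samples each
print(benchmark_pass_at_k([(10, 3), (10, 10), (10, 0)], k=1))
```

Averaging per-problem estimates, rather than pooling all samples, keeps every problem equally weighted regardless of how many samples it received.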

Phi-1's strong performance is largely attributed to the high quality of its training data. Just as a good textbook helps students grasp complex topics, the researchers prioritized "textbook-quality" data to make the language model learn more efficiently. This strategy produced a model that outperforms most open-source models on coding benchmarks such as HumanEval and MBPP, despite its smaller parameter count and smaller dataset.

Nonetheless, we must recognize certain limitations of Phi-1 compared with larger models. First, it specializes in Python, so it lacks the versatility of multi-language models. Second, it lacks the domain-specific knowledge of larger models, for example when working with specific APIs or less common packages. Finally, because its training data is highly structured and limited in stylistic diversity, Phi-1 is less robust to variations in prompt style or to errors in the prompt.

The researchers acknowledge these constraints and suggest that further work could address them. For example, they recommend generating the synthetic data with GPT-4 instead of GPT-3.5, because GPT-3.5's outputs had a notable error rate. Despite these imperfections, Phi-1's coding ability remains striking, echoing an earlier study in which a language model produced correct answers even after being trained on data with a 100% error rate.

Disclaimer

In line with the Trust Project guidelines, please note that the information on this page is not intended as, and should not be construed as, legal, financial, investment, or any other kind of advice. Only invest what you can afford to lose, and seek independent financial advice if you are in any doubt. For more details, please refer to the terms and conditions or the help and support pages provided by the issuer or advertiser. MetaversePost strives to provide accurate and impartial reporting, but market conditions may change without notice.