OpenFlamingo: An Open-Source Framework for Image-to-Text Models from LAION, Built on Meta AI's LLaMA

In Brief

OpenFlamingo is an open-source reproduction of DeepMind's Flamingo model, built on the LLaMA large language model.

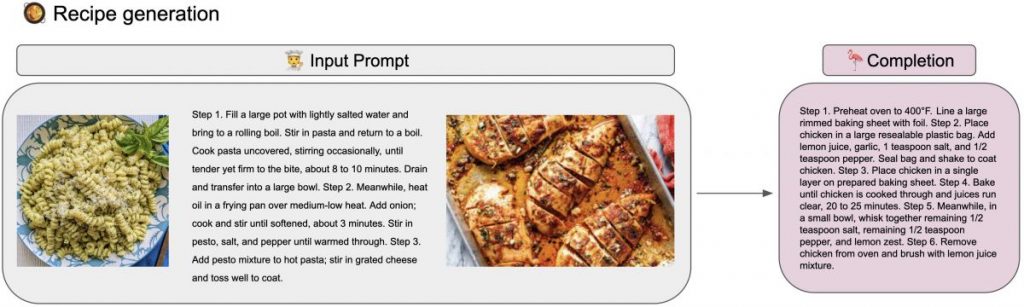

The team's aim is to develop a multimodal framework adept at tackling complex vision-language tasks, aspiring to match the capabilities and versatility of GPT-4 in processing both visual and textual information.

The newly launched OpenFlamingo framework is essentially an open-source take on DeepMind's Flamingo model. Among other components, the release includes:

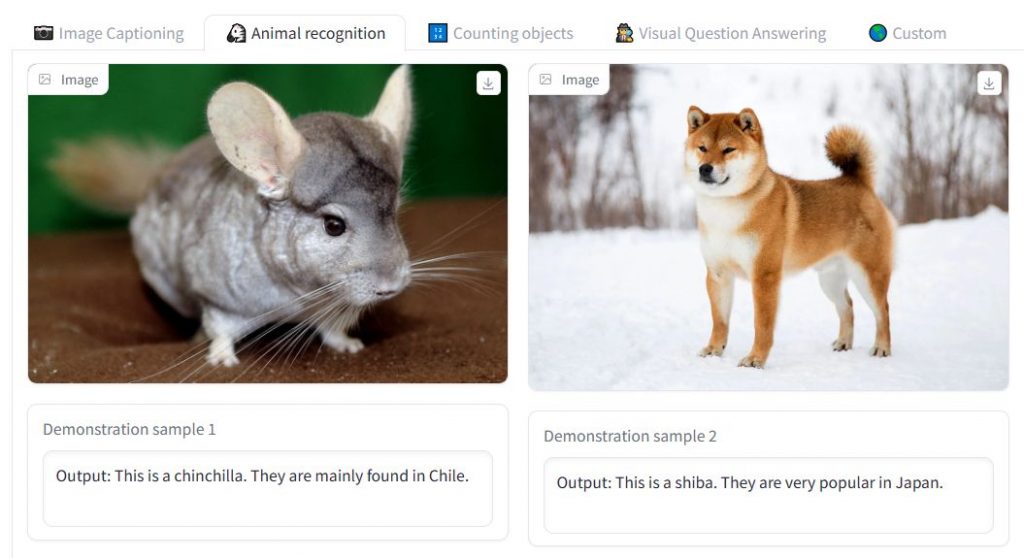

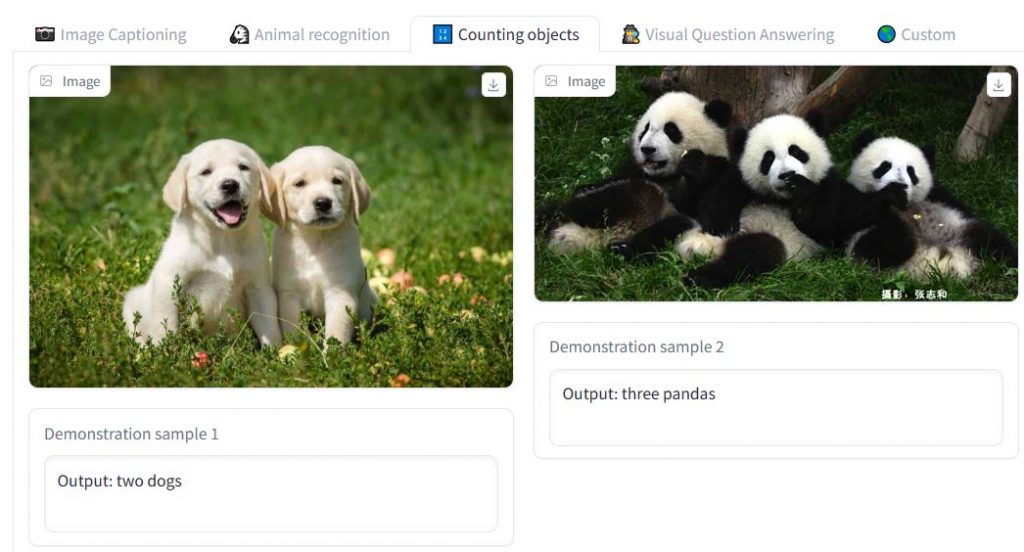

- A standardized evaluation benchmark for in-context learning on vision-language tasks.

- An early version of the LLaMA-based OpenFlamingo-9B model.

With OpenFlamingo, the goal is to build a versatile multimodal system that can tackle a broad range of vision-language tasks, ultimately matching the performance and flexibility of GPT-4 on visual and textual inputs. The developers are building an open-source version of DeepMind's Flamingo model, which is designed to understand and reason about images, videos, and text. Their commitment to open-source development reflects the view that transparency fosters collaboration, speeds up innovation, and makes advanced large multimodal models (LMMs) widely accessible.

The developers have released the initial checkpoint of the OpenFlamingo-9B model. Although it is still in the early stages of optimization, it already shows considerable promise. The developers plan to refine the model's capabilities through community collaboration and feedback, and they invite the public to contribute insights and improvements to the repository.

The architecture of OpenFlamingo closely mirrors that of the original Flamingo. To acquire in-context few-shot learning abilities, Flamingo models must be trained on large web datasets of interleaved text and images. OpenFlamingo adopts the same building blocks proposed in the original Flamingo paper, including Perceiver resamplers and gated cross-attention layers. However, because Flamingo's training datasets are not publicly available, the developers train on openly accessible datasets instead. The newly released OpenFlamingo-9B checkpoint was trained on 10 million samples from LAION-2B and 5 million samples from the newly introduced Multimodal C4 dataset.
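To make the two building blocks named above more concrete, here is a minimal pure-Python sketch, not the OpenFlamingo implementation: a Perceiver-style resampler that turns any number of visual tokens into a fixed number of latents, and a tanh-gated cross-attention step through which text tokens read those latents. It assumes single-head attention with no learned projections, layer norms, feed-forward sublayers, or stacked layers, and it uses random vectors in place of trained weights; all function names are hypothetical.

```python
import math
import random
from typing import List

Matrix = List[List[float]]


def attend(queries: Matrix, keys: Matrix, values: Matrix) -> Matrix:
    """Single-head scaled dot-product attention without learned projections."""
    scale = 1.0 / math.sqrt(len(keys[0]))
    out: Matrix = []
    for q in queries:
        scores = [scale * sum(a * b for a, b in zip(q, k)) for k in keys]
        peak = max(scores)  # subtract the max for a numerically stable softmax
        exps = [math.exp(s - peak) for s in scores]
        total = sum(exps)
        weights = [e / total for e in exps]
        out.append([sum(w * v[j] for w, v in zip(weights, values))
                    for j in range(len(values[0]))])
    return out


def perceiver_resample(latents: Matrix, visual_tokens: Matrix) -> Matrix:
    """Compress any number of visual tokens into len(latents) output tokens.

    As described in the Flamingo paper, the latent queries attend over the
    visual tokens concatenated with the latents themselves; a residual is added.
    """
    context = visual_tokens + latents
    attended = attend(latents, context, context)
    return [[x + a for x, a in zip(row, upd)] for row, upd in zip(latents, attended)]


def gated_cross_attention(text_tokens: Matrix, visual: Matrix, alpha: float) -> Matrix:
    """Text tokens attend to visual tokens; tanh(alpha) scales the update.

    With alpha = 0.0 the block is the identity, so a frozen language model
    initially behaves exactly as it did before the block was inserted.
    """
    gate = math.tanh(alpha)
    attended = attend(text_tokens, visual, visual)
    return [[x + gate * a for x, a in zip(row, upd)] for row, upd in zip(text_tokens, attended)]


def random_matrix(rows: int, cols: int, rng: random.Random) -> Matrix:
    """Random stand-in for learned weights or encoder features."""
    return [[rng.gauss(0.0, 1.0) for _ in range(cols)] for _ in range(rows)]


if __name__ == "__main__":
    rng = random.Random(0)
    dim = 8
    latents = random_matrix(4, dim, rng)  # 4 latents here; Flamingo uses 64
    for n_patches in (10, 50):
        resampled = perceiver_resample(latents, random_matrix(n_patches, dim, rng))
        print(n_patches, "visual tokens ->", len(resampled), "latents")
    text = random_matrix(3, dim, rng)
    print(gated_cross_attention(text, resampled, alpha=0.0) == text)  # True: gate is closed
```

The zero-initialized gate follows the Flamingo paper's design: because tanh(0) = 0, newly inserted cross-attention blocks start out as identity maps, so training begins from the unmodified language model and only gradually lets visual information in.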

The OpenFlamingo-9B checkpoint, which is still under development, pairs LLaMA-7B as its language model with CLIP ViT-L/14 as its vision encoder. Although the model is a work in progress, it already offers significant benefits to the community.
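The arithmetic behind that pairing can be sketched as a small configuration object. The ViT-L/14 and LLaMA-7B sizes are the publicly documented sizes of those models, and the 64 resampler outputs come from the Flamingo paper; inserting a cross-attention block every fourth language-model layer is an assumption for illustration, not a confirmed OpenFlamingo-9B setting. The class name is hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class OpenFlamingo9BSketch:
    """Back-of-the-envelope shapes for a LLaMA-7B + CLIP ViT-L/14 model.

    Encoder and LM sizes are the published sizes of those models;
    cross_attn_every_n_layers is an illustrative assumption.
    """
    image_size: int = 224               # CLIP ViT-L/14 input resolution
    patch_size: int = 14                # the "/14" in ViT-L/14
    vision_width: int = 1024            # ViT-L hidden size
    lm_layers: int = 32                 # LLaMA-7B decoder layers
    lm_width: int = 4096                # LLaMA-7B hidden size
    num_latents: int = 64               # Flamingo's Perceiver resampler outputs
    cross_attn_every_n_layers: int = 4  # assumption: one block per 4 LM layers

    @property
    def patches_per_image(self) -> int:
        side = self.image_size // self.patch_size
        return side * side

    def cross_attn_layers(self) -> List[int]:
        """0-based LM layers paired with a gated cross-attention block."""
        n = self.cross_attn_every_n_layers
        return [i for i in range(self.lm_layers) if (i + 1) % n == 0]


cfg = OpenFlamingo9BSketch()
print(cfg.patches_per_image)         # 256 patch tokens per image (16 x 16)
print(cfg.num_latents)               # 64 visual tokens after resampling
print(len(cfg.cross_attn_layers()))  # 8 cross-attention blocks for 32 layers
```

Whatever the patch count, the resampler hands the language model the same fixed number of visual tokens per image, which keeps the cost of the cross-attention layers predictable.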



To get started, see the project's GitHub repository and demo.
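Using the model for in-context learning means writing prompts that interleave images and text. The following sketch builds a few-shot captioning prompt and, for each token, records which image it would cross-attend to under Flamingo's rule that a text token attends only to the most recent preceding image. The `<image>` and `<|endofchunk|>` markers are assumed from the project's README examples and should be checked against the repository; the helper functions and the regex tokenizer are hypothetical stand-ins for the real LLaMA tokenizer and OpenFlamingo's preprocessing.

```python
import re
from typing import List, Sequence

# Assumed special markers (as in the OpenFlamingo README examples).
IMAGE_TOKEN = "<image>"
END_OF_CHUNK = "<|endofchunk|>"

# Toy tokenizer: special markers, words, or single punctuation characters.
# A real model would use the LLaMA tokenizer instead.
_TOKEN_RE = re.compile(rf"{re.escape(IMAGE_TOKEN)}|{re.escape(END_OF_CHUNK)}|\w+|[^\w\s]")


def build_few_shot_prompt(captions: Sequence[str], query_prefix: str) -> str:
    """One image slot per example caption, then one slot for the query image."""
    shots = [f"{IMAGE_TOKEN}{caption}{END_OF_CHUNK}" for caption in captions]
    return "".join(shots) + f"{IMAGE_TOKEN}{query_prefix}"


def tokenize(text: str) -> List[str]:
    return _TOKEN_RE.findall(text)


def media_index_per_token(tokens: Sequence[str]) -> List[int]:
    """Index of the most recent image at or before each token (-1 if none).

    This mirrors Flamingo's rule that a text token cross-attends only to the
    image immediately preceding it.
    """
    current = -1
    indices = []
    for token in tokens:
        if token == IMAGE_TOKEN:
            current += 1
        indices.append(current)
    return indices


prompt = build_few_shot_prompt(
    ["An image of two cats.", "An image of a bathroom sink."], "An image of"
)
tokens = tokenize(prompt)
for token, media in zip(tokens, media_index_per_token(tokens)):
    print(f"{token!r:>18} -> image {media}")
```

In the real model each `<image>` slot is paired with an actual image passed to the vision encoder; here the markers only show how the prompt and the per-token image assignment line up.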
