Introducing the Prompt Engineering Guide with Six Strategies to Maximize GPT-4 Performance

In Brief

OpenAI recently launched a comprehensive prompt engineering guide specifically for GPT-4, detailing effective methods to bolster the performance of large language models.

OpenAI, an artificial intelligence research organization, has published a Prompt Engineering guide aimed at improving the performance of large language models (LLMs). The guide describes strategies and techniques that can be combined for greater effect, gives example prompts, and presents six strategies users can apply to get better results from a model.

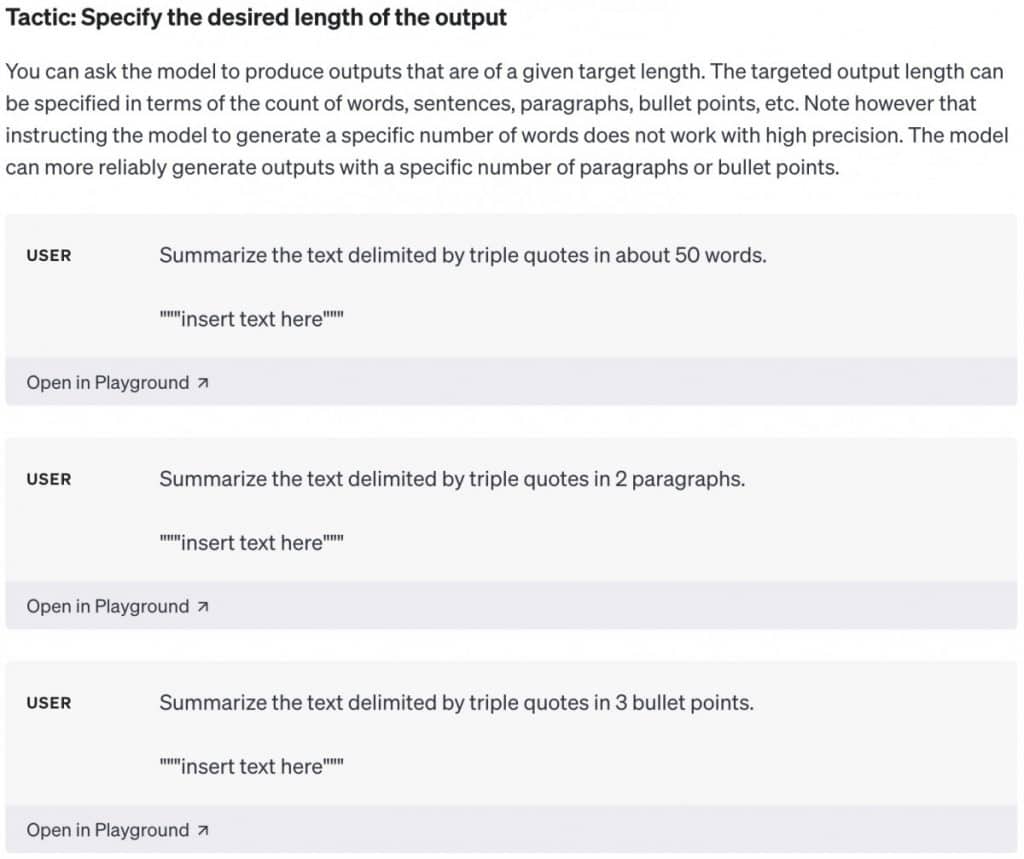

Clear Instructions

Language models cannot guess what a user wants. If outputs are too long, ask for brief replies; if they are too simplistic, ask for expert-level writing. The more clearly users state what they want, the more likely they are to get it.
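As a rough illustration of writing clear instructions, the sketch below states the target audience and length limit explicitly instead of leaving the model to guess. The helper name `build_messages` and its parameters are hypothetical, and the role/content dictionaries only mirror the common chat-message layout; no API is called.

```python
def build_messages(task, audience="expert", max_sentences=3):
    """Build chat-style messages that spell out audience and length requirements."""
    system = (
        f"Write for a reader at the {audience} level. "
        f"Answer in at most {max_sentences} sentences."
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": task},
    ]


# Hypothetical usage: ask for a short, beginner-level explanation.
messages = build_messages(
    "Explain what a context window is.", audience="beginner", max_sentences=2
)
for message in messages:
    print(f"{message['role']}: {message['content']}")
```

Changing `audience` or `max_sentences` changes the request without touching the task text, which makes it easier to see which instruction affected the output.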

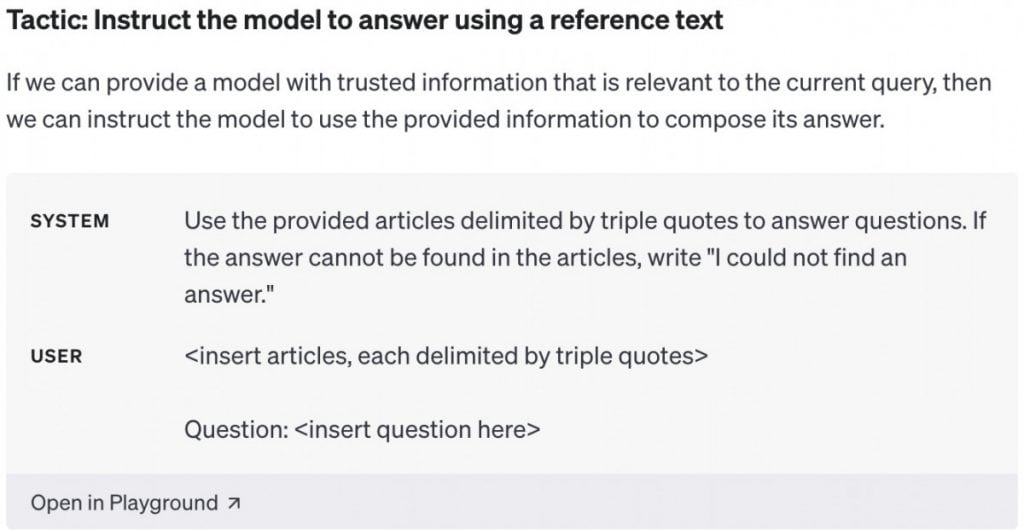

Provide Reference Texts

Language models sometimes produce incorrect information, particularly on niche topics or when asked for citations and links. Supplying reference material can improve the model’s accuracy, much as a sheet of notes helps a student. Users can instruct the model to answer using the supplied reference texts or to cite passages from them directly.
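One way to supply reference text is to place it in the prompt inside clear delimiters and tell the model to answer only from it. The sketch below is a minimal example under that assumption; the function name `build_grounded_prompt`, the numbering scheme, and the fallback phrase are illustrative choices, not something the guide prescribes.

```python
def build_grounded_prompt(question, references):
    """Return a prompt that asks the model to answer only from numbered references."""
    # Number each reference and wrap it in triple quotes so it can be cited by index.
    blocks = [f'[{i}] """{text}"""' for i, text in enumerate(references, start=1)]
    instructions = (
        "Answer the question using only the references below. "
        "Cite the reference number for each claim. "
        'If the answer is not in the references, reply "I could not find an answer."'
    )
    return "\n\n".join([instructions, *blocks, f"Question: {question}"])


# Hypothetical usage with a single made-up reference passage.
prompt = build_grounded_prompt(
    "How many strategies does the guide present?",
    ["The guide presents six strategies for getting better results."],
)
print(prompt)
```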

Simplifying Complex Instructions

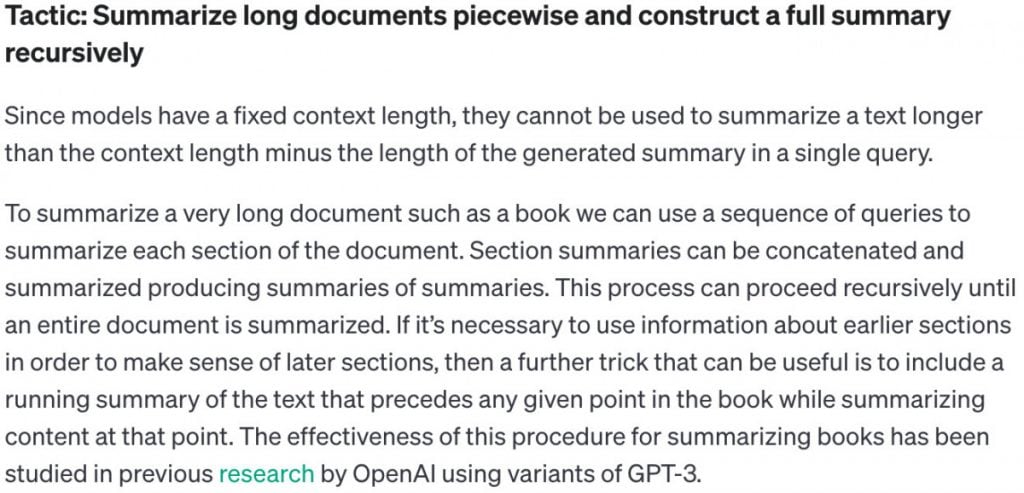

For improved performance, users should break complex tasks into smaller, manageable subtasks. Complex tasks tend to have higher error rates than simple ones. They can often be redefined as a workflow of simpler tasks, in which the outputs of earlier steps are used to build the inputs to later ones.
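The workflow idea above can be sketched as a small chain in which each step's output becomes the next step's input. Everything here is an assumption for illustration: `run_chain` is a hypothetical helper, `complete` stands for any function that sends a prompt to a model and returns its reply, and `fake_complete` is a stand-in so the example runs offline.

```python
def run_chain(steps, first_input, complete):
    """Run prompt templates in order, feeding each reply into the next template."""
    result = first_input
    for template in steps:
        # Each template has an {input} slot filled with the previous step's output.
        result = complete(template.format(input=result))
    return result


def fake_complete(prompt):
    # Stand-in for a real model call: echoes the instruction line of the prompt.
    return f"<reply to: {prompt.splitlines()[0]}>"


steps = [
    "Summarize the following text in two sentences:\n{input}",
    "List the key claims in this summary, one per line:\n{input}",
    "Write a headline based on these claims:\n{input}",
]
print(run_chain(steps, "Some long article text.", fake_complete))
```

Keeping each step as its own prompt also makes it possible to inspect or test the intermediate outputs separately.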

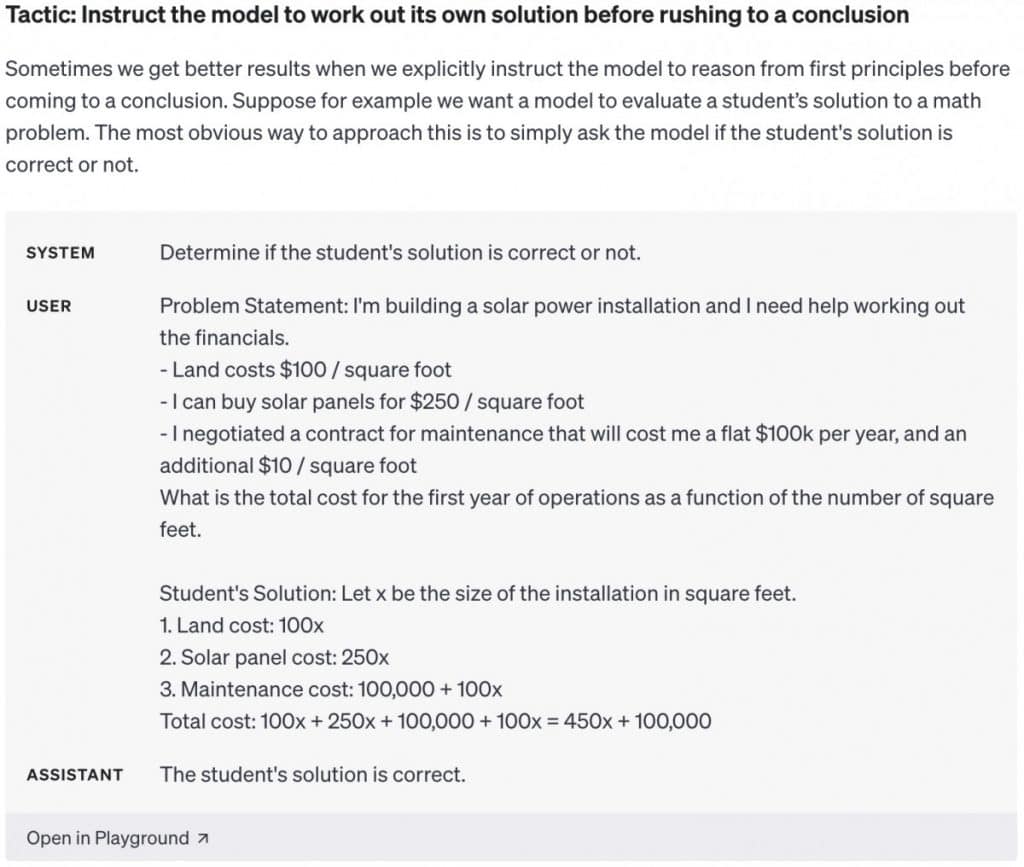

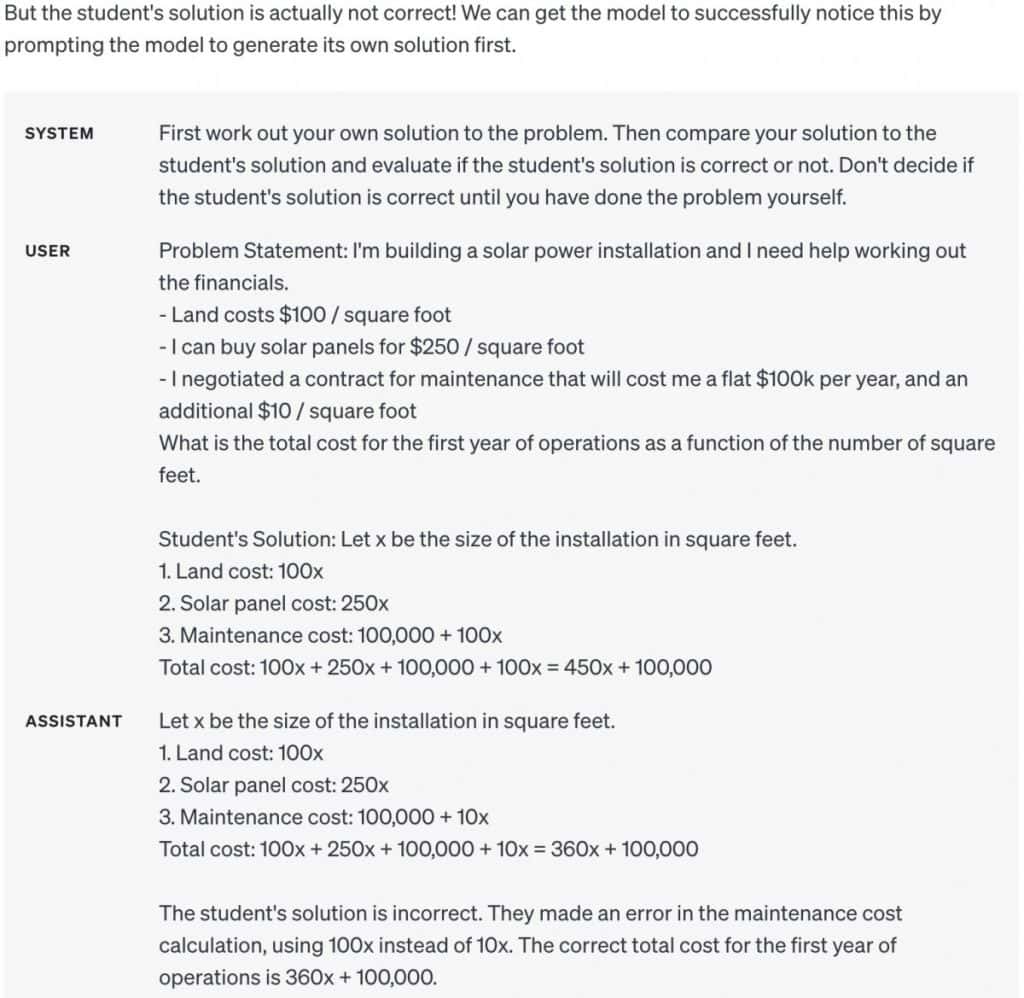

The Model Needs Time to Analyze

LLMs make more reasoning errors when they try to answer right away. Asking the model to lay out its “chain of thought” before giving an answer can lead to more dependable and precise responses.
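A minimal sketch of this idea: append an instruction asking the model to reason first and to end with a clearly marked final line, then pull that line out of the reply. The `Answer:` marker, the constant `COT_SUFFIX`, and both helper names are assumptions made for this example.

```python
import re

COT_SUFFIX = (
    "\n\nWork through the problem step by step before answering. "
    "Finish with a line of the form 'Answer: <final answer>'."
)


def with_reasoning(question):
    """Append an instruction asking for step-by-step reasoning before the answer."""
    return question + COT_SUFFIX


def extract_answer(reply):
    """Return the text of the last 'Answer:' line in a reply, or None if absent."""
    matches = re.findall(r"^Answer:\s*(.+)$", reply, flags=re.MULTILINE)
    return matches[-1].strip() if matches else None


# Hypothetical reply text, written by hand to show the parsing step.
sample_reply = "17 * 3 = 51\n51 + 4 = 55\nAnswer: 55"
print(with_reasoning("What is 17 * 3 + 4?"))
print(extract_answer(sample_reply))
```

Separating the reasoning from the marked final line lets an application show only the answer while still giving the model room to work.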

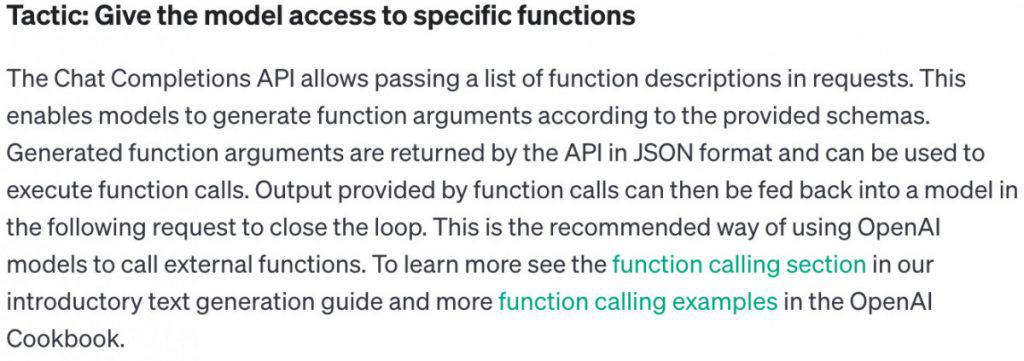

Employing External Tools is Beneficial

To compensate for some of the model’s limitations, users can feed it the outputs of other tools. A code execution tool such as OpenAI’s Code Interpreter can be invaluable for tasks like running code or performing calculations. If a task can be done more reliably by another tool, delegate it to that tool for better results.
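To illustrate delegating work to a tool, the sketch below computes an arithmetic expression locally and passes the result to the model in the prompt, rather than asking the model to do the arithmetic. This is not Code Interpreter; `calculate` and `prompt_with_tool_result` are hypothetical helpers, and the evaluator deliberately supports only basic arithmetic.

```python
import ast
import operator

# Only these binary operators are allowed; anything else is rejected.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}


def calculate(expression):
    """Evaluate a basic arithmetic expression without using eval()."""
    def ev(node):
        if isinstance(node, ast.Constant) and type(node.value) in (int, float):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](ev(node.left), ev(node.right))
        if isinstance(node, ast.UnaryOp) and isinstance(node.op, ast.USub):
            return -ev(node.operand)
        raise ValueError("unsupported expression")

    return ev(ast.parse(expression, mode="eval").body)


def prompt_with_tool_result(question, expression):
    """Attach the calculator's output so the model does not redo the arithmetic."""
    result = calculate(expression)
    return (
        f"{question}\n\n"
        f"Calculator output for `{expression}`: {result}\n"
        "Use this value rather than computing it yourself."
    )


print(prompt_with_tool_result("What is the total cost?", "12345 * 6789"))
```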

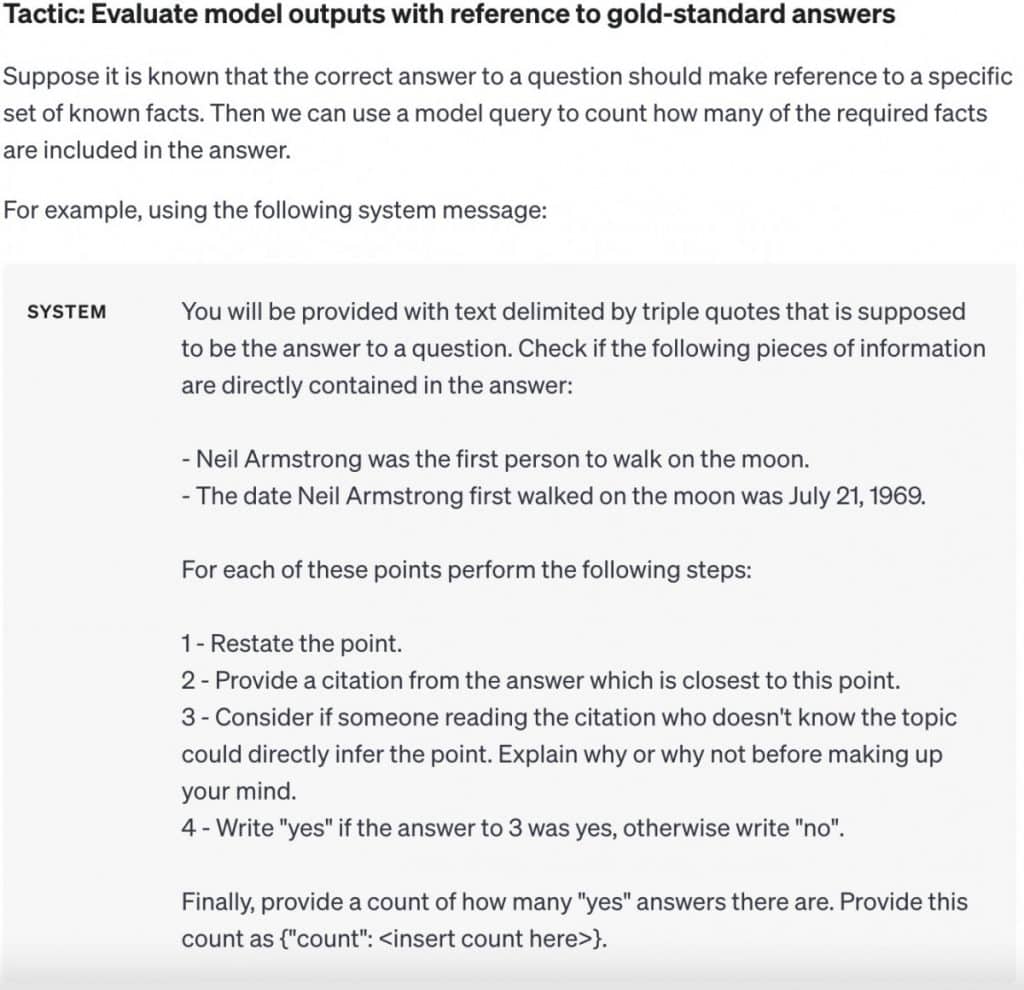

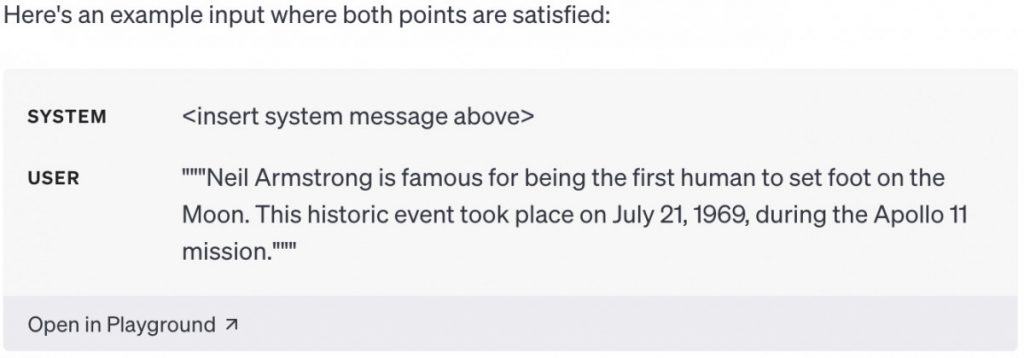

Test Changes Systematically

Improving performance is easier when it can be measured. A prompt change may improve results on a few isolated examples yet make overall performance worse on a more representative set. To confirm that a change is a net improvement, it may be necessary to build a comprehensive suite of tests.
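A small sketch of what such a test suite might look like: score each prompt template against the same set of question and expected-answer pairs, then compare the scores. The names `score_prompt` and `fake_complete`, the substring check, and the canned replies are all assumptions for illustration; a real evaluation would call an actual model and would likely need a stricter grading rule.

```python
def score_prompt(template, cases, complete):
    """Return the fraction of cases whose reply contains the expected text."""
    passed = 0
    for question, expected in cases:
        reply = complete(template.format(question=question))
        if expected.lower() in reply.lower():
            passed += 1
    return passed / len(cases)


# Canned replies so the example runs offline; one is wrong on purpose.
_CANNED = {"2 + 2": "4", "capital of France": "Lyon"}


def fake_complete(prompt):
    # Stand-in for a real model call: looks up a canned reply by question text.
    for key, reply in _CANNED.items():
        if key in prompt:
            return reply
    return ""


cases = [("What is 2 + 2?", "4"), ("What is the capital of France?", "Paris")]
templates = {
    "baseline": "{question}",
    "concise": "Answer in one word.\n{question}",
}
for name, template in templates.items():
    print(name, score_prompt(template, cases, fake_complete))
```

With the stand-in, both templates score the same; against a real model the scores could differ, and that difference is what the comparison is meant to expose.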

By applying the strategies in the Prompt Engineering guide for GPT-4, users can get more reliable results from LLMs across a range of contexts.

Alisa, a journalist at Metaverse Post, covers cryptocurrency, zero-knowledge proofs, investments, and the broader domain of Web3. With an eye for emerging trends and technologies, she reports on the constantly changing landscape of digital finance.