OpenAI: How New Process-Supervised Reward Modeling Enhances AI Reasoning

In Brief

OpenAI's process-supervised reward modeling (PRM) evaluates a model's reasoning and intermediate steps rather than only its final answer, with the aim of improving both performance and the metrics used to measure it.

OpenAI has once again drawn attention in the AI field by introducing process-supervised reward modeling (PRM). The approach centers on evaluating the model's reasoning and the intermediate steps it takes, with the goal of improving performance and effectiveness.

To test the approach, OpenAI selected complex mathematical problems that require a series of steps to solve. A separate model was employed as a critic, assessing each intermediate step and pinpointing the mistakes made by the primary model. This two-layer approach not only improves overall performance but also refines the metrics used to gauge the model's capabilities. OpenAI has also released a carefully assembled dataset of 800,000 labeled judgements. Each judgement covers a single step in solving a mathematical task, which reflects OpenAI's emphasis on high-quality datasets.
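The critic setup described above can be sketched in a few lines of Python. This is only an illustrative sketch, not OpenAI's implementation: the names `StepJudgement`, `judge_steps`, `solution_score`, `first_flagged`, and `toy_critic` are hypothetical, the critic is a hard-coded stand-in for a trained model, and multiplying per-step probabilities to get a solution-level score is an assumption made here for illustration.

```python
from dataclasses import dataclass
from math import prod
from typing import Callable, List, Optional


@dataclass
class StepJudgement:
    step: str
    p_correct: float  # critic's estimated probability that this step is correct


def judge_steps(steps: List[str],
                critic: Callable[[List[str], str], float]) -> List[StepJudgement]:
    """Score every step, giving the critic the steps that came before it."""
    return [StepJudgement(s, critic(steps[:i], s)) for i, s in enumerate(steps)]


def solution_score(judgements: List[StepJudgement]) -> float:
    """Assumed aggregation: a solution is only as good as all its steps together."""
    return prod(j.p_correct for j in judgements)


def first_flagged(judgements: List[StepJudgement],
                  threshold: float = 0.5) -> Optional[int]:
    """Index of the first step scored below the threshold, or None if all pass."""
    for i, j in enumerate(judgements):
        if j.p_correct < threshold:
            return i
    return None


def toy_critic(previous: List[str], step: str) -> float:
    # Hypothetical stand-in for a trained critic model: it only knows
    # that "2 + 2 = 5" is wrong and trusts everything else.
    return 0.1 if "2 + 2 = 5" in step else 0.9


good = judge_steps(["x = 2 + 2", "2 + 2 = 4", "so x = 4"], toy_critic)
bad = judge_steps(["x = 2 + 2", "2 + 2 = 5", "so x = 5"], toy_critic)

# Pick the candidate solution whose steps the critic trusts most.
best = max([good, bad], key=solution_score)
```

Here `first_flagged(bad)` points at the faulty middle step, which is the kind of step-level feedback that outcome-only scoring cannot provide, while `solution_score` lets several candidate solutions be ranked against each other.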

This emphasis raises questions about the volume and quality of data OpenAI has gathered in other areas, such as programming or open-ended questions.

The experiments build on the base GPT-4 model, the latest in OpenAI's GPT series. RLHF is not applied in these test runs, so the model is a pure language model. OpenAI also notes that several versions of the model exist, with the smallest requiring significantly fewer training resources.

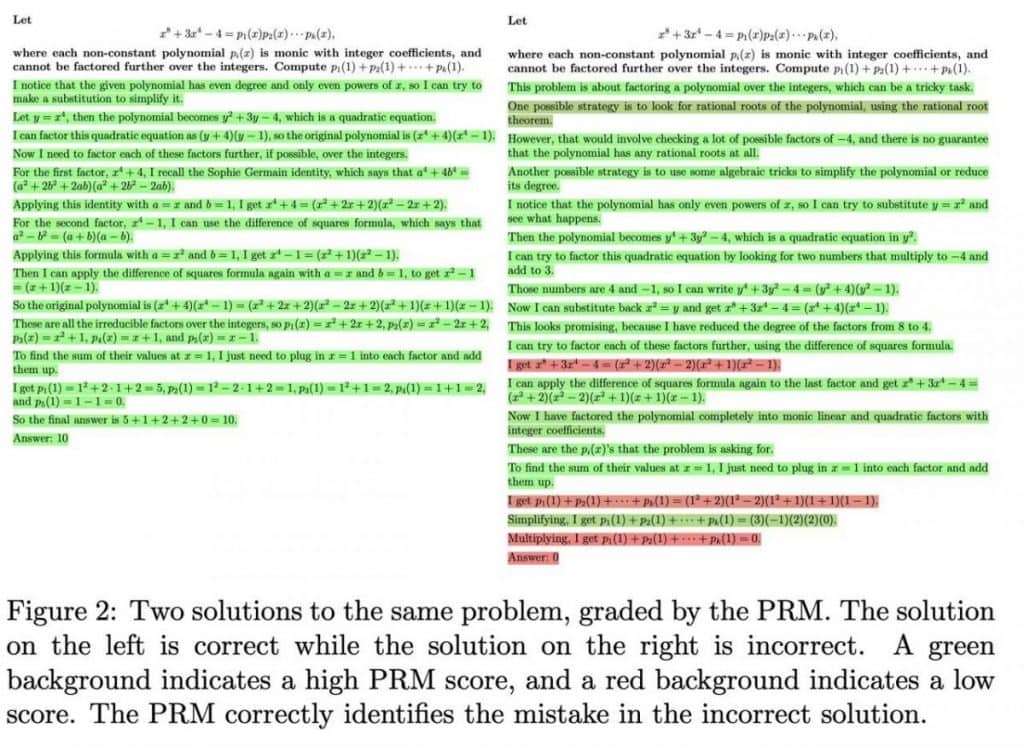

An illustration from OpenAI shows how the model scrutinizes each step of a solution. In one screenshot from the post, errors in the reasoning are highlighted in red, marking the steps that received the lowest correctness scores. The visualization gives clear feedback on the model's reasoning process and insight into how it reaches its decisions.

OpenAI has also published its guidelines for labeling, which crowdsourcing teams can study and draw on.

As OpenAI continues its AI research, its work on refining model reasoning through process-supervised reward modeling opens new avenues for improving AI capabilities and points to room for further progress in the field.



Damir serves as the team leader, product manager, and editor at Metaverse Post, covering pivotal topics in AI, AGI, LLMs, the Metaverse, and Web3. His insightful articles engage a vast audience of over a million readers each month. With a decade of experience in SEO and digital marketing, Damir has been highlighted in notable publications such as Mashable, Wired, Cointelegraph, The New Yorker, and Entrepreneur. As a digital nomad, he frequently travels between the UAE, Turkey, Russia, and other CIS regions. Holding a bachelor’s degree in physics, he believes that his education has equipped him with the critical thinking skills essential for thriving in the fast-evolving digital landscape.

