OpenAI Debuts Its 'Preparedness Framework' Aiming to Mitigate Risks in AI Development

In Brief

In an effort to enhance safety, OpenAI has introduced its 'Preparedness Framework', a set of tools and processes designed to address concerns about the responsible development of AI models.

Microsoft-backed AI company OpenAI has rolled out its 'Preparedness Framework', a strategic set of tools and methods that aims to alleviate mounting concerns about the safety of advanced AI model development.

The Sam Altman-led company made the announcement through a detailed plan published on its website, showcasing the organization’s dedication to managing risks that emerge from its technologies. It also shared more details about the framework on social media platform X.

OpenAI’s framework grants the board the authority to overturn safety-related decisions made by executives, implementing an oversight layer for the rollout of their latest technologies. Additionally, the company specified rigorous requirements for technology deployment, concentrating on safety evaluations in critical fields like cybersecurity and nuclear risks.

A key component of OpenAI's strategy involves forming an advisory committee responsible for scrutinizing safety reports. The committee's findings will be relayed to the company's executives and board, emphasizing a collaborative method for addressing safety concerns.

Responsible Strategy Towards Industry Challenges

This initiative by OpenAI is a response to increasing worries within the AI sector about the risks that come with more capable models. Back in April, a coalition of industry leaders and experts called for a six-month halt on the development of systems exceeding the capabilities of OpenAI’s GPT-4, citing potential societal risks.

Led by Sam Altman, OpenAI is enhancing internal safety protocols to proactively manage the evolving risks associated with dangerous AI applications. A specialized team will be overseeing technical operations and safety-related decision-making, with a structured operational framework to ensure rapid responses to emerging threats.

The framework entails developing risk 'scorecards' to monitor various potential harm indicators, triggering assessments and actions if specific risk levels are crossed.

“We plan to conduct assessments and continuously update the 'scorecards' for our models. All advanced models will undergo evaluations, especially prior to every doubling of effective computational capacity during training. We'll stress-test models to their limits,” the ChatGPT maker stated.

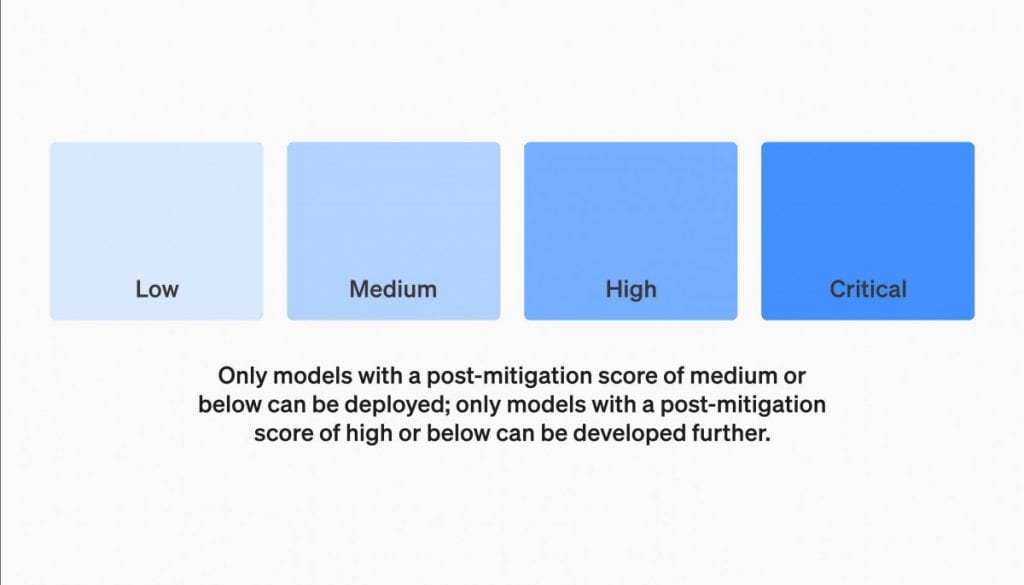

“As per our safety standards, only models that achieve a post-mitigation score categorized as medium or lower can be deployed, and only models with a post-mitigation score of high or lower can proceed to further development. We also enhance security measures commensurate with the level of risk associated with the models,” it remarked.
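The deployment rule quoted above can be read as two threshold checks on a model's post-mitigation score. The following is a minimal sketch of that reading, not OpenAI's implementation: the four-level scale (low, medium, high, critical), the function names, the example scorecard, and the choice to take the highest category score as the overall score are all assumptions made for illustration.

```python
from enum import IntEnum
from typing import Mapping


class RiskLevel(IntEnum):
    """Assumed ordered risk scale; higher values mean greater risk."""
    LOW = 0
    MEDIUM = 1
    HIGH = 2
    CRITICAL = 3


def overall_score(scorecard: Mapping[str, RiskLevel]) -> RiskLevel:
    """Assumption: the overall score is the worst score in any tracked category."""
    return max(scorecard.values())


def can_deploy(post_mitigation: RiskLevel) -> bool:
    """Only models scoring medium or lower after mitigation can be deployed."""
    return post_mitigation <= RiskLevel.MEDIUM


def can_develop_further(post_mitigation: RiskLevel) -> bool:
    """Only models scoring high or lower after mitigation can be developed further."""
    return post_mitigation <= RiskLevel.HIGH


# Hypothetical post-mitigation scorecard for a single model.
scorecard = {
    "cybersecurity": RiskLevel.HIGH,
    "nuclear": RiskLevel.LOW,
}

score = overall_score(scorecard)
print(score.name, can_deploy(score), can_develop_further(score))
```

Under these assumptions, a model whose worst category is rated high could continue development but could not be deployed until further mitigations bring that rating down to medium or lower.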

Moreover, the company underscores the adaptable nature of its framework, committing to ongoing updates based on new information, feedback, and research. It also pledges to work closely with both external and internal parties to monitor real-world misuse, reflecting a dedication to accountability, transparency, and responsible AI development.

As OpenAI takes significant steps to tackle safety issues, it sets a standard for responsible and secure AI development at a time when the capabilities of generative AI technologies both captivate and raise critical concerns about their societal implications.

Kumar Gandharv