Nvidia has introduced eDiff-I, a cutting-edge generative AI for text-to-image synthesis with rapid style transfer.

In Brief

Nvidia rolls out eDiff-I, aimed at assisting enterprises in crafting striking and high-quality imagery.

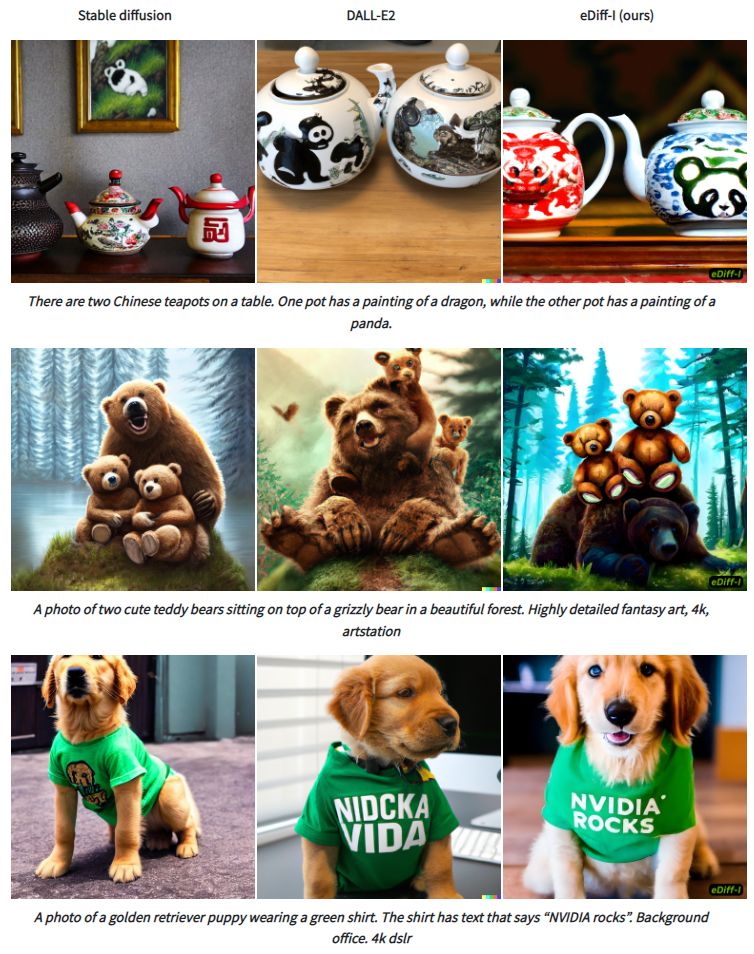

The eDiff-I approach consistently offers better synthesis quality than alternatives such as Stable Diffusion and DALL-E 2.

eDiff-I is an advanced content generation tool recently introduced by Nvidia, and it could be a game changer for marketing professionals and businesses alike. With eDiff-I, businesses can create attractive visuals quickly and effectively without high-end equipment or specialized expertise. Using natural language processing (NLP), it interprets the user's input and generates relevant images. The AI then evaluates these images and selects the one that best fits the context. The resulting visuals look polished and professional and can be used in promotional materials, social media campaigns, and email marketing.

eDiff-I is a next-generation generative AI content generation tool. It creates visuals from textual descriptions (text-to-image) and supports rapid style transfer and a unique "paint with words" function. As a diffusion model for text-to-image synthesis, eDiff-I proposes an ensemble of expert denoising networks, each specialized for a specific noise range. The design reflects the observation that diffusion models behave differently at different stages of sampling.
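To make the idea of noise-range experts more concrete, here is a minimal sketch of how a sampler might route each step to the expert responsible for the current noise level. It is an illustration only, not Nvidia's implementation: the `Expert` class, the toy denoisers, and the noise thresholds (1.0 and 10.0) are all assumptions made for this example.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

# Hypothetical type: a denoiser maps (noisy sample, noise level) to a cleaner sample.
Denoiser = Callable[[List[float], float], List[float]]


@dataclass
class Expert:
    name: str
    sigma_min: float  # inclusive lower bound of the noise range
    sigma_max: float  # exclusive upper bound of the noise range
    denoise: Denoiser


def make_toy_denoiser(strength: float) -> Denoiser:
    # Stand-in for a trained network: it only shrinks values toward zero.
    def denoise(x: List[float], sigma: float) -> List[float]:
        return [v * (1.0 - strength) for v in x]
    return denoise


def pick_expert(experts: List[Expert], sigma: float) -> Expert:
    # Route the step to the expert whose noise range contains sigma.
    for expert in experts:
        if expert.sigma_min <= sigma < expert.sigma_max:
            return expert
    raise ValueError(f"no expert covers noise level {sigma}")


def sample(experts: List[Expert], x: List[float],
           sigmas: List[float]) -> Tuple[List[float], List[str]]:
    # Walk the noise schedule from high to low noise, one expert per step.
    used = []
    for sigma in sigmas:
        expert = pick_expert(experts, sigma)
        x = expert.denoise(x, sigma)
        used.append(expert.name)
    return x, used


# Assumed noise ranges, chosen only for illustration.
EXPERTS = [
    Expert("high_noise", 10.0, float("inf"), make_toy_denoiser(0.5)),
    Expert("mid_noise", 1.0, 10.0, make_toy_denoiser(0.2)),
    Expert("low_noise", 0.0, 1.0, make_toy_denoiser(0.05)),
]

final, used = sample(EXPERTS, [1.0, -2.0], [80.0, 20.0, 5.0, 0.5])
print(used)
```

The point of the sketch is the routing in `pick_expert`: early, noisy steps and late, nearly clean steps are handled by different networks rather than by a single shared one.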

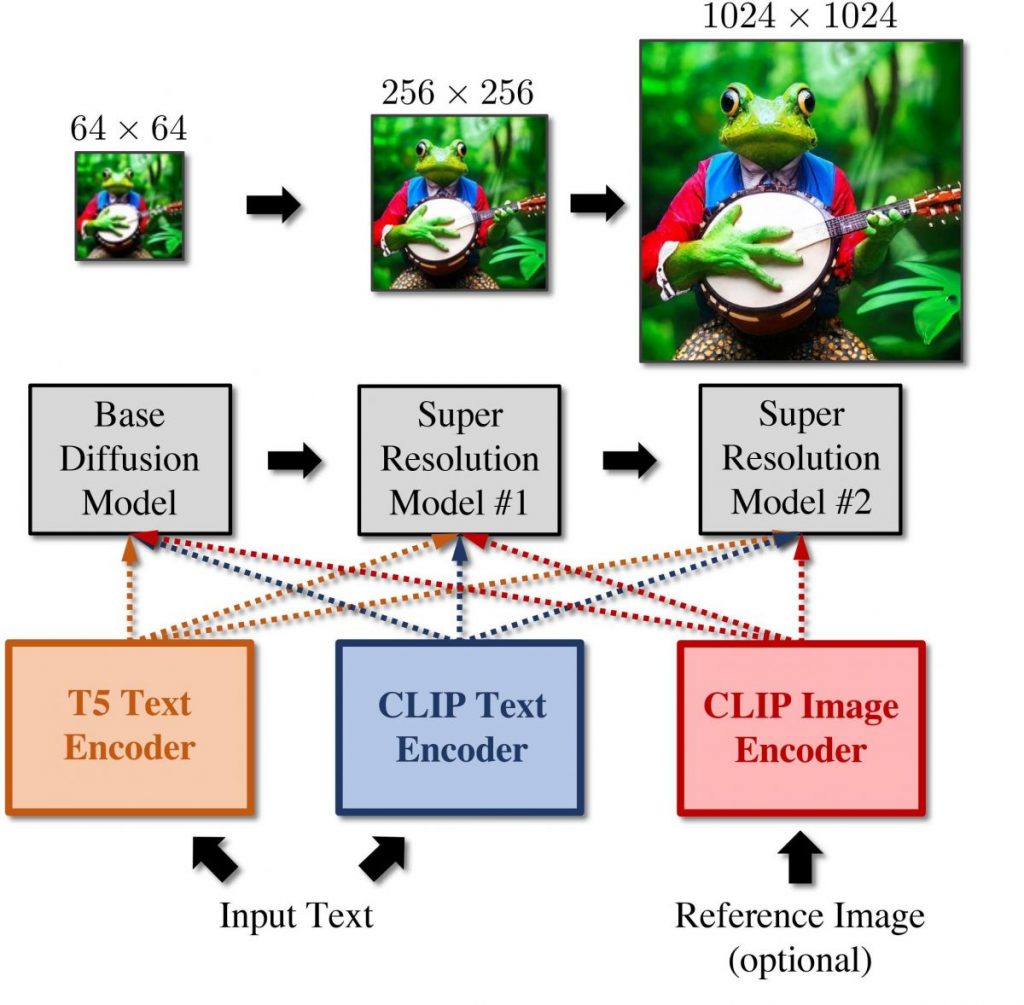

eDiff-I is conditioned on T5 text embeddings, CLIP image embeddings, and CLIP text embeddings, which allows it to generate photorealistic images for any text prompt.

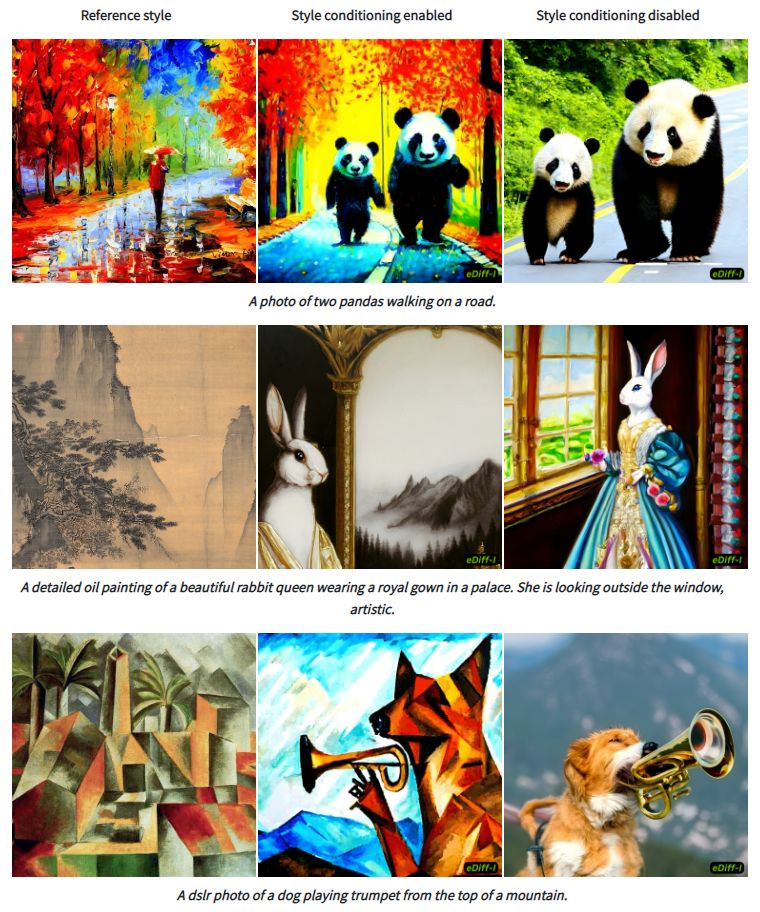

Besides text-to-image generation, it offers two additional capabilities: (1) style transfer, which controls the style of the generated image using a reference image, and (2) a feature dubbed "Paint with Words", which lets users lay out an image by sketching segmentation maps directly on a canvas.

The pipeline is a cascade of three diffusion models: a base model that produces images at 64×64 resolution, followed by two super-resolution models that progressively upsample the images to 256×256 and 1024×1024, respectively. Given an input caption, the models compute T5 XXL embeddings and CLIP text embeddings. CLIP image embeddings computed from a reference image can optionally be supplied as well, serving as a style vector. These embeddings are then fed to the cascade, which progressively produces the final 1024×1024 image. The eDiff-I approach is reported to consistently outperform publicly available text-to-image models.
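The resolution steps of the cascade can be sketched in a few lines. In the sketch below, the function names are hypothetical and nearest-neighbour upsampling stands in for the two super-resolution diffusion models, which are far more sophisticated in the real system. Only the 64 → 256 → 1024 staging comes from the description above.

```python
from typing import List, Tuple

# A square grayscale grid, used here as a stand-in for a real image tensor.
Image = List[List[float]]

# Output resolutions of the three stages, as described in the article.
STAGE_SIZES = [64, 256, 1024]


def base_model(seed_value: float, size: int) -> Image:
    # Placeholder for the base diffusion model: returns a smooth gradient.
    return [[seed_value + 0.001 * (r + c) for c in range(size)]
            for r in range(size)]


def upsample_nearest(img: Image, factor: int) -> Image:
    # Placeholder for a super-resolution model: repeats every pixel
    # `factor` times horizontally and vertically.
    out: Image = []
    for row in img:
        wide = [v for v in row for _ in range(factor)]
        out.extend(list(wide) for _ in range(factor))
    return out


def run_cascade(seed_value: float) -> Tuple[Image, List[int]]:
    img = base_model(seed_value, STAGE_SIZES[0])
    sizes = [len(img)]
    for target in STAGE_SIZES[1:]:
        factor = target // len(img)  # 4x at each super-resolution stage
        img = upsample_nearest(img, factor)
        sizes.append(len(img))
    return img, sizes


image, sizes = run_cascade(0.5)
print(sizes)
```

Each super-resolution stage enlarges the image by a factor of four per side, which is why two stages are enough to go from 64×64 to 1024×1024.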

CLIP image embeddings are what allow eDiff-I to perform style transfer. The model first extracts CLIP image embeddings from a reference style image, which then act as a style guide for generation. In the accompanying figure, the left panel shows the style reference, the center panel shows results with style conditioning enabled, and the right panel shows results without it. With style conditioning enabled, eDiff-I produces outputs that follow both the reference style and the input caption. Without it, the model produces natural-looking images.
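The optional nature of the style input can be illustrated with a small sketch that assembles the conditioning signals for one generation request. Everything here is hypothetical: `toy_embed` is a deterministic stand-in and not a real T5 or CLIP encoder, the embedding size is arbitrary, and replacing a missing style embedding with zeros is an assumption made for this example, not a confirmed detail of eDiff-I.

```python
from typing import Dict, List, Optional

EMBED_DIM = 4  # hypothetical size, kept tiny for readability


def toy_embed(text: str, dim: int = EMBED_DIM) -> List[float]:
    # Deterministic stand-in for a real encoder (NOT T5 or CLIP).
    total = sum(ord(ch) for ch in text)
    return [((total * (i + 1)) % 97) / 97.0 for i in range(dim)]


def build_conditioning(caption: str,
                       style_embedding: Optional[List[float]] = None
                       ) -> Dict[str, object]:
    # Text embeddings are always present; the image embedding is optional.
    if style_embedding is None:
        clip_image = [0.0] * EMBED_DIM  # assumed "no style" placeholder
    else:
        clip_image = list(style_embedding)
    return {
        "t5_text": toy_embed("t5:" + caption),
        "clip_text": toy_embed("clip:" + caption),
        "clip_image": clip_image,
        "style_enabled": style_embedding is not None,
    }


caption = "a rabbit on a hill"
plain = build_conditioning(caption)
styled = build_conditioning(caption, style_embedding=[0.1, 0.2, 0.3, 0.4])
print(plain["style_enabled"], styled["style_enabled"])
```

Both requests share the same text conditioning, so any difference between the two outputs would come from the style vector alone.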

Users of eDiff-I can control the placement of objects mentioned in the text prompt by selecting words and sketching where they should appear on the image. The model then uses the prompt and the maps to generate an image that is consistent with both the caption and the provided map.
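One possible way to represent this kind of input is a table that records, for every pixel, which prompt words the user painted there. The sketch below builds such a table from per-word binary masks. The function name and data layout are assumptions made for illustration, and the article does not describe how the model actually consumes the maps.

```python
from typing import Dict, List

Mask = List[List[int]]  # binary grid: 1 where the user painted the word


def build_alignment(tokens: List[str], word_masks: Dict[str, Mask],
                    height: int, width: int) -> List[List[int]]:
    # Rows are pixels in row-major order, columns are prompt tokens.
    matrix = [[0] * len(tokens) for _ in range(height * width)]
    for t, token in enumerate(tokens):
        mask = word_masks.get(token)
        if mask is None:
            continue  # word was not painted, so it has no spatial constraint
        for r in range(height):
            for c in range(width):
                if mask[r][c]:
                    matrix[r * width + c][t] = 1
    return matrix


tokens = ["a", "rabbit", "on", "a", "hill"]
word_masks = {
    "rabbit": [[1, 1, 0],
               [0, 0, 0]],
    "hill":   [[0, 0, 0],
               [1, 1, 1]],
}
alignment = build_alignment(tokens, word_masks, height=2, width=3)
for row in alignment:
    print(row)
```

Words without a mask, such as "a" and "on" here, leave their columns empty, which matches the idea that only the selected words are tied to a location.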

