The innovative GigaGAN text-to-image model is capable of generating 4K images in just 3.66 seconds.

In Brief

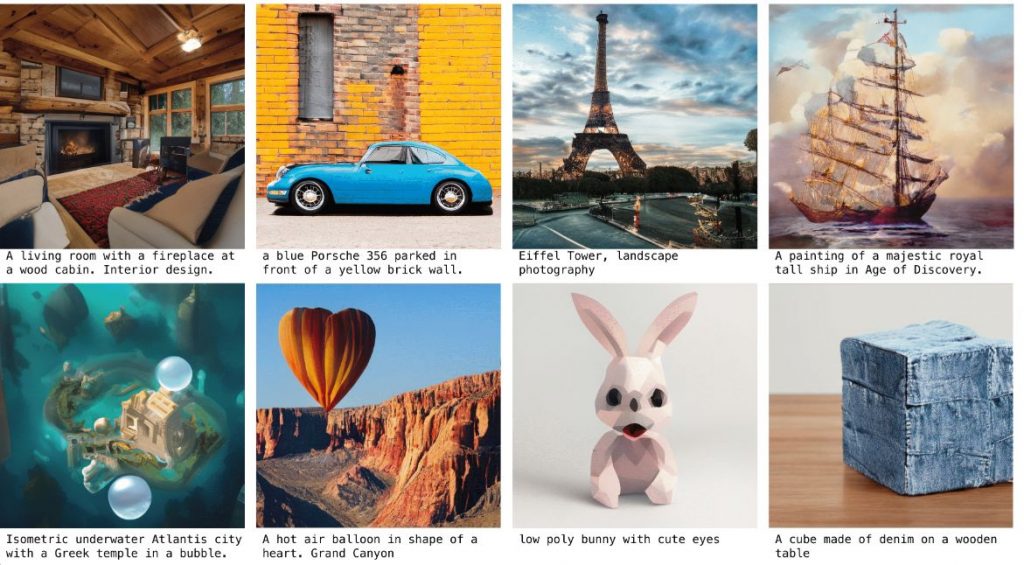

The researchers have launched GigaGAN, an advanced text-to-image model that can create 4K images in only 3.66 seconds.

This model is built upon the generative adversarial network (GAN) architecture, which is a specific category of neural networks that excel in learning how to recreate data that closely resembles the data it was trained on. neural network GigaGAN can produce 512px images in just 0.13 seconds, outpacing the previous best models by a factor of ten. Additionally, it boasts a disentangled, continuous, and easily controllable latent space.

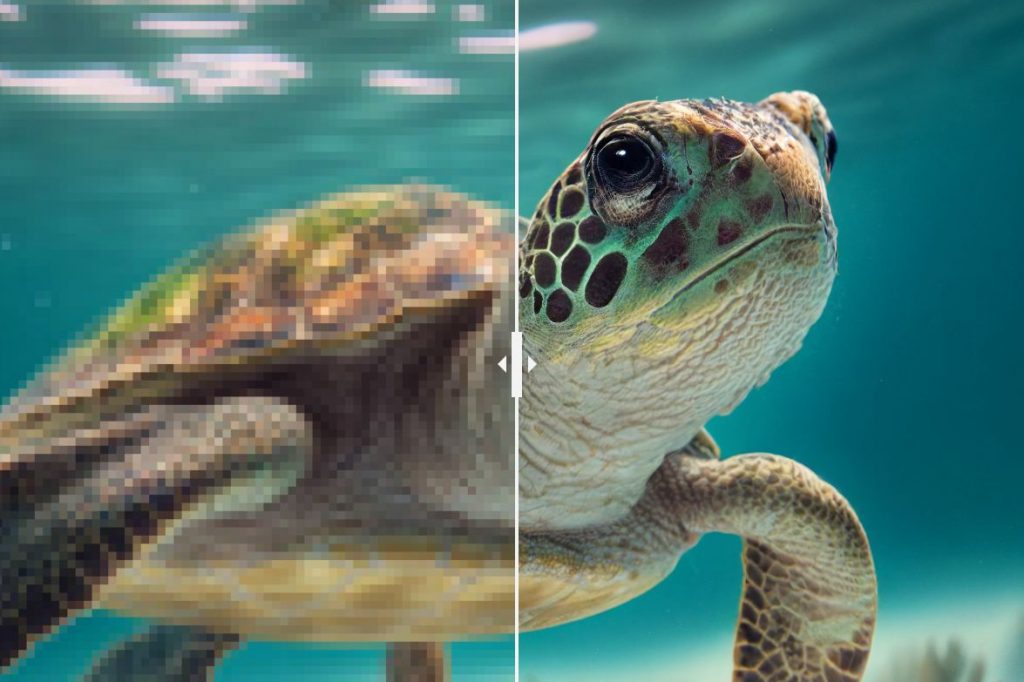

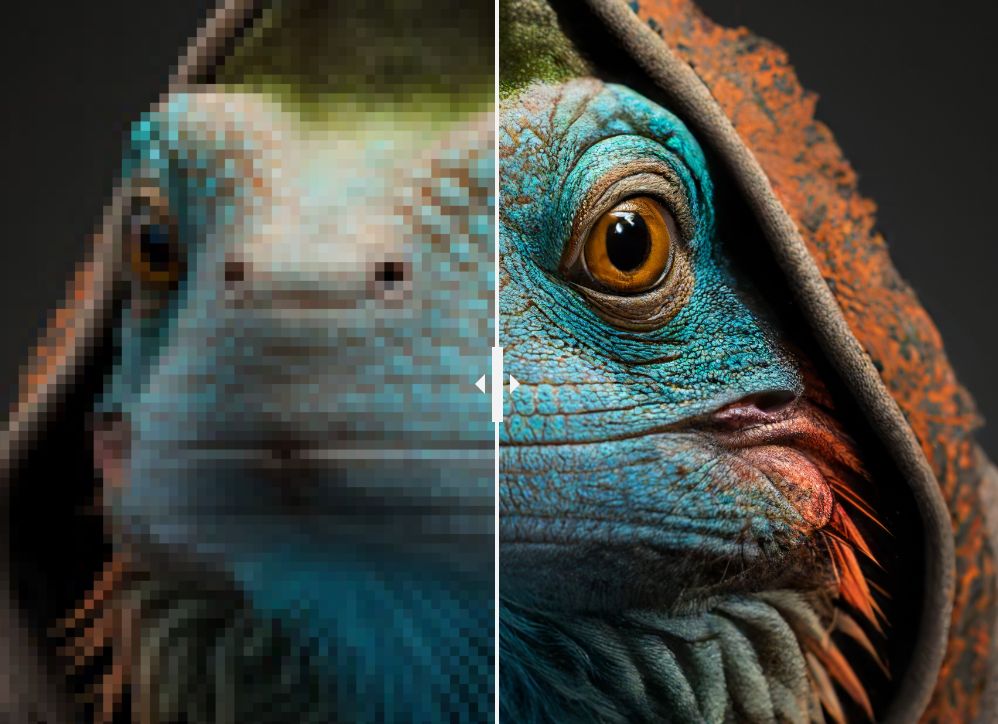

It also has the functionality to train an advanced upsampling tool that elevates image quality.

The text-to-image model, GigaGAN, operates within the remarkable timeframe of 3.66 seconds to generate images. This outperforms earlier text-to-image models, which often require several minutes or even hours for single image creation. GigaGAN that can generate 4K images The GigaGAN model shines as it consistently generates 4K images at lightning speed of 3.66 seconds.

Top 5 Exciting Text-to-Image AI Models to Watch in 2023

Moreover, GigaGAN features a disentangled, continuous, and controllable latent space, providing it the versatility to create a wide array of styles while allowing for some degree of input control. Notably, GigaGAN can maintain the layout of input text, which is crucial for tasks such as rendering product designs from textual descriptions.

Additionally, GigaGAN serves as an effective training ground for a high-quality upsampler, applicable to either real-world images or outputs from other models.

The GigaGAN generator incorporates components like a text encoding branch, style mapping network, and a multi-scale synthesis network, optimized with stable attention and adaptive kernel selection techniques. Developers initiate the process by extracting text embeddings using a pre-trained CLIP model, incorporating learned attention layers. text-to-image models .

Similar to the generator's structure, the style mapping component takes the embedding and crafts the style vector w. The synthesis network then utilizes this style vector in tandem with the text embeddings to create an image pyramid. Notably, developers introduce adaptive kernel selection that changes convolution kernels based on the specific text input conditions. StyleGAN The discriminator of GigaGAN, mirroring the generator’s structure, consists of dual branches that process both image and text information. Its text branch handles input text, while the image branch processes a pyramid of images, making independent predictions for each scale and across all layers of downsampling. Moreover, supplementary losses are employed to promote effective convergence.

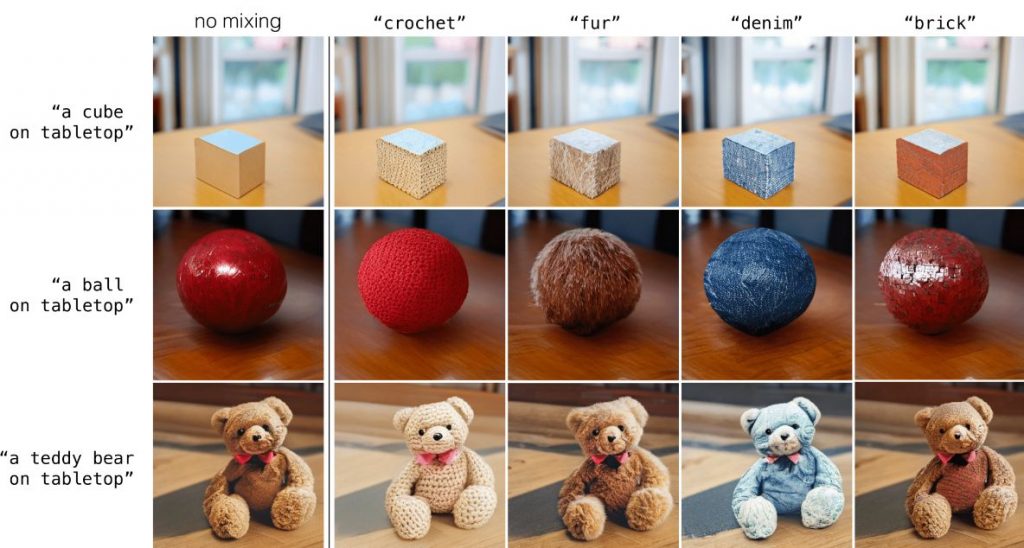

As evidenced by the interpolation grid, GigaGAN encourages seamless transitions between prompts. The four corners of this grid showcase similarities derived from the same latent z yet differing text prompts.

Thanks to its highly organized latent space, GigaGAN enables developers to merge the general style of one example with the intricate style of another. Furthermore, it offers direct control over style through textual prompts.

Midjourney and Dall-E Artistic Styles Overview: Showcasing 130 Distinct AI Art Techniques

Read more related articles:

Disclaimer

In line with the Trust Project guidelines Damir serves as the team lead, product manager, and editor at Metaverse Post, focusing on topics like AI/ML, AGI, LLMs, the Metaverse, and Web3. His captivating articles draw an impressive audience exceeding one million readers monthly. With a solid decade of experience in SEO and digital marketing, Damir has been featured in prominent platforms like Mashable, Wired, Cointelegraph, and more. As a digital nomad, he travels across the UAE, Turkey, Russia, and the CIS. He holds a bachelor's degree in physics, a background he argues bolsters his analytical skills necessary for navigating the dynamic digital landscape.