Falcon 180B Debuts, Standing Alongside GPT-4 and Google’s PaLM 2

In Brief

Falcon 180B is an advanced AI language model featuring a groundbreaking 180 billion parameters, establishing a new paradigm within the technology sector.

With a training dataset of 3.5 trillion tokens, this model outshines rivals such as Meta's LLaMA 2.

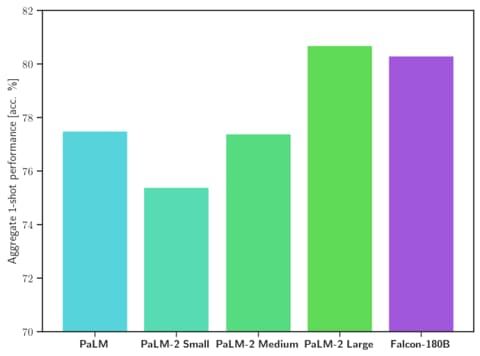

The launch of Falcon 180B marks its emergence as a powerful player in AI, thanks to its 180 billion parameters. Trained on 3.5 trillion tokens, the model performs strongly across a variety of tasks, including logical reasoning, software development, and knowledge evaluations. Falcon 180B doesn’t just compete with its rivals but frequently outperforms them, notably Meta’s LLaMA 2.

Falcon 180B is on the same level as OpenAI’s GPT-4 and matches the performance of Google’s PaLM 2 Large. This accomplishment highlights the extraordinary capabilities of this language model.

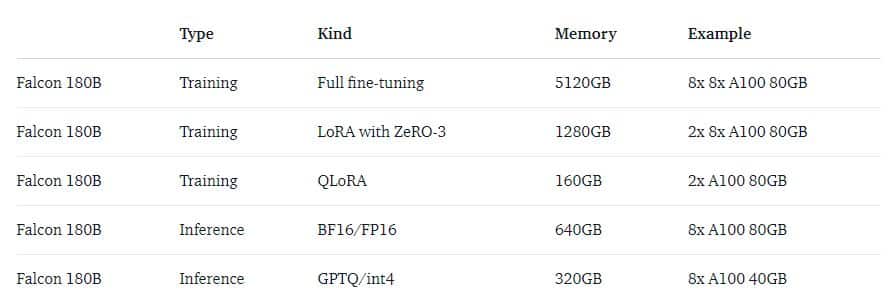

For in-depth details about Falcon 180B, visit the dedicated page, where you’ll find both a demo and the user agreement. It’s important to note that Falcon 180B requires significant computational resources and may not run on typical hardware. Enthusiasts are looking forward to compatible hardware such as the NVIDIA GH200 Grace Hopper, which offers 282 GB of VRAM in its dual configuration. This hardware was announced at SIGGRAPH 2023.
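
To make the hardware requirement concrete, the following back-of-the-envelope sketch estimates the memory needed just to hold 180 billion parameters at a few common numeric precisions. The precisions and bytes-per-parameter values are assumptions chosen for illustration, not settings confirmed by the Falcon 180B release, and the estimate ignores activations, the key-value cache, and framework overhead.

```python
# Rough weights-only memory estimate for a 180-billion-parameter model.
# Assumption: the precisions below are illustrative choices, not settings
# confirmed by the Falcon 180B release.

PARAMS = 180e9  # parameter count stated in the article

# Bytes used to store one parameter at each (assumed) precision.
BYTES_PER_PARAM = {"float32": 4.0, "float16/bfloat16": 2.0, "int8": 1.0, "4-bit": 0.5}


def weight_memory_gb(params: float, bytes_per_param: float) -> float:
    """Return the memory needed for the weights alone, in decimal GB."""
    return params * bytes_per_param / 1e9


def fits(params: float, bytes_per_param: float, vram_gb: float) -> bool:
    """True if the weights alone fit in the given amount of VRAM."""
    return weight_memory_gb(params, bytes_per_param) <= vram_gb


if __name__ == "__main__":
    DUAL_GH200_VRAM_GB = 282  # dual-superchip figure quoted in the article
    for name, nbytes in BYTES_PER_PARAM.items():
        gb = weight_memory_gb(PARAMS, nbytes)
        verdict = "fits" if fits(PARAMS, nbytes, DUAL_GH200_VRAM_GB) else "does not fit"
        print(f"{name:>17}: {gb:6.0f} GB of weights, {verdict} in {DUAL_GH200_VRAM_GB} GB")
```

Under these assumptions, 16-bit weights need roughly 360 GB and exceed even 282 GB of VRAM, while 8-bit weights need roughly 180 GB and would fit with room left over for inference state.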

Notable Features of NVIDIA GH200 Grace Hopper

The GH200 Grace Hopper is set to transform the Generative AI landscape, incorporating not just a high-performance GPU but also an integrated ARM processor.

- The base model is outfitted with 141 GB of VRAM and 72 ARM Neoverse cores, along with 480 GB of LPDDR5X RAM.

- Utilizing NVIDIA NVLink technology, it allows for the integration of dual 'superchips', resulting in a staggering 480×2 GB of high-speed memory.

- This dual-chip setup yields an impressive 282 GB of VRAM, backed by 144 ARM Neoverse cores, and achieves 7.9 PFLOPS of int8 performance, on par with a dual H100 NVL.

- Moreover, the latest HBM3e memory technology boasts a remarkable 50% speed improvement over its predecessor, HBM3, providing an astounding 10 TB/s of combined bandwidth.

- This technological breakthrough is expected to open up new avenues in AI research and practical applications, facilitating intricate and data-heavy tasks more efficiently.
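
The dual-superchip figures in the list above follow from doubling the base configuration. The short sketch below reproduces that arithmetic. The `Superchip` class and its field names are hypothetical, introduced only for illustration, and the numbers are the ones quoted in this article.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Superchip:
    """Hypothetical container for the per-chip figures quoted in the article."""
    vram_gb: int      # GPU memory (VRAM)
    cpu_cores: int    # ARM Neoverse cores
    lpddr5x_gb: int   # CPU-attached LPDDR5X RAM

    def scaled(self, count: int) -> "Superchip":
        """Totals for `count` identical superchips linked together."""
        return Superchip(
            self.vram_gb * count,
            self.cpu_cores * count,
            self.lpddr5x_gb * count,
        )


base = Superchip(vram_gb=141, cpu_cores=72, lpddr5x_gb=480)
dual = base.scaled(2)

print(dual)
```

Doubling the base model gives 282 GB of VRAM, 144 ARM Neoverse cores, and 960 GB of LPDDR5X RAM.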

Damir leads the team, managing products and editing at Metaverse Post, focusing on topics such as AI/ML, AGI, LLMs, the Metaverse, and Web3. His work reaches an audience of over a million users monthly, backed by a decade of experience in SEO and digital marketing. Featured in publications such as Mashable, Wired, and Cointelegraph, he travels between the UAE, Turkey, Russia, and the CIS as a digital nomad. He holds a bachelor’s degree in physics, which he believes has equipped him with the critical thinking skills needed to thrive in the constantly evolving digital landscape.