Meet MusicLM, Google's new AI model that generates music from text and images.

In Brief

Google has unveiled MusicLM, a model that generates high-fidelity music from text descriptions.

One notable feature of MusicLM is that it accepts both text and a melody as input, so it can transform a whistled or hummed tune into the style described in the text prompt.

The model can generate music in numerous styles, including classical, jazz, and rock.

MusicLM treats music generation as a hierarchical sequence-to-sequence modeling task, producing audio at 24 kHz that remains consistent over several minutes. It generates music from detailed text prompts such as 'serene violin accompanied by a crunchy guitar riff.'
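For readers less familiar with audio terminology, 24 kHz is a sample rate: 24,000 amplitude values for every second of sound. The short Python sketch below is purely illustrative and is not part of MusicLM; it assumes a single (mono) channel, and the helper name `num_samples` is hypothetical. It shows how quickly the raw sample count grows over the longer durations mentioned in this article.

```python
# Illustrative arithmetic only, not MusicLM code.
# Assumption: a single (mono) audio channel.
SAMPLE_RATE_HZ = 24_000  # the 24 kHz output rate reported for MusicLM


def num_samples(duration_s: float, sample_rate_hz: int = SAMPLE_RATE_HZ) -> int:
    """Hypothetical helper: number of discrete samples in a mono clip."""
    return round(duration_s * sample_rate_hz)


if __name__ == "__main__":
    # Print the sample count for a few clip lengths.
    for label, seconds in [("30-second clip", 30), ("1 minute", 60), ("5 minutes", 300)]:
        print(f"{label}: {num_samples(seconds):,} samples")
```

Under these assumptions, one minute of audio already contains 1,440,000 samples, which gives a sense of why keeping generated music coherent over several minutes is a notable result.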

According to Google's research, MusicLM outperforms previous music generation systems in both audio quality and adherence to the text description. To support further research in this area, Google has also publicly released MusicCaps, a dataset of 5,500 music-text pairs with detailed text descriptions written by human experts.

MusicLM was trained on a large collection of music recordings, which helps it capture the arrangement and structure of music. As a result, the model can create not just variations on existing pieces but entirely new, original compositions. This marks a milestone in AI-driven music production: earlier systems were largely limited to short clips or simple melodies. The potential applications are broad; a model like this could be used to compose long, intricate pieces for films, video games, and other creative work.

The model is designed to produce long musical arrangements, with generations of up to five minutes.

Stable Diffusion technology has also been used to generate new music by producing spectrograms from text descriptions.

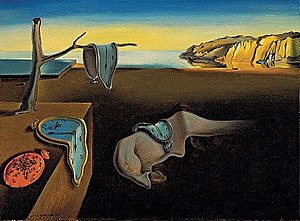

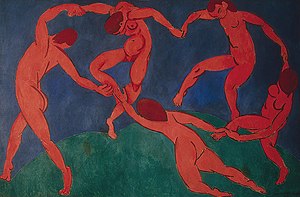

Moreover, the model is capable of generating compositions inspired by images.

Discover more about AI's impact on the music world:

Check out the top 20 examples of AI-driven music creation, featuring prompts from Mubert.

Damir is the team leader, product manager, and editor at Metaverse Post, covering AI/ML, AGI, LLMs, the Metaverse, and Web3. His articles reach an audience of over a million readers each month. With a decade of experience in digital marketing and SEO, Damir has had his insights featured in outlets such as Mashable, Wired, Cointelegraph, and The New Yorker. He works as a digital nomad, traveling between the UAE, Turkey, Russia, and other CIS countries. He holds a bachelor's degree in physics, and he applies the analytical skills it gave him to navigating the rapidly evolving digital landscape.