MIT and Google Brain Introduce Innovative Strategy for Enhancing LLMs through Multi-Agent Debates

In Brief

A research team at MIT and Google Brain has introduced an approach known as Multi-Agent Debates. The strategy uses communication between models to improve the reasoning quality and output generation of Large Language Models (LLMs). It could change how language models are used across a range of applications.

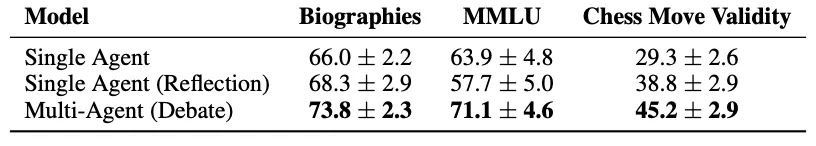

The Society of Mind framework proved more effective than the alternatives in every assessed area, showing its potential to strengthen the reasoning capabilities of LLMs.

A team from MIT and Google Brain is developing a strategy called Multi-Agent Debates, which uses communication between models to improve reasoning quality and output generation in LLMs. The approach could change how language models are used and applied in many fields.

Drawing inspiration from the work of Marvin Minsky, a significant proponent of agent-based AI and the author of “Society of Mind,” the approach aims to bolster LLM capabilities by having a group of agents collaborate to produce intelligent outcomes. Minsky’s theory suggests that intelligence stems from multiple smaller processes, referred to as 'agents,' that work together to exhibit intelligent behavior.

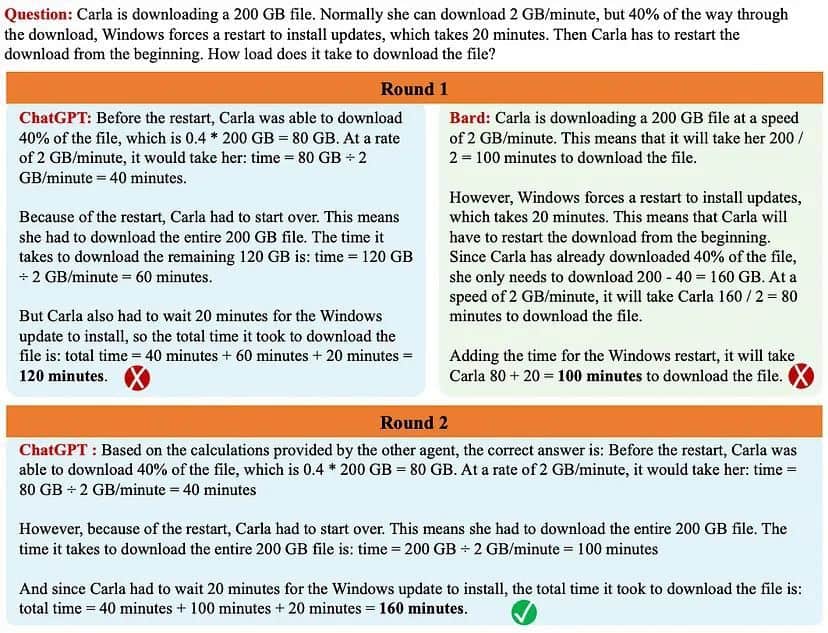

The Multi-Agent Debates method brings this idea to LLMs, forming a space where several agents can engage in discussion. Each agent receives a prompt and shares its response with the entire group. The context each LLM has at a given moment consists of its own reasoning as well as that of its peers. Over several rounds of debate, the models converge on an agreed output, similar to how humans reach consensus in conversation.

To simplify the algorithm:

- Multiple instances of language models produce distinct candidate answers for a specific query.

- Each model evaluates and critiques the responses from all others, using this feedback to refine its own answer.

- This iterative process continues until a conclusive response emerges.
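The three steps above can be sketched as a short loop. This is a minimal illustration, not the researchers' implementation: the names `debate`, `build_debate_prompt`, and `make_stub_agent`, the prompt wording, the `max_rounds` limit, and the majority-vote fallback are all assumptions made for the example. A real setup would replace the stub agents with calls to actual language models.

```python
from collections import Counter
from typing import Callable, List

# Assumption: an "agent" is any callable that maps a prompt to an answer.
Agent = Callable[[str], str]


def build_debate_prompt(question: str, own: str, others: List[str]) -> str:
    """Show an agent its own previous answer next to its peers' answers."""
    peers = "\n".join(f"- {answer}" for answer in others)
    return (
        f"Question: {question}\n"
        f"Your previous answer: {own}\n"
        f"Other agents' answers:\n{peers}\n"
        "Critique these answers and give your updated answer."
    )


def debate(question: str, agents: List[Agent], max_rounds: int = 3) -> str:
    # Step 1: every agent produces its own candidate answer.
    answers = [agent(question) for agent in agents]
    for _ in range(max_rounds):
        # Step 3: stop as soon as all agents agree.
        if len(set(answers)) == 1:
            break
        # Step 2: each agent sees the others' answers and refines its own.
        answers = [
            agent(build_debate_prompt(question, answers[i],
                                      answers[:i] + answers[i + 1:]))
            for i, agent in enumerate(agents)
        ]
    # Hypothetical fallback: majority vote if no full agreement was reached.
    return Counter(answers).most_common(1)[0][0]


def make_stub_agent(initial: str) -> Agent:
    """Toy stand-in for an LLM: adopts the most common answer it has seen."""
    own_prefix = "Your previous answer: "

    def agent(prompt: str) -> str:
        lines = prompt.splitlines()
        peers = [line[2:] for line in lines if line.startswith("- ")]
        if not peers:
            return initial  # first round: no peer answers yet
        own = next(l for l in lines if l.startswith(own_prefix))[len(own_prefix):]
        return Counter(peers + [own]).most_common(1)[0][0]

    return agent


agents = [make_stub_agent("12"), make_stub_agent("12"), make_stub_agent("15")]
final_answer = debate("What is 3 * 4?", agents)
```

With these stub agents, the one that starts at "15" switches to the majority answer after a single round, so the loop ends early.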

This methodology pushes models to formulate responses that not only hold up against their own critiques but also take into account the insights gained from other agents. Because agreement is reached only at the end, the models can explore several reasoning paths and candidate answers before a final response is established.

However, when each agent's answer is fed to the other, something remarkable occurs: ChatGPT is able to provide the correct answer by using the context of its own previous response along with Bard’s prior response.

The MIT AI Lab undertook studies to assess the effectiveness of this approach across a range of scenarios. These evaluations included accurately presenting information about notable computer scientists, confirming factual queries, and predicting optimal moves in chess. The results, illustrated in Table 1 below, demonstrate that the Society of Mind strategy outstripped other methods in every assessed area, validating its strength in boosting LLMs’ reasoning abilities.

Although this innovation shows great potential, open questions remain about when to conclude a debate. Establishing a clear stopping point for the discussion is crucial. Researchers are currently investigating metrics such as perplexity or BLEU to define this stopping criterion and ensure peak performance.
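To make the idea of a metric-based stopping point concrete, here is a minimal sketch built on perplexity, which is the exponential of the negative mean token log-probability. The stopping rule itself is purely an assumption for illustration: the function `should_stop`, the tolerance `tol`, and the idea of comparing consecutive rounds are hypothetical and are not described by the researchers.

```python
import math
from typing import List


def perplexity(token_logprobs: List[float]) -> float:
    """Perplexity = exp(-(1/N) * sum of natural-log token probabilities)."""
    return math.exp(-sum(token_logprobs) / len(token_logprobs))


def should_stop(perplexity_history: List[float], tol: float = 0.05) -> bool:
    """Hypothetical rule: stop once perplexity barely changes between rounds."""
    if len(perplexity_history) < 2:
        return False
    return abs(perplexity_history[-1] - perplexity_history[-2]) <= tol


# Assumed inputs: per-token log-probabilities of an agent's answer in a round.
round_logprobs = [math.log(0.5)] * 4
round_perplexity = perplexity(round_logprobs)
```

A debate loop could append each round's perplexity to a list and call `should_stop` before starting the next round. Whether such a rule actually tracks answer quality is an open question.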

Combining the Chain-of-Thought method with the Society of Mind concept brings us closer to a future where language models demonstrate stronger reasoning skills and produce more accurate and sophisticated responses.

