Meta has introduced an innovative open-source LLaMa-2-Chat model that promises to reshape the AI landscape with its exceptional performance.

In Brief

Meta has officially rolled out its LLaMa-2-Chat models, marking a significant advancement in the realm of open-source artificial intelligence.

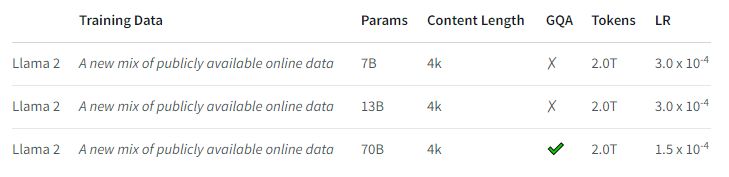

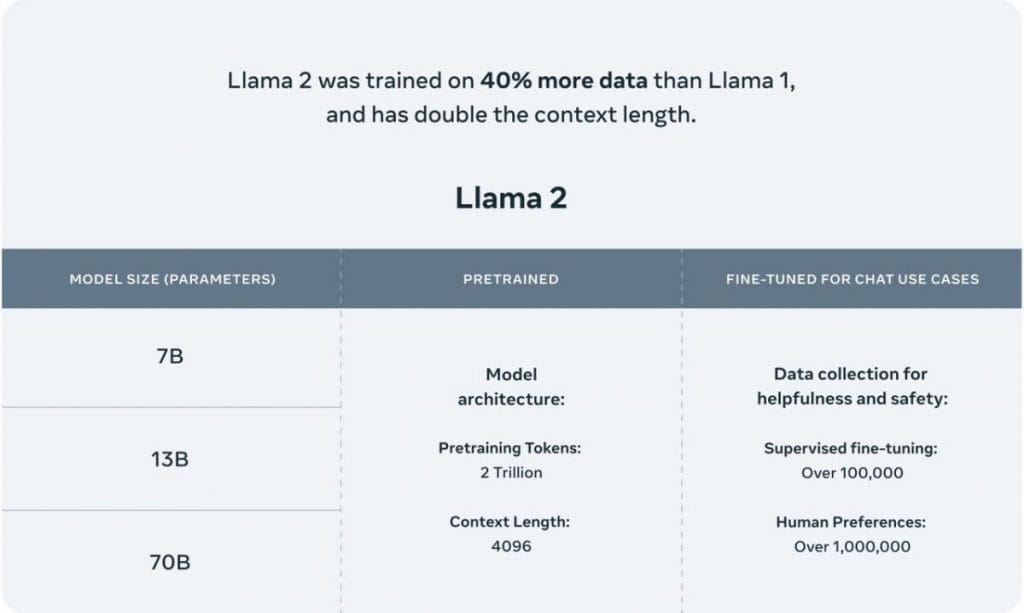

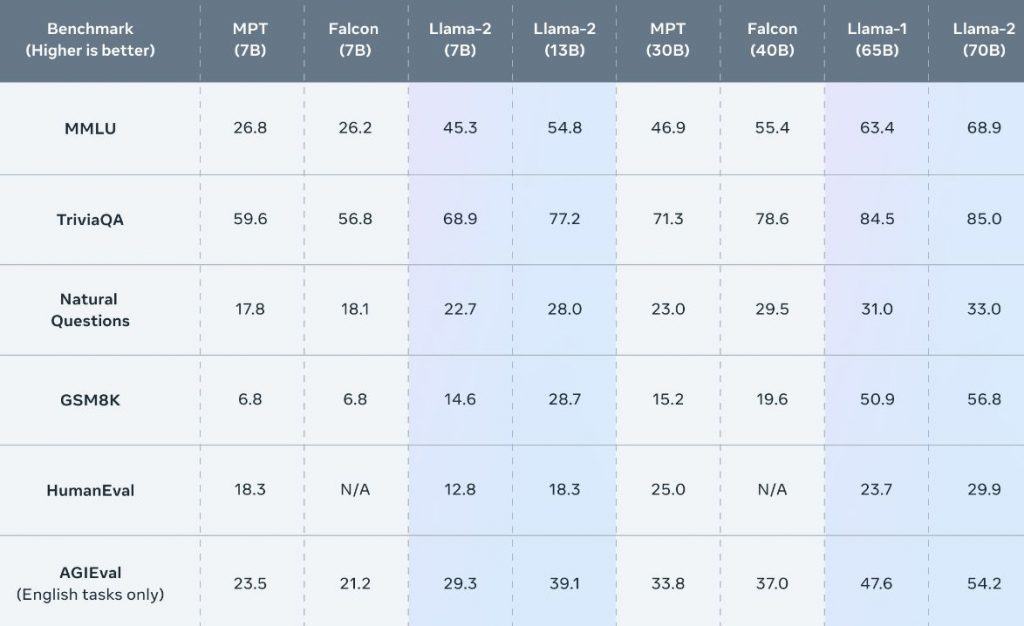

With up to 70 billion parameters, these models rival GPT-3.5 on certain benchmarks.

The models have been fine-tuned using reinforcement learning from human feedback (RLHF) to deliver ChatGPT-like interactions, and they show strong human evaluation results and problem-solving abilities.

Meta has recently launched a collection of LLaMa-2-Chat models in different configurations, generating considerable excitement in the tech community. Meta's LLM team at GenAI has delivered something noteworthy.

LLaMa-2-Chat is a notable accomplishment by GenAI's LLM team. With 70 billion parameters, the largest model matches GPT-3.5 and exceeds it on certain benchmarks. It also achieves strong scores on difficult reasoning tasks.

Highlights:

- Commercial friendly

- Pretrained on 2T tokens

- 4K context

- (Extendable) RoPE embeddings

- Coding performance is meh

- SFT/RLHF chat versions

What sets LLaMa-2-Chat apart is its status as the first model of this size fine-tuned through RLHF. Meta has made the model available for free commercial use, and users can request download links through its official platform.

One of the key benefits of LLaMa-2-Chat is its ability to facilitate the development of alternatives without requiring any data sharing with OpenAI. This empowers innovators and researchers to leverage the model's capabilities while maintaining full control over their information.

EXCITING AI NEWS!!!🔥 LLaMa 2 has just launched! And the best part? It’s completely open-source and ready for commercial use! It supports parameter counts from 7B to 70B. They've also unveiled variants specifically optimized for conversational applications (LLaMa 2-Chat)! Download the new model here.

The paper looks super detailed – 76… pic.twitter.com/yZahl7Jzya

Aleksa Gordić 🍿🤖 (@gordic_aleksa), July 18, 2023

When it comes to human evaluation scores, LLaMa-2-Chat rivals ChatGPT-3.5 in overall quality. It particularly excels at solving mathematical problems, outperforming several other models in this area.

The original LLaMa is a large language model built for AI researchers. Available in several sizes (7B, 13B, 33B, and 65B parameters), it is designed to explore a diverse range of applications. It is trained on a large amount of unlabelled data and can be fine-tuned for specific tasks. However, LLaMa also poses risks, including potential biases, inappropriate content, and hallucinations. It is distributed under a research-focused non-commercial license, with access granted on a case-by-case basis.

- In February, Meta released the LLaMA model to let researchers test new approaches.

- With 7 billion parameters, the LLaMa model has demonstrated ultra-fast inference speeds on a MacBook equipped with the M2 Max chip. This is credited to Gerganov's implementation of model inference with Metal on the GPU built into Apple's latest processors. The run shows zero CPU usage while using all 38 GPU cores. On-device inference of this kind points toward personalized AI assistance that runs locally on individual devices and supports everyday tasks.

