Meta AI has introduced a novel algorithm that empowers robots to acquire skills through the observation of YouTube content.

In Brief

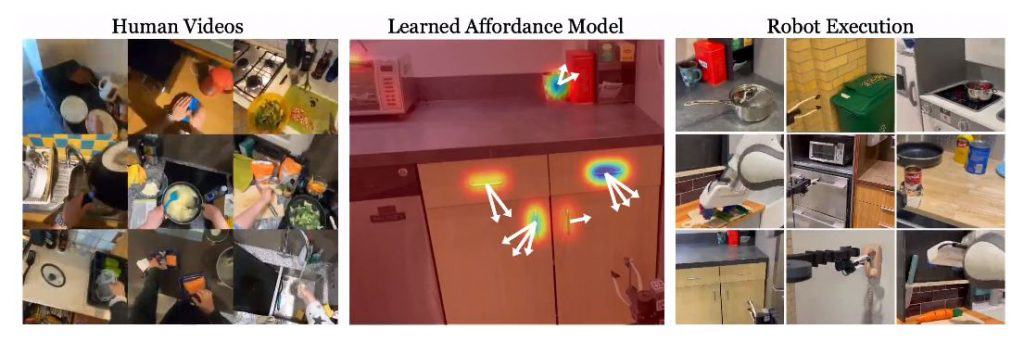

Researchers have developed a visual affordance model that trains robots on internet videos of human actions so that they can tackle complex tasks.

The method connects static datasets to practical robotics applications, opening the way to more capable robot learning.

To extract useful affordances, the researchers draw on large human video datasets such as Ego4D and Epic Kitchens, combining computer vision with robot actions.

The Vision-Robotics Bridge (VRB) shows how robots can learn useful skills by observing human activity in videos, improving their ability to carry out intricate tasks.

In a recent study titled 'Affordances from Human Videos as a Versatile Representation for Robotics,' the authors argue that videos of human interaction can be a key training signal for robots. The research aims to bridge the gap between static datasets and real-world robot behavior. Existing models have shown promise on static data, but transferring that knowledge to robotic applications has proved difficult. The team proposes a visual affordance model that uses internet videos of human behavior to address this problem, helping robots learn where and how to interact.

At the core of this approach is the idea of 'affordances': the potential interactions that a given object or environment offers. By extracting these affordances from human videos, robots gain a versatile representation that can support a range of complex tasks. The researchers integrate their affordance model with four robot learning paradigms: offline imitation learning, exploration, goal-conditioned learning, and action parameterization for reinforcement learning.

The study titled 'Affordances from Human Videos as a Versatile Representation for Robotics' was presented at CVPR 2023.


The model takes a human-agnostic frame as input and produces two outputs: a contact heatmap that shows likely contact points, and wrist waypoints that forecast the motion following contact. At inference time, these outputs can be combined with sparse 3D information from the robot, such as depth and kinematic details.
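The two outputs described above can be illustrated with a minimal sketch. It is not the authors' implementation: it assumes the heatmap is a 2D grid of scores, that waypoints are given as pixel coordinates, and that depth is lifted to 3D with a standard pinhole camera model. The function names and the intrinsics `fx`, `fy`, `cx`, `cy` are hypothetical.

```python
from typing import List, Sequence, Tuple

Pixel = Tuple[int, int]                 # (row, col) in image coordinates
Point3D = Tuple[float, float, float]    # (x, y, z) in the camera frame, meters


def top_contact_pixel(heatmap: Sequence[Sequence[float]]) -> Pixel:
    """Return the (row, col) of the highest-scoring cell in the contact heatmap."""
    best, best_score = (0, 0), heatmap[0][0]
    for r, row in enumerate(heatmap):
        for c, score in enumerate(row):
            if score > best_score:
                best, best_score = (r, c), score
    return best


def backproject(pixel: Pixel, depth_m: float,
                fx: float, fy: float, cx: float, cy: float) -> Point3D:
    """Lift a pixel to a 3D camera-frame point (pinhole model, assumed here)."""
    r, c = pixel
    return ((c - cx) * depth_m / fx, (r - cy) * depth_m / fy, depth_m)


def plan_from_affordance(heatmap: Sequence[Sequence[float]],
                         waypoints: Sequence[Pixel],
                         depth: Sequence[Sequence[float]],
                         fx: float, fy: float,
                         cx: float, cy: float) -> List[Point3D]:
    """Build a 3D path: the most likely contact point, then post-contact waypoints."""
    pixels = [top_contact_pixel(heatmap)] + list(waypoints)
    return [backproject(p, depth[p[0]][p[1]], fx, fy, cx, cy) for p in pixels]


if __name__ == "__main__":
    # Toy 3x3 example with made-up values, for illustration only.
    heatmap = [[0.1, 0.2, 0.1],
               [0.1, 0.3, 0.9],
               [0.0, 0.2, 0.4]]
    depth = [[2.0] * 3 for _ in range(3)]   # flat scene, 2 m from the camera
    path = plan_from_affordance(heatmap, [(0, 2)], depth,
                                fx=2.0, fy=2.0, cx=1.0, cy=1.0)
    print(path)
```

A real system would then map these camera-frame points into the robot's frame using its kinematics; that step is omitted here because the article does not describe it.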

Meta AI is dedicated to advancing computer vision and intends to release both the code and the dataset associated with the project. This should help other researchers and developers explore and build on the technology. With wider access to these materials, self-learning robots that acquire new skills from varied sources become more attainable. [...] (AGI), as advocated by AI researcher Yann LeCun.

