I-JEPA: A Groundbreaking Advancement in AI, Bringing Us Closer to Artificial General Intelligence

In Brief

I-JEPA introduces a self-supervised learning technique focused on image comprehension. It empowers the model to grasp semantic elements without depending on established invariances or intricate pixel details.

In addition, it boasts computational efficiency, making it a practical option for developers.

Yann LeCun and his team at Meta have presented a new AI architecture known as I-JEPA. This framework aspires to advance the study of artificial intelligence by interpreting abstract concepts and navigating the complexities of the world around us. Its mission is clear: to expedite learning, promote forward-thinking, and adapt to diverse environments.

The conventional methodology in AI, referred to as GenML, is seen by some as inadequate in the pursuit of genuine artificial general intelligence (AGI). With the launch of I-JEPA, Meta is taking a divergent approach, prioritizing vision as the essential pathway to AGI instead of language. has faced criticism from LeCun Unlike traditional techniques that heavily depend on manually crafted data modifications,

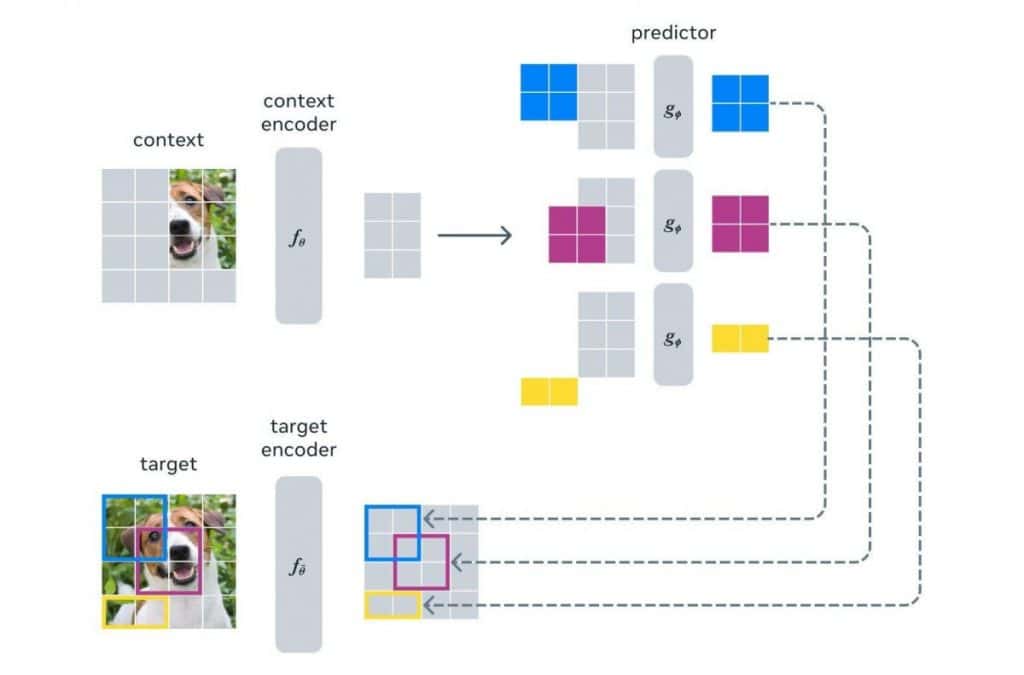

I-JEPA liberates itself from biases and restrictions. By avoiding reliance on predetermined invariances, it escapes the confines of task-specific biases. Moreover, it eliminates the necessity of pixel-level completion, leading to representations that are far more meaningful and endowed with semantic richness. A key highlight of I-JEPA is its predictive capability. Instead of using a pixel decoder, it incorporates a predictor that functions within a latent space. You could think of this predictor as a rudimentary world model, adept at capturing spatial uncertainties in a still image. It forecasts high-level details about unseen segments of the image, with a focus on semantics rather than specific pixel data.
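To make the idea of predicting in latent space rather than pixel space more concrete, here is a minimal sketch in plain Python. It is not Meta's implementation: the random linear maps `encoder` and `predictor`, the `pos_embed` table, and every dimension below are hypothetical stand-ins chosen for illustration, and the sketch reuses a single encoder for both context and targets for brevity. The only point it shows is that the loss compares predicted representations with encoded representations of the hidden patches, so no pixel decoder appears anywhere.

```python
import random

def make_linear(in_dim, out_dim, rng):
    """Random weight matrix standing in for a learned network (hypothetical)."""
    return [[rng.gauss(0.0, 0.1) for _ in range(in_dim)] for _ in range(out_dim)]

def apply_linear(weights, vec):
    """Multiply a weight matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vec)) for row in weights]

def mean_vector(vectors):
    """Element-wise mean of a list of equal-length vectors."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]

def latent_prediction_loss(patches, context_idx, target_idx,
                           encoder, predictor, pos_embed):
    """Mean squared error between predicted and encoded target latents.

    The target is the encoder's representation of each hidden patch,
    not its raw pixel values, so no pixel decoder is needed.
    """
    # Summarise the visible context patches in latent space.
    context = mean_vector([apply_linear(encoder, patches[i]) for i in context_idx])
    total, count = 0.0, 0
    for i in target_idx:
        # Predictor input: context summary plus a position cue for patch i.
        query = [c + p for c, p in zip(context, pos_embed[i])]
        predicted = apply_linear(predictor, query)
        target = apply_linear(encoder, patches[i])  # latent target, not pixels
        total += sum((a - b) ** 2 for a, b in zip(predicted, target))
        count += len(target)
    return total / count

# Illustrative sizes only (assumptions, not values from the article).
PATCH_DIM, LATENT_DIM, NUM_PATCHES = 12, 4, 16
rng = random.Random(0)
patches = [[rng.random() for _ in range(PATCH_DIM)] for _ in range(NUM_PATCHES)]
encoder = make_linear(PATCH_DIM, LATENT_DIM, rng)
predictor = make_linear(LATENT_DIM, LATENT_DIM, rng)
pos_embed = [[rng.gauss(0.0, 0.1) for _ in range(LATENT_DIM)]
             for _ in range(NUM_PATCHES)]

loss = latent_prediction_loss(patches, [0, 1, 4, 5], [10, 11, 14, 15],
                              encoder, predictor, pos_embed)
print(f"latent-space loss: {loss:.6f}")
```

In a real system the encoder and predictor would be trained networks and the loss would be minimised by gradient descent; the sketch only evaluates the objective once on random weights.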

To showcase what I-JEPA can achieve, its team trained a stochastic decoder that translates the predicted representations back into pixel space in the form of sketches. The outcomes were stunning, effectively capturing positional uncertainties and producing accurate renderings of high-level object components, like a dog’s head or the front legs of a wolf.

Beyond its prowess in semantic image interpretation, I-JEPA stands out for its computational efficiency. Unlike other methods that need multiple views of an image or complex data augmentations, I-JEPA yields strong semantic representations directly, without such demanding requirements. This positions it as a manageable and effective solution.
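One way to picture how a single image can supply both the visible input and the prediction targets, with no augmented copies, is to split its patch grid into a context region and a few hidden target blocks. The sketch below is an illustration under stated assumptions, not the released code: the helper names `sample_block` and `sample_masks`, the 14x14 grid, the block sizes, and the number of targets are all hypothetical choices.

```python
import random

def sample_block(grid, min_side, max_side, rng):
    """Return the set of (row, col) patch positions in a random rectangle."""
    h = rng.randint(min_side, max_side)
    w = rng.randint(min_side, max_side)
    top = rng.randint(0, grid - h)
    left = rng.randint(0, grid - w)
    return {(r, c) for r in range(top, top + h) for c in range(left, left + w)}

def sample_masks(grid=14, num_targets=4, rng=None):
    """Split one image's patch grid into a context set and target blocks.

    All sizes here are illustrative assumptions.
    """
    rng = rng or random.Random()
    targets = [sample_block(grid, 3, 5, rng) for _ in range(num_targets)]
    context = sample_block(grid, 10, 13, rng)
    # Hide every target patch from the context so prediction is non-trivial.
    for block in targets:
        context -= block
    return context, targets

context, targets = sample_masks(rng=random.Random(0))
hidden = set().union(*targets)
print(f"context patches: {len(context)}, target patches: {len(hidden)}")
```

Because both sets come from the same image, only one view has to be loaded and encoded per training example in this toy setup, which is the intuition behind the efficiency claim above.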

The I-JEPA initiative marks a pivotal milestone in self-supervised learning for image comprehension. Its capacity to learn semantics from a single view of the image, devoid of biases and pixel-level constraints, unveils a realm of opportunities for AI exploration and implementation. Initial strides reveal that I-JEPA is trained to grasp the overarching picture within images rather than fixating on each pixel. As the AI landscape keeps an eager eye on future developments, I-JEPA is poised to unlock more of the potential of self-supervised learning, setting the stage for transformative advancements in the sector.

Meta’s daring vision has driven them to open-source the code and checkpoints, welcoming involvement from developers and enthusiasts alike. Anticipation is mounting as the AI community looks forward to the presentation of I-JEPA at an upcoming AI conference. Could this herald a new era in AI innovation? Stay connected for updates as I-JEPA reshapes the narrative of artificial intelligence, promising to narrow the divide between current AI functionalities and the dream of AGI.

