How to Produce Image Morphing Animations with ControlNet

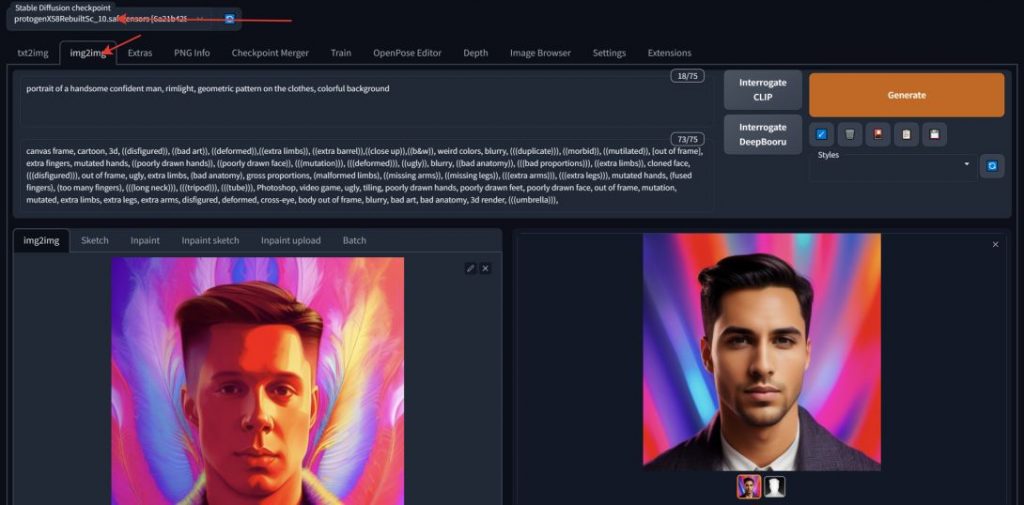

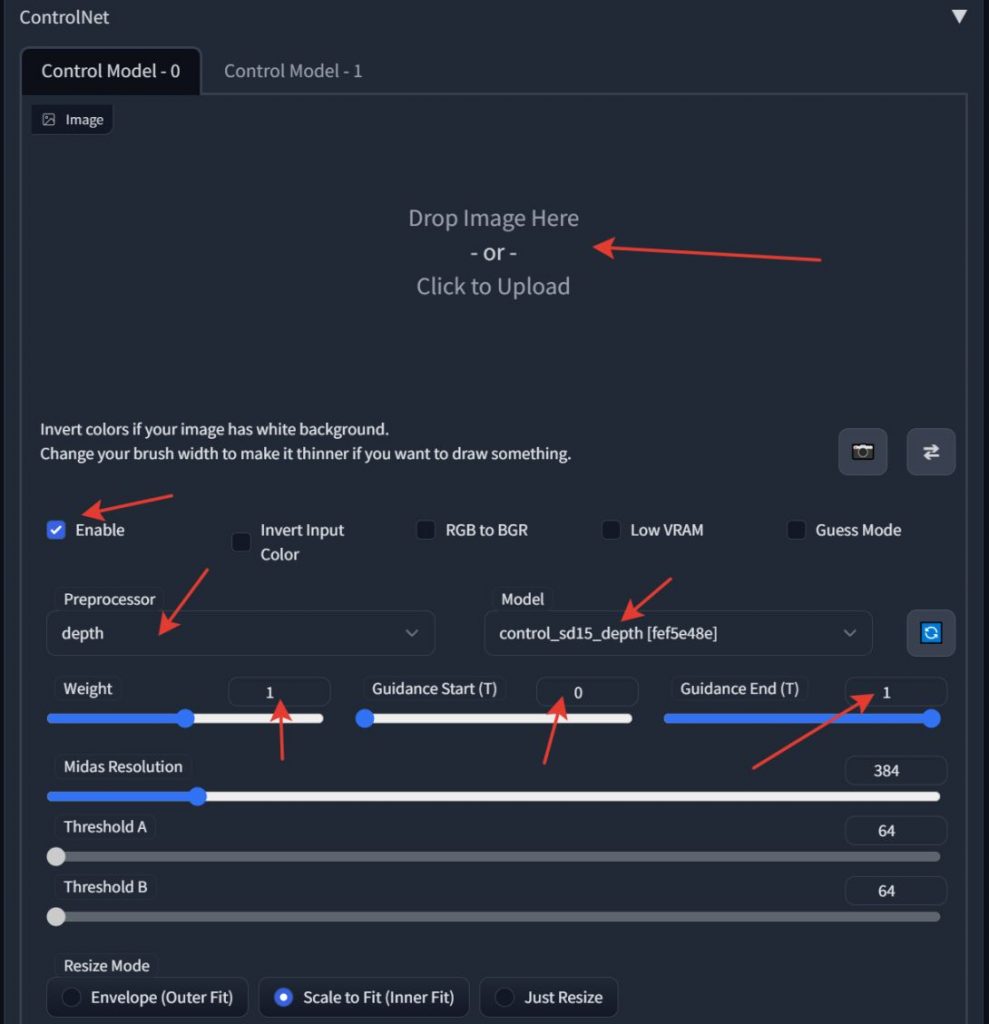

In an earlier experiment, we considered using ControlNet for morphing but ultimately chose the depth2img model, which builds images from depth maps, rather than relying on ControlNet alone. ControlNet, however, can do far more than work with depth. For more details on that earlier technique, see the previous post.

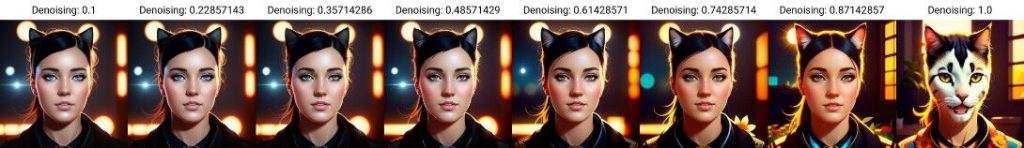

Here is a quick summary of the whole process. In img2img we have access to the denoising strength parameter, which determines how many sampling steps are actually used to generate the image. For instance, with steps set to 30 and a denoising strength of 0.5, only 15 steps are run. The same principle drives the morph animation. You are not limited to portraits; any image can be morphed this way.
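As a rough model of this relationship, the sketch below assumes that the number of steps img2img actually runs is the configured step count multiplied by the denoising strength, rounded down. That reproduces the 30 × 0.5 = 15 example above, but the exact rounding in your WebUI version may differ, and `effective_steps` is a hypothetical helper rather than part of any WebUI API.

```python
import math


def effective_steps(steps: int, denoising_strength: float) -> int:
    """Approximate how many sampling steps img2img actually runs.

    Assumption: effective steps = floor(steps * denoising_strength).
    Real WebUI versions may round or clamp slightly differently.
    """
    if not 0.0 <= denoising_strength <= 1.0:
        raise ValueError("denoising_strength must be between 0 and 1")
    return math.floor(steps * denoising_strength)


if __name__ == "__main__":
    # Show how the same 30-step setting shrinks as denoising strength drops.
    for strength in (0.25, 0.5, 0.75, 1.0):
        print(f"steps=30, denoise={strength}: {effective_steps(30, strength)} steps")
```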

Start with your base image. Enable ControlNet but leave its image input empty. Set the seed to -1 for random trials; the batch count determines how many trials you run. Feel free to experiment with the guidance parameters, the ControlNet weight, and ControlNet's control modes. When a generation appeals to you, drag it into img2img and repeat the process to see what the second image turns into. Once you have a series of images, the question is how to turn them into a seamless animation. (Tip: set the batch count to 1 at first to avoid generating extra images.)

We’re about to dive into a fascinating method now, and while I’ll touch on its limitations later, let's focus on the fun part.

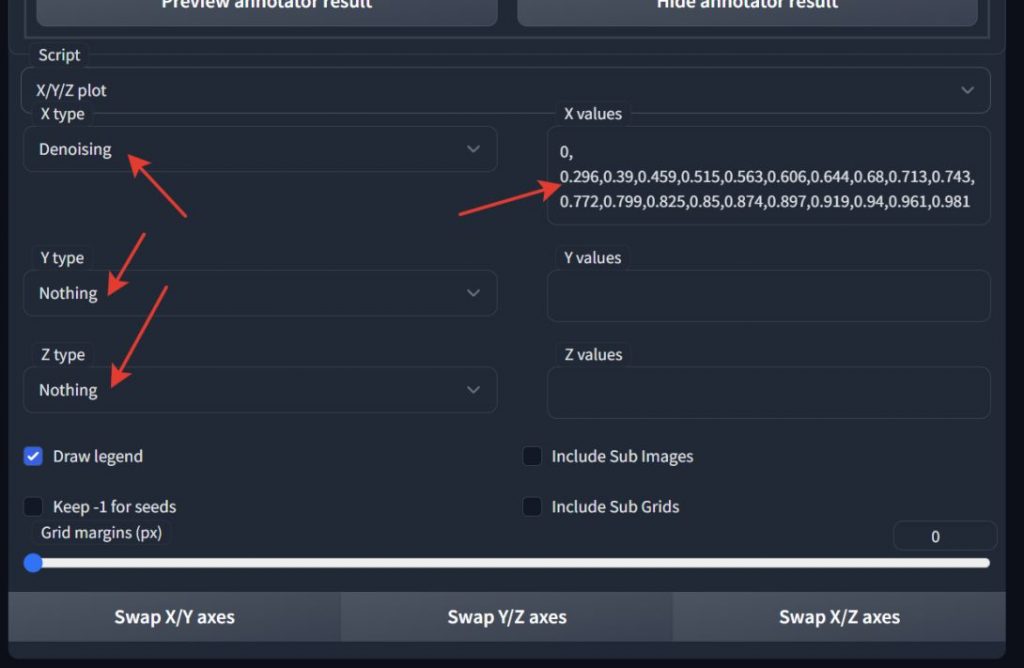

We will use the X/Y/Z plot, which you select from the Script dropdown. It lets us generate not just the final image but also the smooth transition from the starting image to it.

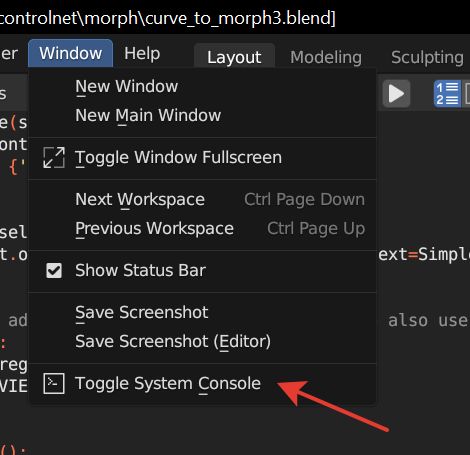

Set the X type to Denoising, then enter the following in the X values field:

0, 0.296, 0.39, 0.459, 0.515, 0.563, 0.606, 0.644, 0.68, 0.713, 0.743, 0.772, 0.799, 0.825, 0.85, 0.874, 0.897, 0.919, 0.94, 0.961

With this line in place, clicking Generate produces 20 images instead of one: one for each comma-separated value. Because each image uses a different denoising strength, together they show the gradual transformation away from the starting image.
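Since the X/Y/Z plot renders one image per value, counting the values tells you how many images a line will produce. This small sketch assumes the values are plain comma-separated numbers (it does not handle any range syntax the script may also accept), and `parse_x_values` is a hypothetical helper. With the list as given, it reports 20 values, increasing from 0 to 0.961.

```python
def parse_x_values(line: str) -> list[float]:
    """Split a comma-separated X values line into floats, ignoring blanks."""
    return [float(part) for part in line.split(",") if part.strip()]


X_VALUES = ("0, 0.296, 0.39, 0.459, 0.515, 0.563, 0.606, 0.644, 0.68, 0.713, "
            "0.743, 0.772, 0.799, 0.825, 0.85, 0.874, 0.897, 0.919, 0.94, 0.961")

values = parse_x_values(X_VALUES)
# One image is generated per value.
print(len(values), "images")
# Denoising strength should increase from one frame to the next.
print("strictly increasing:", all(a < b for a, b in zip(values, values[1:])))
```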

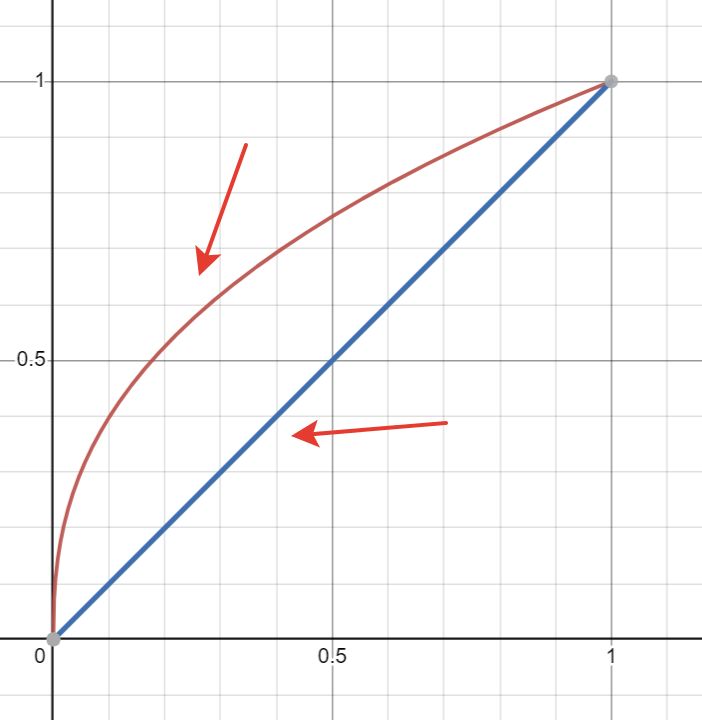

Put simply, you are asking for 20 images while varying the denoising strength between 0 and 1. The catch is that evenly spaced values give a linear transition, and the resulting morph looks unnatural: values close to zero have almost no effect on the output, and noticeable changes only begin above about 0.5.

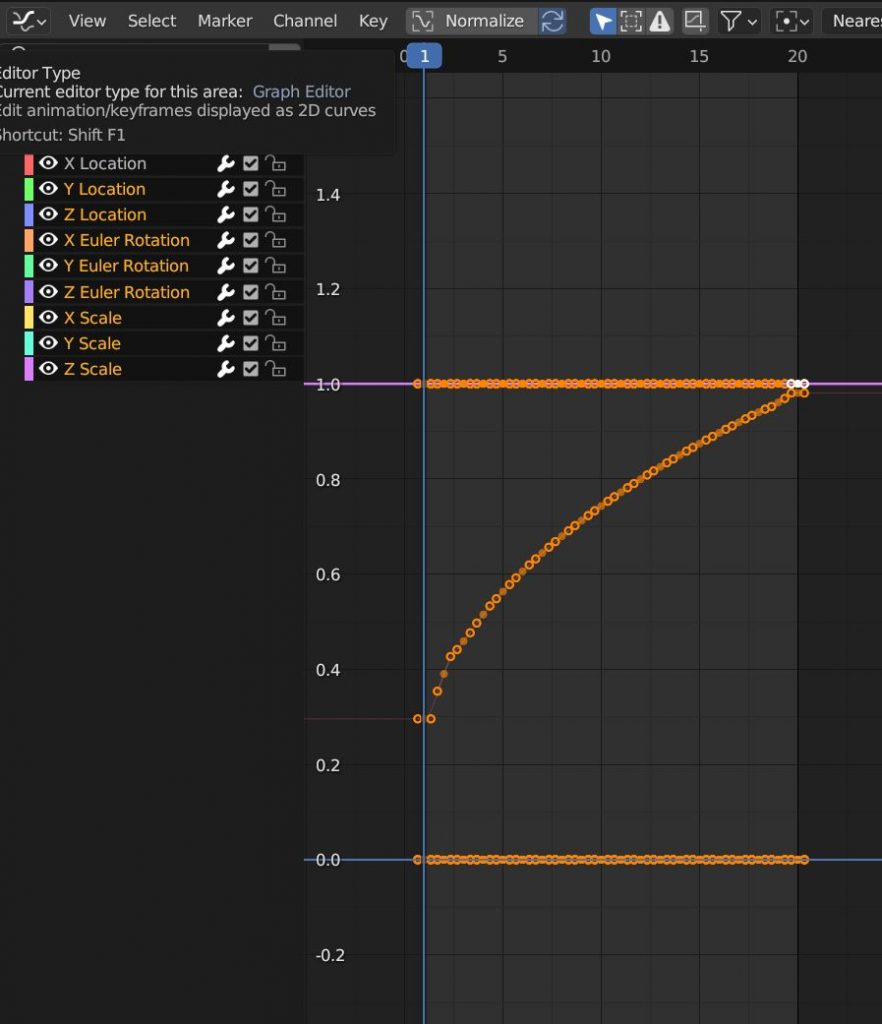

The line above therefore follows a curve, x to the power of 0.4, rather than a straight line. The plot contrasts a linear function with this curve; the curve gives a more natural result, and you can generate its values in Blender.
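To make the difference concrete, the sketch below compares evenly spaced (linear) denoising values with the x^0.4 curve over the same 20 frames, using the frame/21 mapping described in the Blender step. It counts how many frames fall below 0.5, the region where changes are barely visible. The 0.5 threshold and the helper names are illustrative assumptions.

```python
def linear_values(frames: int, divisor: int) -> list[float]:
    """Evenly spaced denoising values: frame / divisor."""
    return [f / divisor for f in range(frames)]


def curve_values(frames: int, divisor: int, exponent: float = 0.4) -> list[float]:
    """Eased denoising values: (frame / divisor) ** exponent."""
    return [(f / divisor) ** exponent for f in range(frames)]


def count_below(values: list[float], threshold: float = 0.5) -> int:
    """Count values under the threshold, where little visible change happens."""
    return sum(1 for v in values if v < threshold)


linear = linear_values(20, 21)
curve = curve_values(20, 21)
print("linear frames below 0.5:", count_below(linear))
print("curve frames below 0.5:", count_below(curve))
```

Under these assumptions, the linear spacing spends 11 of the 20 frames below 0.5, while the curve spends only 4 there and devotes the rest to the range where the image visibly changes.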

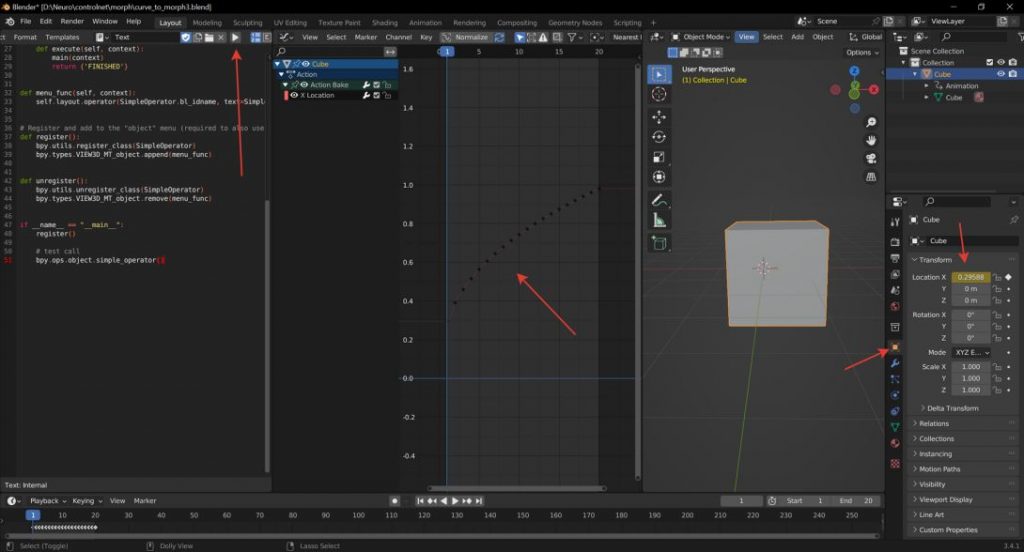

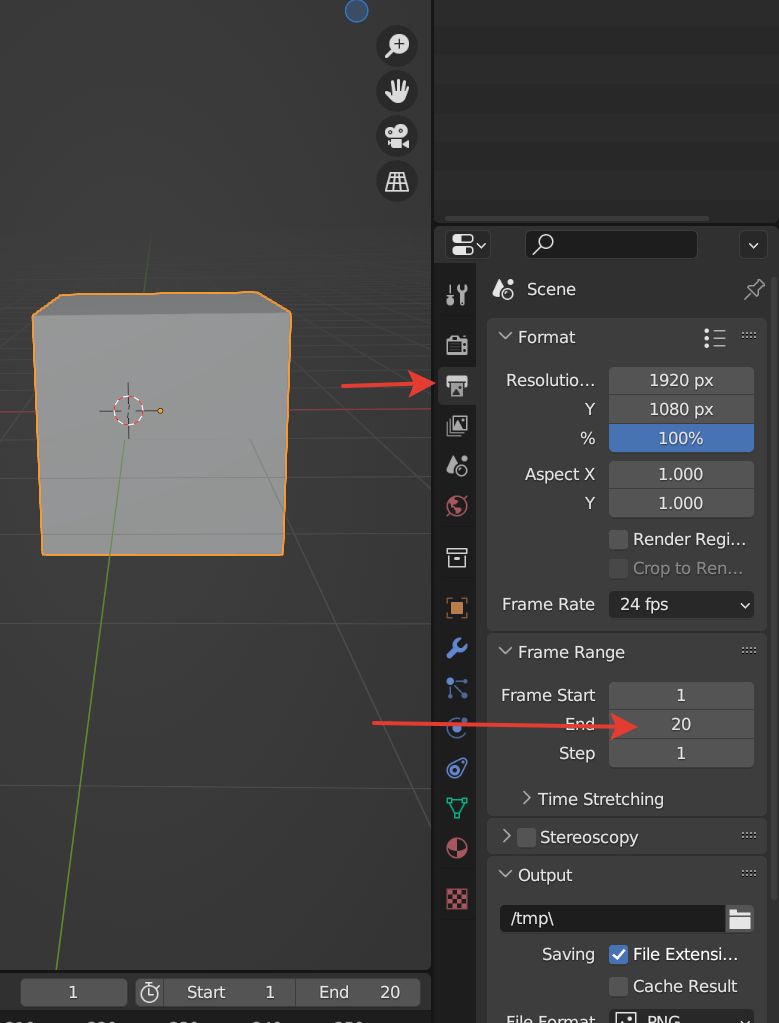

Before generating your line, set the number of frames for the transition; you can adjust this in the scene's frame range settings.

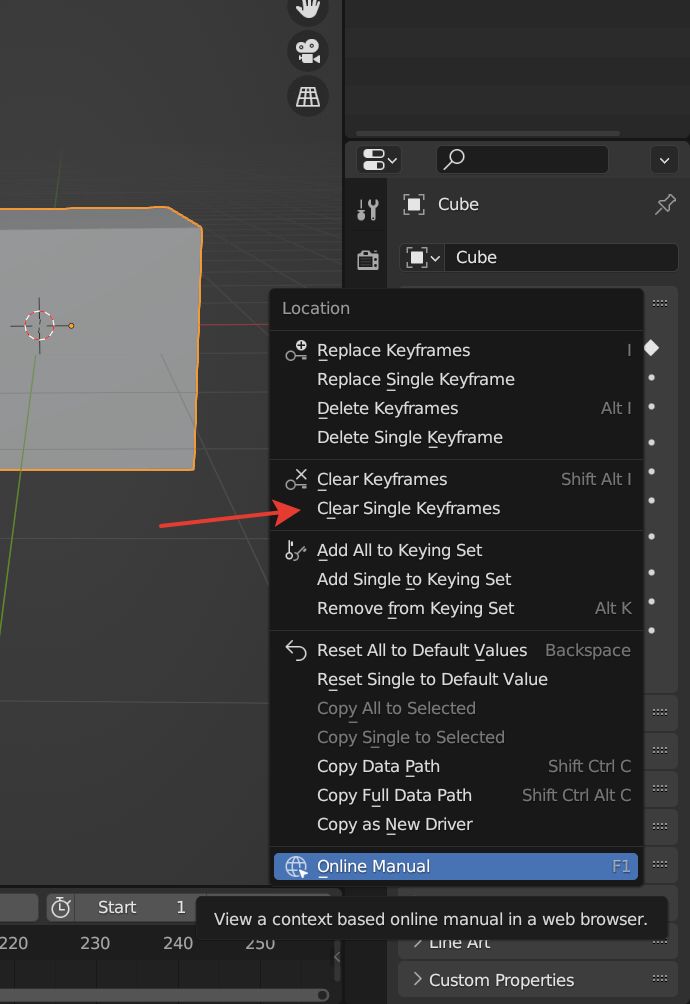

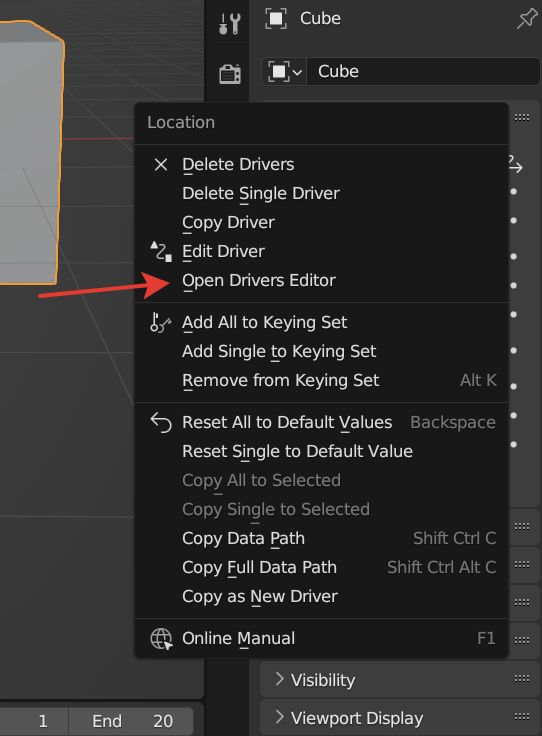

Producing the line takes a few steps. First, shape the curve: right-click the animated parameter and select 'Delete Keyframes'.

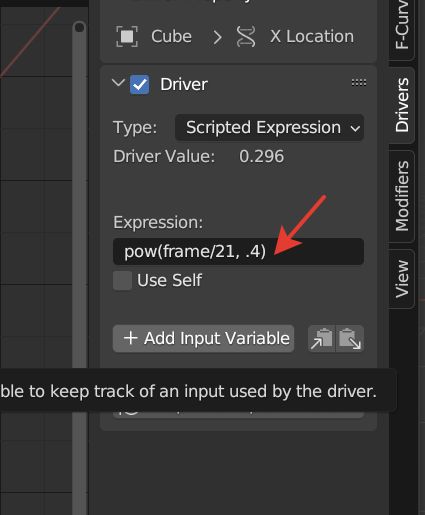

The following formula creates the curve. Once it is entered, the parameter field changes color, indicating that the driver is now active.

You can adjust the value to taste, but avoid a curve that stays too low. In the formula, frame/21 maps frames 0 to 21 onto the range 0 to 1. Since only the first 20 frames (0 to 19) are used, the values stop just short of 1.
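If you would rather skip Blender, the same line can be computed directly. This sketch assumes the driver formula is (frame/21)**0.4, combining the frame/21 mapping above with the x^0.4 curve. Evaluated for frames 0 to 19 and rounded to three decimals, it reproduces the X values line given earlier. `x_values_line` and its parameters are hypothetical; change `exponent` or `frames` to reshape the curve.

```python
def x_values_line(frames: int = 20, divisor: int = 21, exponent: float = 0.4,
                  decimals: int = 3) -> str:
    """Build a comma-separated X values line for the X/Y/Z plot.

    Assumption: the per-frame value is (frame / divisor) ** exponent,
    evaluated for frames 0 .. frames - 1 and rounded to `decimals` places.
    """
    values = ((f / divisor) ** exponent for f in range(frames))
    # The :g format drops trailing zeros, e.g. 0.390 -> 0.39 and 0.0 -> 0.
    return ", ".join(f"{round(v, decimals):g}" for v in values)


print(x_values_line())
```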

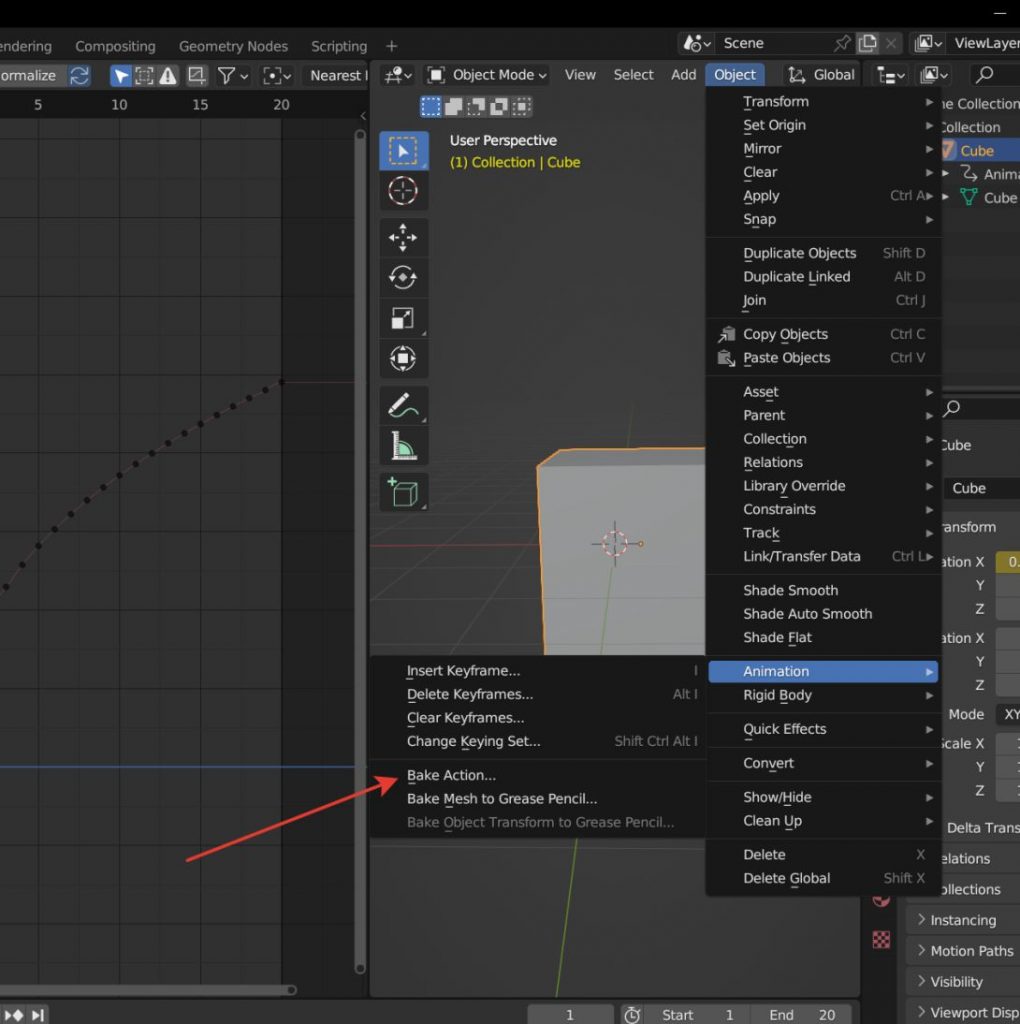

At this point the values come from the driver rather than from keyframes, so they need to be baked first: in the 3D Viewport, go to Object > Animation > Bake Action, then click OK.

Keep in mind that baking creates keys on every channel of the object, so the unneeded ones must be cleared out: select every channel except the first one and press X (with the mouse pointer over the channel list).

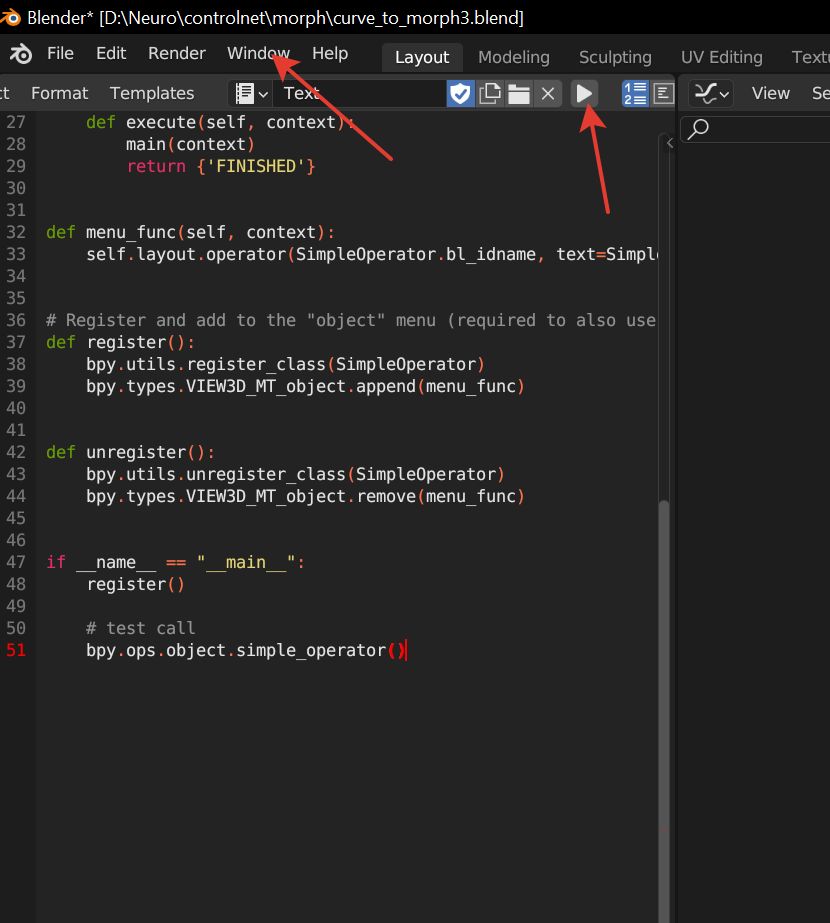

Now press play and open the console window to get the line, shown on the left of the screen.

The console now contains a string you can copy.

To create the full animation, repeat this process for each pair of consecutive images you generated at the start, then join the resulting sequences in video editing software.
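Before importing everything into a video editor, it can help to give the frames from every transition one continuous, numbered sequence. The sketch below only plans the renames: the folder names, the `frame_0000.png` output pattern, and the helper name are hypothetical, and you would copy or move the files yourself (for example with `shutil.copy`).

```python
def plan_sequence(segments: list[list[str]],
                  pattern: str = "frame_{:04d}.png") -> list[tuple[str, str]]:
    """Map each transition's frames, in order, onto one numbered sequence.

    `segments` holds one list of image paths per transition (image 1 -> 2,
    image 2 -> 3, ...), each already sorted from lowest to highest denoise.
    """
    plan = []
    for segment in segments:
        for src in segment:
            # The next output index is simply how many frames are planned so far.
            plan.append((src, pattern.format(len(plan))))
    return plan


if __name__ == "__main__":
    # Hypothetical folders, one per transition.
    segments = [
        ["morph_1_to_2/00.png", "morph_1_to_2/01.png"],
        ["morph_2_to_3/00.png", "morph_2_to_3/01.png"],
    ]
    for src, dst in plan_sequence(segments):
        print(src, "->", dst)
```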

The process is not fully automated yet, but you can customize every part of it: change the generation model, switch the ControlNet model and mode, or enable or disable preprocessing. What matters is keeping the results you like and repeating the steps precisely for each transition.

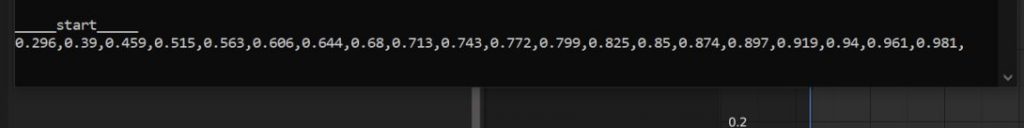

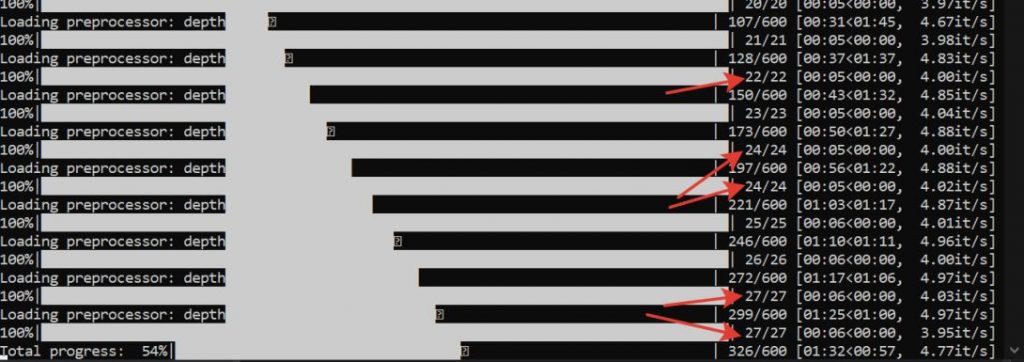

A limitation of this technique: avoid stretching the transitions over too many frames. As discussed earlier, the denoising strength determines the number of steps, so several denoising values can fall within the same step count, and the output may then contain near-duplicate images. You can monitor the step count in the AUTOMATIC1111 console.
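To see in advance which frames are likely to repeat, you can apply the same rough model as before (effective steps = floor(steps × denoising strength), an assumption that may not match your WebUI version exactly) to each value in the line and group the values that share a step count. The helper names are hypothetical.

```python
import math
from collections import defaultdict


def effective_steps(steps: int, denoising_strength: float) -> int:
    """Rough model (assumption): floor(steps * denoising_strength)."""
    return math.floor(steps * denoising_strength)


def colliding_values(values: list[float], steps: int) -> dict[int, list[float]]:
    """Group denoising values that map to the same effective step count."""
    groups: dict[int, list[float]] = defaultdict(list)
    for value in values:
        groups[effective_steps(steps, value)].append(value)
    # Only step counts shared by two or more values risk near-duplicate frames.
    return {count: vals for count, vals in groups.items() if len(vals) > 1}


X_VALUES = [0, 0.296, 0.39, 0.459, 0.515, 0.563, 0.606, 0.644, 0.68, 0.713,
            0.743, 0.772, 0.799, 0.825, 0.85, 0.874, 0.897, 0.919, 0.94, 0.961]

for count, vals in colliding_values(X_VALUES, steps=30).items():
    print(f"{count} steps: {vals}")
```

With 30 steps, this model flags three pairs (0.772 and 0.799, 0.874 and 0.897, 0.94 and 0.961). Under the same model, raising the step count to 60 separates all of them, at the cost of longer generation times.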

The technique can also be paired with frame interpolators, which fill in the missing frames between the generated images for smoother transitions.


Disclaimer

In line with the Trust Project guidelines, please be aware that the information on this webpage is not intended as, nor should it be construed as, legal, tax, investment, financial advice, or any other kind of guidance. It’s crucial to only invest funds that you can afford to lose, and if you're in doubt, always seek independent financial counsel. For more details, we recommend reviewing the terms and conditions as well as the help and support resources made available by the issuer or advertiser. MetaversePost is dedicated to providing accurate and impartial reporting; however, market conditions may alter without prior notice.