GPT-4 vs. GPT-3: What Innovations Are Found in the Latest Model?

In Brief

OpenAI has officially introduced GPT-4, which boasts a larger parameter set and is designed to tackle a wide range of natural language processing tasks.

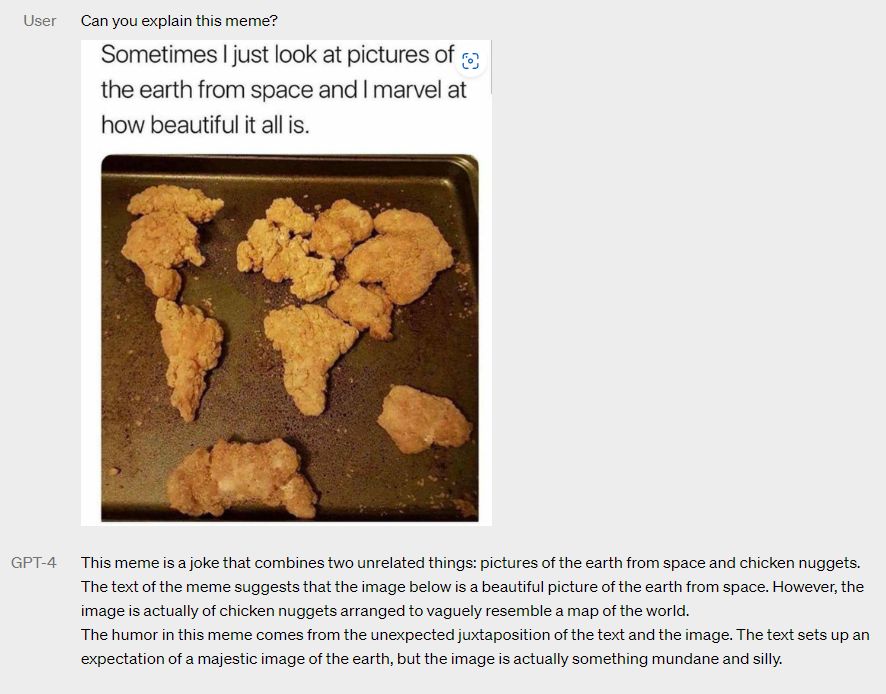

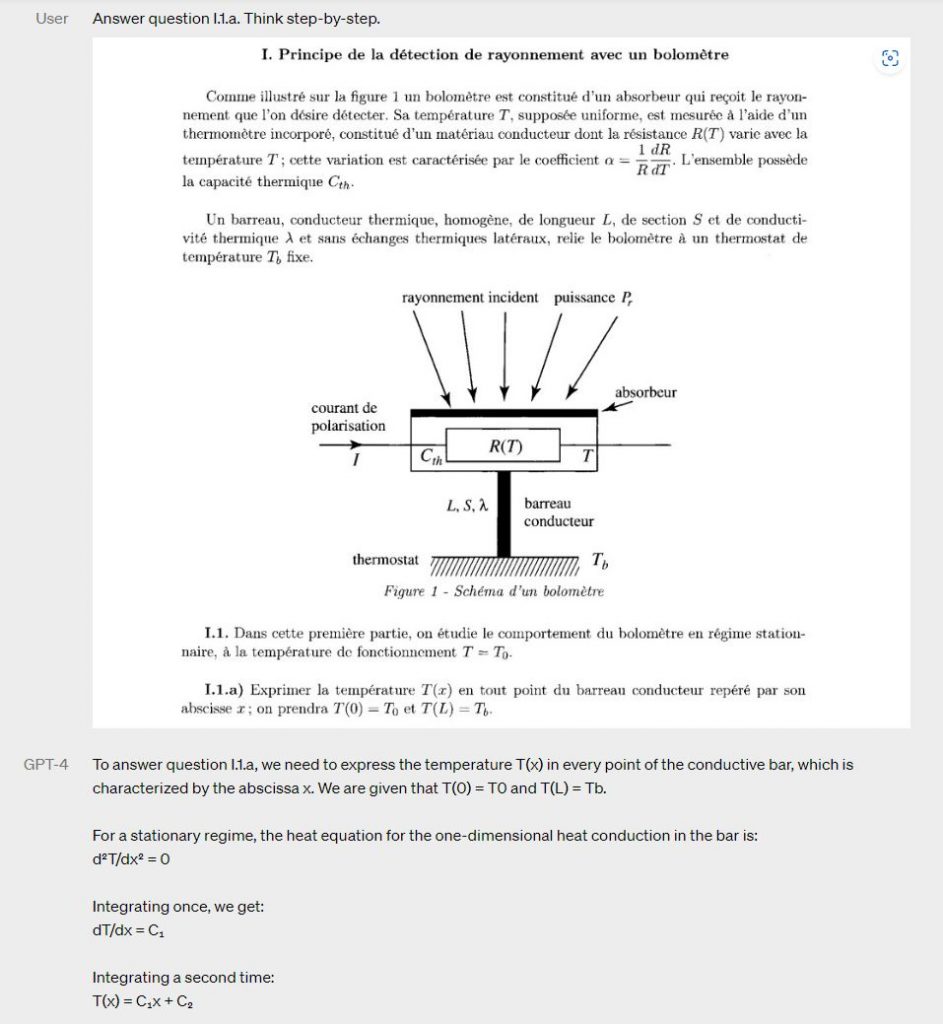

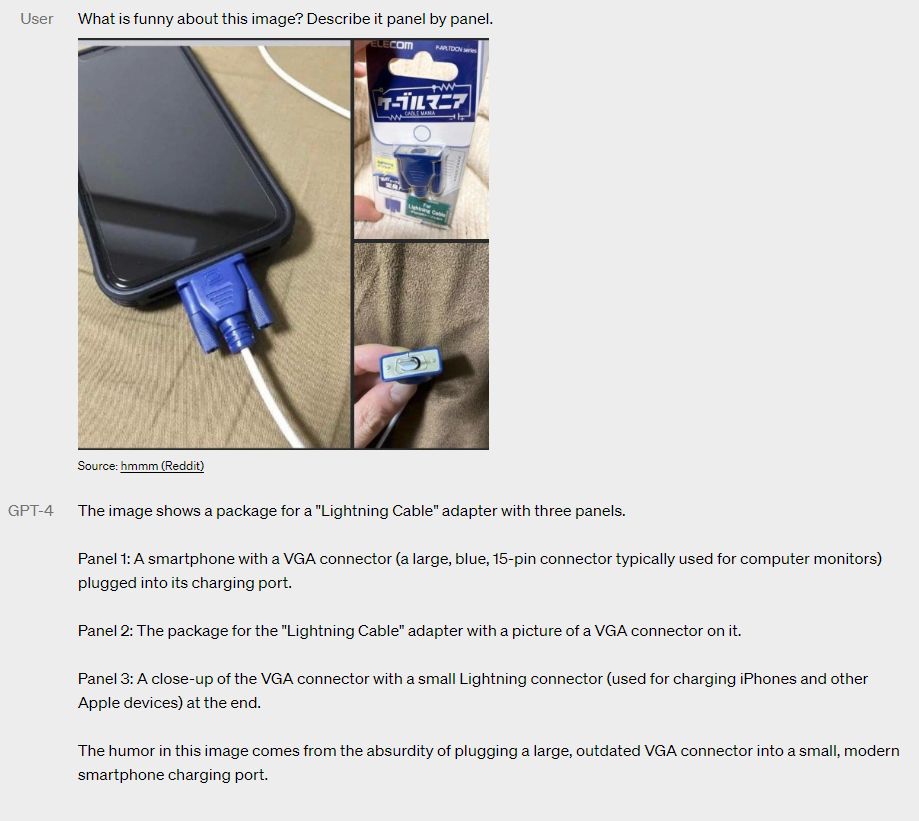

The latest model, GPT-4, is capable of understanding both textual and visual data, positioning it as a powerful ally in tasks like image captioning, answering visual questions, and generating content.

With an impressive memory capacity of up to 64,000 words, GPT-4 enables the generation of more coherent and contextually appropriate responses.

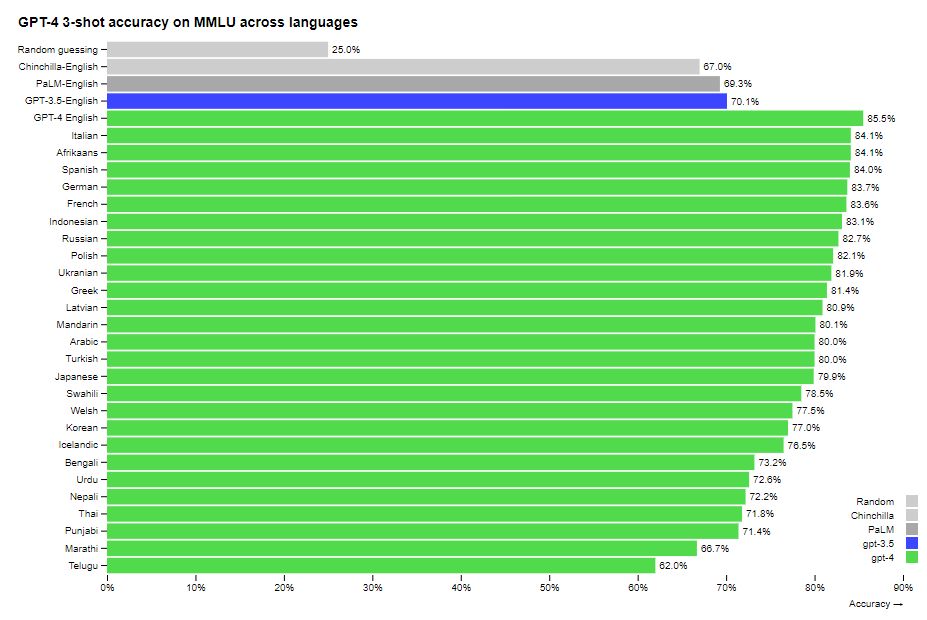

Now proficient in 26 languages, GPT-4 outperforms GPT-3.5 and other large language models in 24 of the languages assessed, and it does particularly well in less widely spoken languages.

This advancement in natural language processing is set to significantly improve communication across different languages and enhance accessibility.

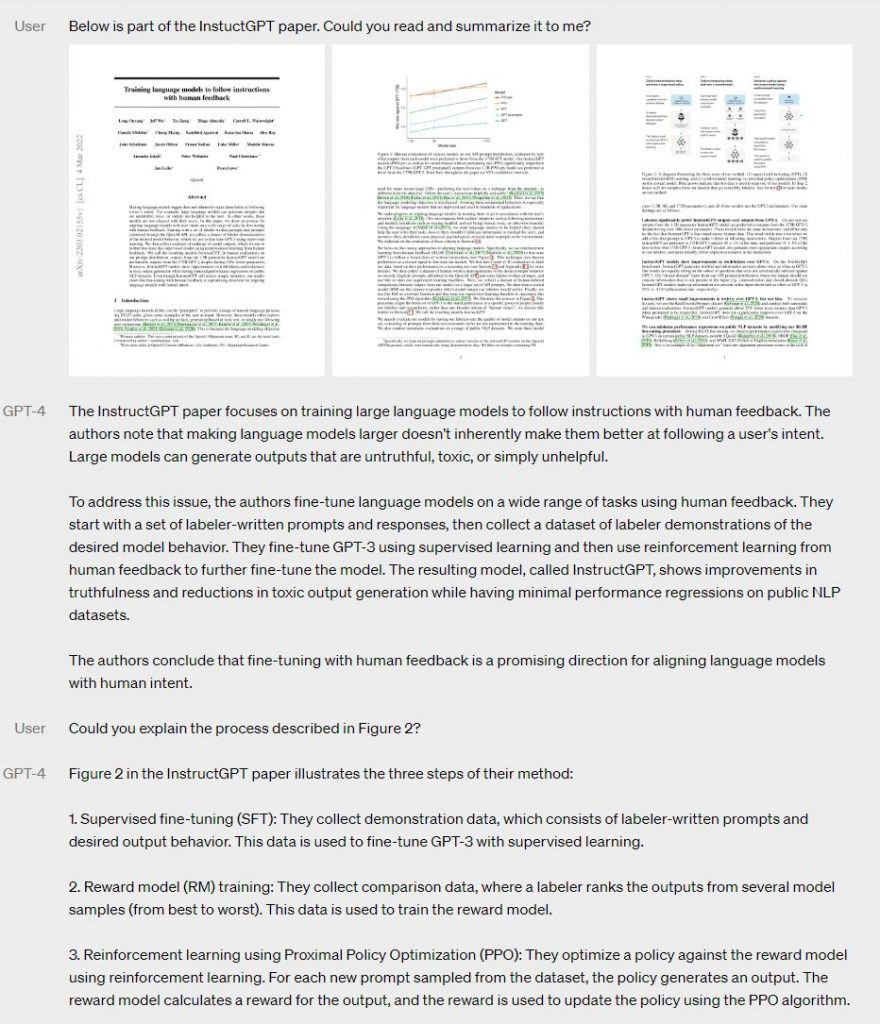

As Microsoft had promised, OpenAI officially presented its next-generation language model, GPT-4, on March 14. The model is said to have a dramatically larger number of parameters, which could lead to exceptional advances in language generation. GPT-4 is also expected to excel in natural language applications such as chat interactions and text generation.

GPT-4 understands not only text but also images. Currently, image input is only in the research preview stage and isn't available to the public yet.

GPT-4 is designed to respond to inquiries in 26 different languages. It showcases superior performance over GPT-3.5 and other models like Chinchilla and PaLM in 24 of these languages, including those that are less commonly spoken, such as Latvian, Welsh, and Swahili. This progress in natural language processing is pivotal for promoting multilingual dialogue and wider accessibility, bridging communication gaps in various areas, including education, healthcare, and commerce.

With its improved proficiency in less widely used languages, GPT-4 creates chances for broader access to information and services in native languages. This trend contributes to a more inclusive and diverse digital environment, encouraging cultural exchange and better understanding across communities.

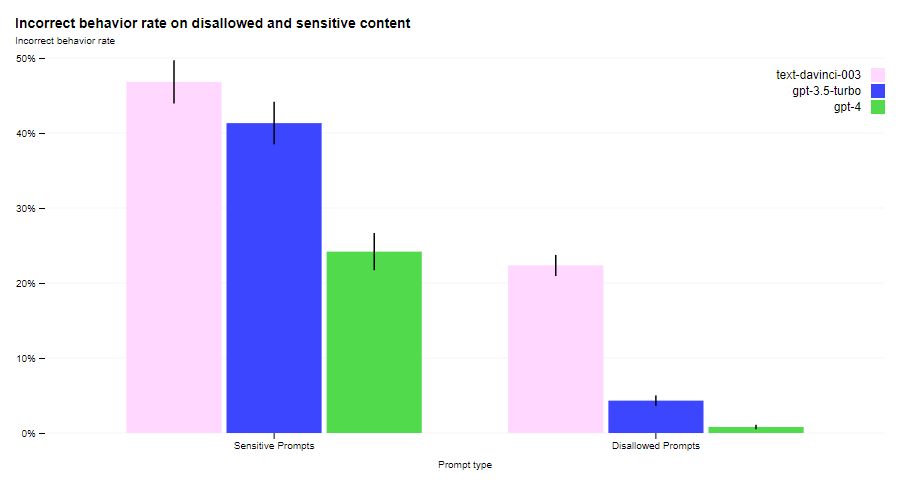

Compared to GPT-3.5, several of GPT-4's safety mechanisms are significantly more robust thanks to developer interventions. In fact, the model's likelihood of yielding forbidden content has been reduced by 82% compared to GPT-3.5, while improper responses to sensitive queries—such as those involving medical suggestions or self-harm—have diminished by 29%.

GPT-4 could prove to be a transformative factor in sectors such as education, research, and customer support. Nevertheless, additional testing and refinement are necessary before it can be seamlessly integrated into these domains. Several major firms, including Stripe, are presently experimenting with GPT-4. OpenAI has also confirmed that the updated version is being used in Microsoft's revamped Bing search service.

Details on GPT-4's technical features are scarce: OpenAI has opted not to divulge specifics, to avoid giving competitors unnecessary insights. GPT-4 is not without its limitations: its knowledge base extends only up to September 2021, and it can occasionally produce inaccuracies or fictional data. Currently, access to the model through ChatGPT is granted exclusively to subscribers of the premium ChatGPT Plus service, and no free version has been announced yet.

The core distinctions between GPT-4 and GPT-3

- GPT-4 makes fewer factual and other errors than its predecessor, as confirmed by various assessments. Notably, it scored in the top 10% of test-takers on a simulated U.S. bar exam, whereas GPT-3.5 landed in the bottom 10%.

- The new model can compose and refine texts, including songs and plays, at a level of creativity that approaches human ability. It can also imitate a specific author's style or adopt a different one at the user's request.

- The model can also interpret images, engaging with them in practical ways, such as proposing recipes based on ingredients visible in a photo. Another significant application is its potential to narrate surroundings for individuals with visual impairments. OpenAI has already begun collaborating with the 'Be My Eyes' organization to support this initiative.

- GPT-4 can handle over 25,000 words in a single instance (as opposed to the previous limit of 8,000 words), facilitating the creation and manipulation of extensive texts more effectively.

- The model's multilingual capabilities have significantly broadened. While it still performs optimally in English, it can generate text with a high degree of accuracy in an additional 25 languages, including Italian, Ukrainian, and Korean.

- GPT-4 is offered as part of the paid ChatGPT Plus subscription and is available via API, as the company actively seeks to monetize its innovations. It has already been adopted by organizations like Duolingo, Stripe, Morgan Stanley, Khan Academy, and even the government of Iceland. Recently, leading U.S. software firm Salesforce has also integrated the model into its operations.

- The Journey of Chatbots from T9 Era to GPT-1 and ChatGPT

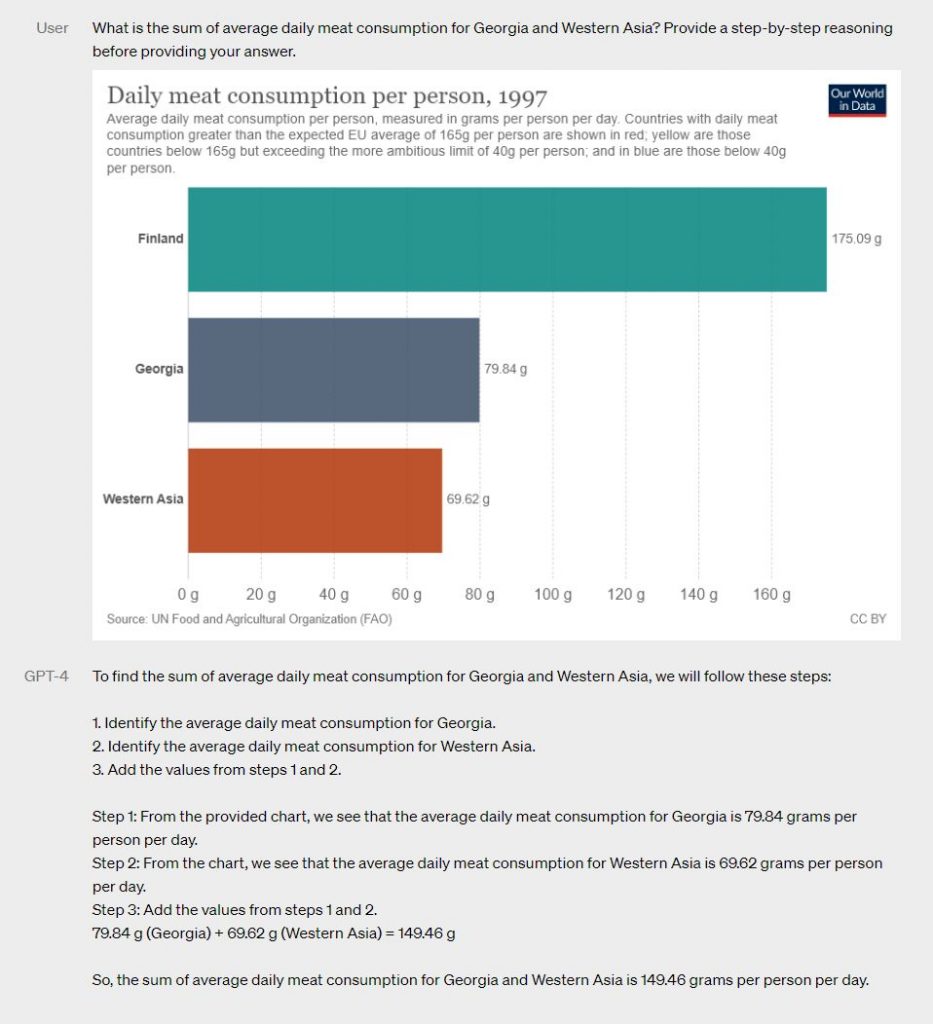

- GPT-4 Exceeds GPT-3.5 in Numerous Study Metrics

Read more about GPT models:
