Did GPT-4 really ace the MIT exam questions with a perfect score? Research indicates otherwise, according to findings from the Metaverse Post.

In Brief

The findings from MIT's investigation revealed that GPT-4's claim of achieving perfect accuracy was somewhat inflated, largely due to its reliance on self-evaluation and the repetition of certain queries.

While GPT-4 demonstrated a commendable success rate, this figure can be misleading, as there were significant gaps regarding the timeline and specifics of the questions it faced.

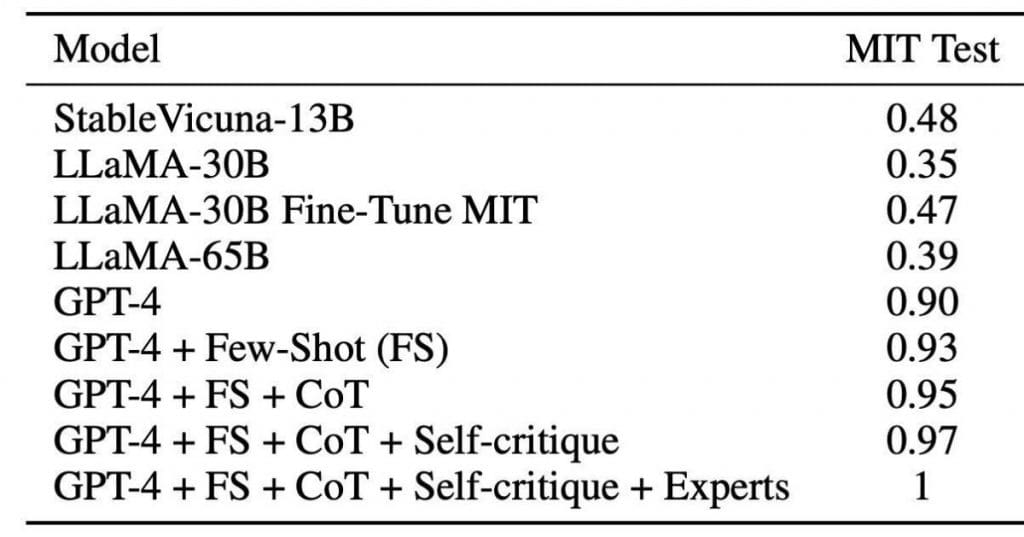

MIT researchers conducted the experiment to assess GPT-4's abilities: they wanted to know whether it could graduate from their prestigious program by passing its exams. The results were impressive, showing GPT-4's proficiency across diverse subjects such as engineering, law, and history.

They selected 30 courses spanning topics from basic algebra to complex topology. In total, they presented 1,679 tasks, which equated to around 4,550 distinct questions. Approximately 10% of these questions were used for evaluation, while the remaining 90% served as contextual information. These remaining questions were used either to build a database or to train the model to find the questions most closely related to each test prompt.

The researchers employed several techniques to assist GPT-4 in delivering accurate answers. These included:

- Chain of Reasoning: This technique involved prompting the model to articulate its thoughts step by step within the response.

- Coding Method: Instead of simply presenting the answer, GPT-4 was instructed to generate code that would produce the correct output.

- Critique Prompt: After GPT-4 offered an answer, a new prompt (up to three distinct ones) was introduced to critique that answer. This aimed to pinpoint mistakes and coach the model toward the right response, allowing for multiple revisions.

- Expert Prompting: A vital tactic involved incorporating a specific phrase at the beginning of the prompt to encourage GPT-4 to emulate a certain expert's thought process. For instance, phrases like 'Imagine you are an MIT Professor of Computer Science and Mathematics teaching Calculus' were crafted to guide the model toward reasonable guesses about which experts might excel in answering the questions.

The researchers then chained these methods into sequences, often combining two or more prompts. The generated responses were graded with an unusual evaluation method: GPT-4 was given the task, the correct answer, and its own answer, and was asked to determine whether its response was accurate.
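The chaining and self-grading described above can be sketched roughly as follows. This is a minimal illustration, not the study's code: `build_prompts`, `solve_with_chain`, the step-by-step wording, and the stub model and grader are all hypothetical, and the sketch assumes the chain stops at the first answer the grader accepts. Only the expert phrase is quoted from the article.

```python
from typing import Callable, Optional

# The expert phrase is quoted in the article; the step-by-step wording is an assumption.
EXPERT_PREFIX = ("Imagine you are an MIT Professor of Computer Science "
                 "and Mathematics teaching Calculus.")
STEP_BY_STEP = "Let's think step by step."


def build_prompts(question: str) -> list[str]:
    """Return a sequence of increasingly assisted prompts for one question."""
    return [
        question,                                        # plain question, no help
        f"{EXPERT_PREFIX}\n{question}",                  # expert prompting
        f"{EXPERT_PREFIX}\n{question}\n{STEP_BY_STEP}",  # expert + chain of reasoning
    ]


def solve_with_chain(
    question: str,
    reference: str,
    model: Callable[[str], str],
    grader: Callable[[str, str, str], bool],
) -> tuple[Optional[str], int]:
    """Try each prompt in order and stop at the first answer the grader accepts.

    Note that the grader sees the reference answer, so the loop only ends
    early when the answer already matches the ground truth.
    """
    attempts = 0
    for prompt in build_prompts(question):
        attempts += 1
        answer = model(prompt)
        if grader(question, reference, answer):
            return answer, attempts
    return None, attempts


# Stub model and grader, used only to make the sketch runnable.
def fake_model(prompt: str) -> str:
    return "4" if STEP_BY_STEP in prompt else "5"


def exact_match_grader(question: str, reference: str, answer: str) -> bool:
    return answer.strip() == reference.strip()


answer, attempts = solve_with_chain("2+2=?", "4", fake_model, exact_match_grader)
print(answer, attempts)
```

Under these assumptions, a question counts as solved if any prompt in the chain eventually produces the reference answer, which is a more generous criterion than a single unassisted attempt.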

GPT-4 recorded an impressive 90% accuracy rate on the held-out 10% of questions without the help of any additional methods. When using the strategies above, it achieved a perfect 100%, answering all questions correctly. In effect, GPT-4 appeared capable of handling every challenge, the equivalent of earning an MIT diploma.

This research represents a notable stride in showcasing the transformative potential of advanced AI language models like GPT-4.

A Closer Look at the Hype Surrounding GPT-4: Investigating the Model’s Performance

Amid the excitement surrounding GPT-4's remarkable achievements, a team of MIT researchers delved deeper into the claims and raised important questions about the reliability of the results. Their objective was to determine whether the assertion that GPT-4 achieved a flawless 100% accuracy in answering the exam questions held true.

Upon careful examination, however, it became clear that the claim of GPT-4's flawless performance was somewhat overstated. Several key points surfaced that cast doubt on the credibility of the findings.

First, concerns were raised about the evaluation methodology itself. According to the original article, the model was given a task along with the correct answer and then assessed its own output. This self-evaluation calls the impartiality of the assessment into question, since there was no external validation. It is crucial to establish whether the model can judge solutions accurately, and the study did not sufficiently address this.

Furthermore, a more in-depth analysis revealed peculiarities. Certain questions were repeated, and when the model was directed to find similar questions, it often provided correct answers. This tactic significantly enhanced its performance but raised doubts about the integrity of the evaluation. For instance, if the model is asked to solve '2+2=?' after already being told that '3+4=7' and '2+2=4', it is hardly surprising that it produces the right answer.
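A simple way to check for this kind of leakage is to look for test questions that also appear in the pool of context examples. The sketch below is illustrative only: `normalize` and `find_leaked` are hypothetical helpers, the data is a toy example built from the arithmetic case above, and it only catches exact repeats (ignoring case and whitespace), not paraphrases.

```python
import re


def normalize(text: str) -> str:
    """Lowercase and drop all whitespace so trivially different copies match."""
    return re.sub(r"\s+", "", text.lower())


def find_leaked(test_questions: list[str],
                context_pool: list[tuple[str, str]]) -> list[str]:
    """Return test questions whose normalized text also appears in the context pool.

    `context_pool` holds (question, answer) pairs that may be shown to the
    model as similar examples.
    """
    pool = {normalize(question) for question, _answer in context_pool}
    return [q for q in test_questions if normalize(q) in pool]


# Toy data mirroring the example in the text.
context_pool = [("3+4=?", "7"), ("2 + 2 = ?", "4")]
test_questions = ["2+2=?", "5+5=?"]

print(find_leaked(test_questions, context_pool))
```

Any question flagged this way would need to be removed from either the test set or the context pool before the accuracy figure could be trusted.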

Around 4% of the questions posed challenges to the language model, as they incorporated diagrams and graphs. Due to its reliance on text, the model struggled to deliver accurate responses to these inquiries. Unless the answers were explicitly provided within the prompt, the model had difficulty tackling these effectively.

It turned out that some of the supposed questions weren't really questions at all. They appeared to be introductory statements or fragments of tasks, which means irrelevant material was included in the question set during the evaluation.

A crucial point the original study failed to address was when or how the questions were presented. It remains uncertain whether GPT-4 had previously encountered these tasks online or through other means before the study began. Even without referencing similar questions, the model achieved a 90% accuracy rate, raising concerns about possible external influences.

These findings were uncovered after only a few hours of scrutinizing a small sample of the published questions, prompting speculation about what further inconsistencies might have emerged had the researchers released the full set of questions and answers, as well as details about the model’s generation process.

The claim that GPT-4 achieved a perfect 100% accuracy seems misleading. The original study should be interpreted with caution and should not be regarded as a definitive conclusion.

Exploring Positional Bias and Assessing Model Rankings

Comparing different models, such as Vicuna, Koala, and Dolly, has become increasingly popular, especially since the arrival of GPT-4, as the earlier example shows. However, it is crucial to understand the complexities involved in these comparisons in order to evaluate models accurately.

One comparison method uses a single prompt that presents the responses of Model A and Model B to the same question. The evaluator is instructed to score the pair on a scale of 1 to 8, where 1 means Model A is significantly better and 8 means Model B is significantly better. Scores of 4-5 indicate a tie, while scores of 2-3 and 6-7 indicate that one model is better to a lesser degree.

But what if we instructed the model to acknowledge positional bias, that is, its tendency to favor a response because of the slot it occupies rather than its content, and to refrain from skewing its scores accordingly? This strategy is only partially successful: the distribution on the graph shifts in the opposite direction, though to a lesser extent.
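One way to probe an evaluator for positional bias on this 1-to-8 scale is to score each pair twice, once in the original order and once with the two responses swapped. A position-independent judge should return the mirrored score, 9 minus the original, on the second pass. The sketch below is a hypothetical illustration of that check; the function names are assumptions, and only the scale itself comes from the text.

```python
def verdict(score: int) -> str:
    """Map a 1-8 score to a verdict: 1-3 favors slot A, 4-5 is a tie, 6-8 favors slot B."""
    if not 1 <= score <= 8:
        raise ValueError("score must be between 1 and 8")
    if score <= 3:
        return "A"
    if score >= 6:
        return "B"
    return "tie"


def mirrored(score: int) -> int:
    """Score a position-independent judge would give after swapping the two slots."""
    return 9 - score


def position_consistent(score_original: int, score_swapped: int) -> bool:
    """True if both passes agree on the winner once the swap is undone."""
    return verdict(score_original) == verdict(mirrored(score_swapped))


# A judge that prefers the same answer regardless of slot: 2, then 7 after the swap.
print(position_consistent(2, 7))
# A judge that favors whatever sits in slot A: 2 both times.
print(position_consistent(2, 2))
```

Counting how often `position_consistent` fails over many pairs gives a rough measure of how strongly slot order influences the evaluator.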

A study by researchers at HuggingFace analyzed the responses from four different models to 329 different questions.

The research yielded several notable observations. First, a comparison of the pairwise rankings for the four models found that the evaluations from GPT-4 closely aligned with the assessments from human evaluators. However, the Elo rating system revealed notable disparities within the model rankings. This indicates that while the AI can tell good answers from poor ones, it struggles to rank borderline cases the way human evaluators do.

GPT-4 tended to rate the responses of other models (trained on outputs from GPT-4) higher than those provided by actual humans. This inconsistency raises doubts about the model's capacity to measure answer quality accurately and signals the need for careful interpretation of AI evaluations. A significant correlation (Pearson=0.96) was also detected between the scores assigned by GPT-4 and the number of unique tokens in the responses. This suggests that the model's scoring does not necessarily reflect the quality of the answer, and it highlights the importance of caution when relying solely on evaluations produced by models.
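A correlation of this kind can be computed with a few lines of code. The sketch below is a hypothetical illustration: `unique_token_count` uses naive whitespace tokenization rather than whatever tokenizer the study used, and the responses and scores are made-up toy values, not data from the study.

```python
from math import sqrt


def unique_token_count(text: str) -> int:
    """Count distinct whitespace-separated tokens (a crude stand-in for a real tokenizer)."""
    return len(set(text.lower().split()))


def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    norm_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    norm_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    if norm_x == 0 or norm_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / (norm_x * norm_y)


# Toy data, invented for illustration only.
responses = [
    "yes",
    "yes it is correct",
    "yes it is correct because the sum of both terms equals four",
]
judge_scores = [3.0, 5.0, 8.0]

token_counts = [float(unique_token_count(r)) for r in responses]
print(pearson(token_counts, judge_scores))
```

A value close to 1 on real data would mean that responses with more distinct tokens tend to receive higher scores, whether or not they are better answers.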

Assessing models necessitates a nuanced comprehension of potential biases and limitations. The existence of positional bias, along with the discrepancies in rankings, underscores the importance of employing a comprehensive methodology for evaluating models.

Published: June 20, 2023, at 5:46 AM | Updated: June 20, 2023, at 10:24 AM.
