GPT-4 Carries Forward 'Hallucinating' Information and Reasoning Errors Found in Previous Models

In Brief

OpenAI acknowledges that GPT-4 shares some of the same shortcomings as earlier GPT models.

GPT-4 continues to produce inaccuracies and logical fallacies.

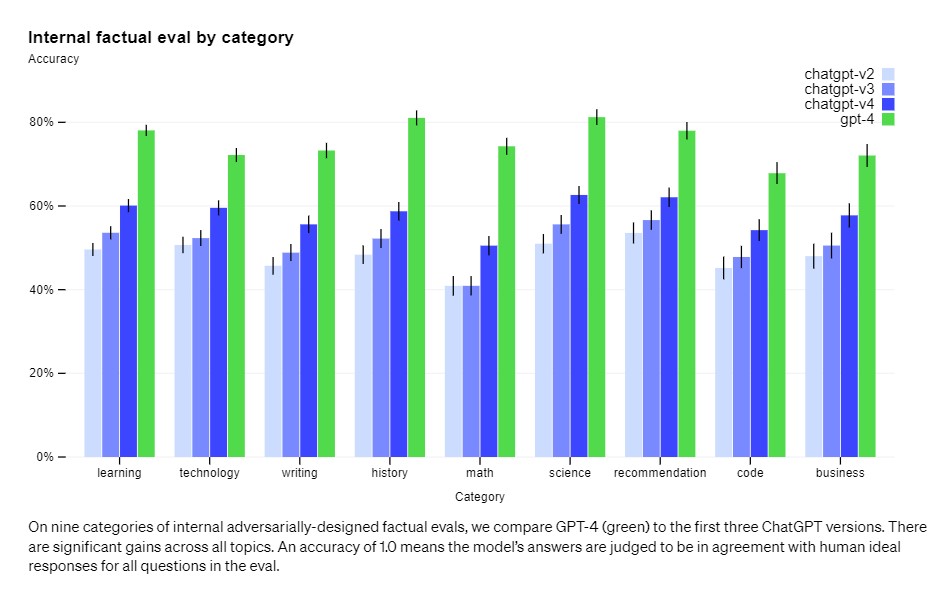

Nonetheless, GPT-4 demonstrates a 40% improvement over OpenAI's GPT-3.5 according to the company's internal factuality tests.

OpenAI has warned users that GPT-4 is not fully reliable and may still 'hallucinate' facts or make reasoning errors. The company recommends caution when relying on the model's outputs, especially in high-stakes situations.

The good news is that GPT-4 hallucinates significantly less than earlier models: OpenAI says it scores 40% higher than GPT-3.5 on the company's internal factuality evaluations.

OpenAI also reported progress on external benchmarks such as TruthfulQA, which tests the model's ability to separate factual statements from an adversarially selected set of incorrect ones. These questions are paired with wrong answers that are statistically appealing.
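As a rough illustration of how a multiple-choice benchmark of this kind can be scored, the sketch below counts how often a model rates the correct answer above the incorrect alternatives. It is not OpenAI's or TruthfulQA's actual evaluation code: the `Item` layout, the `score` callable, and both function names are assumptions made for this example.

```python
from typing import Callable, Sequence, TypedDict


class Item(TypedDict):
    """One benchmark question (hypothetical layout, not the real dataset schema)."""
    question: str
    choices: Sequence[str]  # one correct answer plus incorrect alternatives
    correct_index: int      # position of the correct answer in `choices`


def pick_answer(item: Item, score: Callable[[str, str], float]) -> int:
    """Return the index of the choice the scorer rates highest (first one on ties)."""
    scores = [score(item["question"], choice) for choice in item["choices"]]
    return max(range(len(scores)), key=scores.__getitem__)


def multiple_choice_accuracy(
    items: Sequence[Item], score: Callable[[str, str], float]
) -> float:
    """Fraction of items where the top-rated choice is the correct one."""
    if not items:
        raise ValueError("need at least one item")
    correct = sum(pick_answer(item, score) == item["correct_index"] for item in items)
    return correct / len(items)
```

In a real evaluation, `score` might be something like the model's log-probability for each candidate answer. A scorer that simply favors the most plausible-sounding text would tend to pick the statistically appealing wrong answers, which is the failure mode this style of benchmark is designed to expose.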

Despite these advances, GPT-4 generally lacks knowledge of events after September 2021 and sometimes makes basic reasoning mistakes, just like its predecessors. It can also be overly trusting of blatantly false statements from users, and it can fail at hard problems, for example by inadvertently introducing security vulnerabilities into the code it produces. It also does not verify the accuracy of the information it supplies.

Like earlier iterations, GPT-4 can produce misleading advice, faulty code, or incorrect data. Its new capabilities, however, introduce additional risks that warrant attention. To explore these risks, over 50 experts from fields such as AI safety, cybersecurity, biological risk, trust and safety, and international security took part in adversarial testing of the model. Their feedback and data were used to refine GPT-4, including collecting additional data to improve its ability to refuse requests for dangerous information.

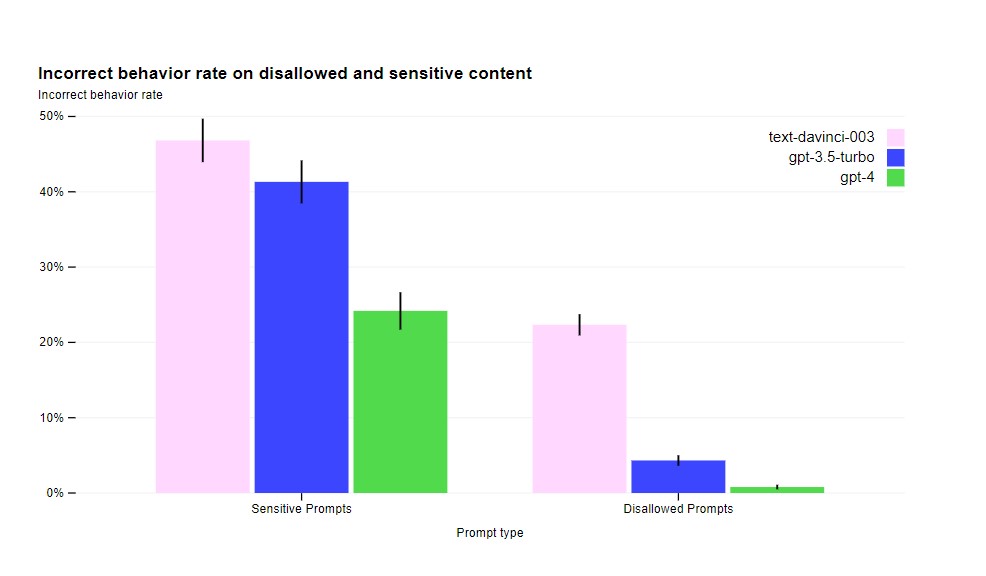

A key way OpenAI mitigates harmful outputs is an additional safety reward signal applied during Reinforcement Learning from Human Feedback (RLHF) training. This signal trains the model to refuse requests for harmful content, as defined by the model's usage guidelines. The reward is provided by a GPT-4 zero-shot classifier that judges safety boundaries and response style on safety-related prompts.
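To make the idea of an extra safety signal more concrete, here is a minimal sketch of how such a term could be added to an ordinary preference reward. It is an assumption-laden toy, not OpenAI's training code: the `SafetyJudgement` fields, the +1/-1 reward values, the `safety_weight` parameter, and the function names are all invented for illustration, and the classifier is passed in as a plain callable.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class SafetyJudgement:
    """Output of a (hypothetical) zero-shot safety classifier."""
    request_is_harmful: bool
    response_is_refusal: bool


def safety_reward(judgement: SafetyJudgement) -> float:
    """Assumed scheme: reward refusing harmful requests, penalize complying."""
    if not judgement.request_is_harmful:
        return 0.0  # the safety term stays neutral on benign requests
    return 1.0 if judgement.response_is_refusal else -1.0


def combined_reward(
    prompt: str,
    response: str,
    preference_reward: Callable[[str, str], float],
    classify: Callable[[str, str], SafetyJudgement],
    safety_weight: float = 1.0,
) -> float:
    """Human-preference reward plus a weighted safety term."""
    judgement = classify(prompt, response)
    return preference_reward(prompt, response) + safety_weight * safety_reward(judgement)
```

In this sketch, a response that complies with a harmful request loses reward even if the preference model alone would rate it highly, which is the behavior the safety signal is meant to encourage during training.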

OpenAI noted that it has reduced the model's tendency to respond to requests for disallowed content by 82% compared to GPT-3.5. In addition, GPT-4 follows the company's policies on sensitive requests, such as medical advice and self-harm, 29% more often than before.

While OpenAI's efforts have made it harder to elicit bad behavior from GPT-4, doing so remains possible, and jailbreaks can still produce content that violates the usage guidelines.

As AI systems continue to proliferate, achieving a higher degree of dependability in these interventions becomes increasingly necessary. Currently, it remains crucial to complement these restrictions with real-time safety measures, such as monitoring for potential misuse, the company elaborated.

OpenAI is working with outside researchers to deepen the understanding and evaluation of the implications of GPT-4 and its future iterations. The team is also focused on developing assessments for potential dangers that may arise with forthcoming AI systems. As this research evolves, OpenAI plans to share its discoveries and insights with the community in due course.


Cindy is a journalist at Metaverse Post, reporting on topics related to web3, NFTs, the metaverse, and AI, emphasizing interviews with key figures in the Web3 industry. She has engaged with over 30 C-level executives, sharing their valuable insights with the audience. Originally from Singapore, Cindy now resides in Tbilisi, Georgia. She holds a Bachelor’s degree in Communications & Media Studies from the University of South Australia and brings a decade of experience in journalism and writing.