Google Unveils Groundbreaking Generative Image Dynamics That Bring Static Pictures to Life

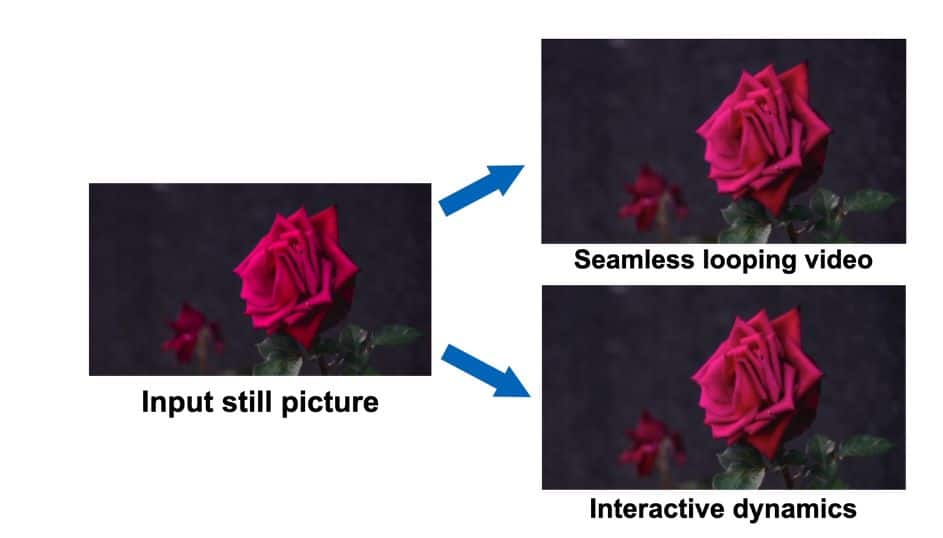

Google has unveiled a Generative Image Dynamics , an innovative technique facilitates the conversion of a single stationary image into an endlessly looping video or an engaging dynamic display, unlocking a multitude of practical uses.

At the heart of this innovative tech lies a sophisticated model that captures the essence of image-space dynamics. The goal here is to cultivate a deep understanding of how various objects within an image can react under diverse dynamic stimuli. This foundational knowledge is then applied to realistically simulate how object dynamics would respond to user interactions.

One of the standout aspects of this technology is its ability to produce videos that loop seamlessly. By harnessing an image-space prior focused on scene dynamics, the system developed by Google can predict and extend the movements of elements in a picture, turning it into an enchanting, perpetual video loop. This capability presents endless creative opportunities for designers and content creators alike.

This groundbreaking capability allows users to interact with elements within otherwise static images in a remarkably realistic manner. By effectively demonstrating how object dynamics respond to user-induced movements, Google's technology facilitates immersive and interactive encounters within visual content. This advancement holds the potential to transform metaverse spaces the way audiences connect with and experience imagery.

At the core of this development is an intricately trained model. Google's technology absorbs insights from an extensive compendium of motion trajectories gathered from actual video sequences showcasing organic, oscillating movements. These sequences feature scenes with natural elements like gently swaying trees, moving flowers, flickering candles, and billowing garments in the wind. This varied dataset empowers the model to grasp a wide spectrum of dynamic behaviors.

When presented with a single image , the trained model adopts a frequency-coordinated diffusion sampling method. This technique predicts a pixel-specific long-term motion representation within the Fourier domain, referred to as a neural stochastic motion texture. This representation is then transformed into comprehensive motion trajectories that can be utilized throughout an entire video. Together with an image-based rendering component, these trajectories pave the way for various practical applications.

In comparison to raw RGB pixel approaches, motion-based priors tap into more fundamental, lower-dimensional structures that effectively elucidate pixel value variations. This leads to more coherent long-term generation and offers finer control over animations than conventional methods that tend to rely on simpler techniques. image animation via raw video synthesis.

The crafted motion representation lends itself conveniently to numerous downstream uses, including the creation of seamless looping videos, the enhancement of generated motions, and the facilitation of user interactions, dynamic images simulating the effects of dynamics imposed by user-applied forces.

Read more related topics:

Disclaimer

In line with the Trust Project guidelines , it's important to emphasize that the content on this page is intended solely for informational purposes and should not be construed as legal, tax, investment, financial, or any other type of professional advice. It's crucial to only invest what you can afford to lose and seek independent financial counsel if you're uncertain. For more information, we recommend checking the terms and conditions along with the support resources from the issuer or advertiser. MetaversePost strives for accurate and impartial reporting, but please be aware that market conditions can shift without notice.