Gobi: OpenAI's Innovative Multimodal LLM Striving to Surpass Google’s Gemini

In Brief

Google's Gemini is attracting attention as a cutting-edge AI model thanks to its multimodal features.

Multimodality refers to a model's ability to process several forms of input, including text, images, audio, and video.

OpenAI is determined to spearhead advancements in multimodal AI with Gobi, a model specially tailored and trained to excel in this domain.

Lately, there has been considerable discourse within the tech sector about Google’s Gemini, a groundbreaking model stepping into the exciting domain of multimodal AI. But just how does multimodality function in AI, and what fuels the current intrigue?

Multimodal AI refers to a model's capacity to work with diverse types of data, such as text, images, audio, and video. There are several ways to achieve this. One cost-effective method uses separate models for images and text, typically a Large Language Model (LLM) for the text. A bridge converts images into a text-friendly format that the LLM can process. This technique has gained traction in open-source AI, but its main drawback is that the LLM may not fully grasp the other modalities, which end up treated as mere add-ons.

A bolder strategy is to build a model designed from the beginning to interpret and operate across multiple modalities at once. The aim is to give the model a more comprehensive understanding of the world, improving its reasoning and helping it recognize cause-and-effect relationships more clearly.
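To make the bridge approach described above more concrete, here is a minimal sketch in plain Python. It assumes the bridge is a single linear projection that maps image feature vectors into the LLM's embedding space and prepends them to the text token embeddings. The function names, the dimensions, and the random weights are illustrative assumptions, not details from any particular system.

```python
import random

def make_projection(in_dim, out_dim, seed=0):
    """Random stand-in for a learned linear bridge (out_dim x in_dim matrix)."""
    rng = random.Random(seed)
    return [[rng.uniform(-0.1, 0.1) for _ in range(in_dim)] for _ in range(out_dim)]

def project(vector, matrix):
    """Map one image feature vector into the LLM's embedding space."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]

def build_llm_input(image_features, text_embeddings, matrix):
    """Prepend projected image features to the text token embeddings."""
    image_tokens = [project(f, matrix) for f in image_features]
    return image_tokens + text_embeddings

# Hypothetical sizes: 3 image patches of width 8, 5 text tokens of width 4.
IMAGE_DIM, LLM_DIM = 8, 4
image_features = [[float(i + j) for j in range(IMAGE_DIM)] for i in range(3)]
text_embeddings = [[0.0] * LLM_DIM for _ in range(5)]

bridge = make_projection(IMAGE_DIM, LLM_DIM)
sequence = build_llm_input(image_features, text_embeddings, bridge)
print(len(sequence), len(sequence[0]))  # prints: 8 4
```

The LLM here only ever sees vectors shaped like its own token embeddings, which illustrates why the image input can end up as an add-on: everything visual has to pass through the narrow projection.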

This brings us to the frontier of AI development, where OpenAI is carefully positioning itself.

The primary model they are developing is Gobi, a multimodal model conceived for this purpose from the start. Unlike its predecessors, Gobi represents a milestone in the evolution of versatile AI technology. Yet there is a twist. Recent reports suggest that Gobi's training has not yet started, casting doubt on its launch schedule, which coincides with the anticipated debut of Gemini in autumn 2023. The race for dominance in multimodal AI is intensifying.

One question that arises is why creating a new model takes so long when it might seem like a straightforward matter of adding image support. The difficulty lies in the ethical implications and the risk of misuse. Adding visual comprehension invites new problems, such as AI being exploited to bypass security measures or invade privacy. OpenAI appears to be addressing these responsibilities thoroughly before launching the technology.

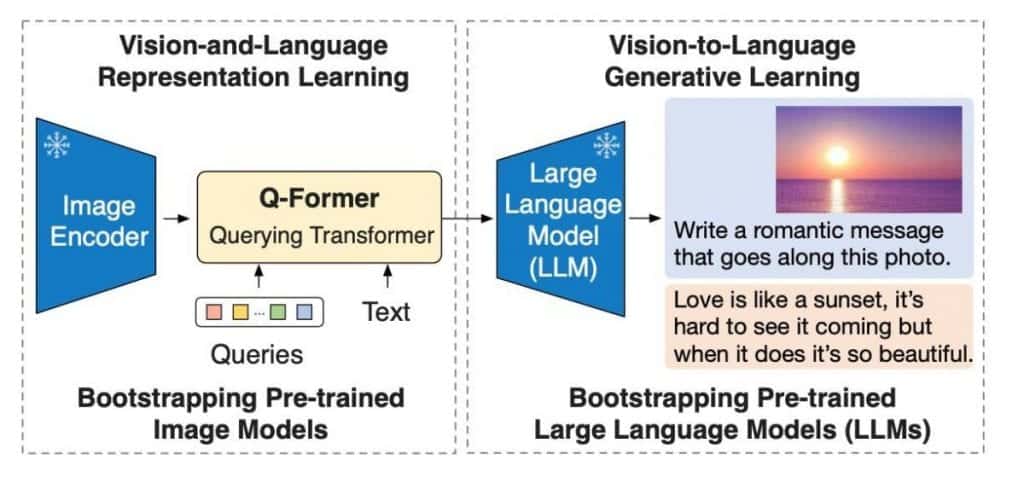

Salesforce and Multimodal Models

Many companies are currently developing multimodal models. For example, Salesforce, a prominent player in the SaaS CRM market, has been pursuing AI research aimed at reducing the resources its models consume. The work covers LLMs and multimodal frameworks that connect various data types, including text, images, sound, and video. One illustration of multimodality is answering questions about images. The primary hurdle is merging the disparate signals from text and visuals. Existing methods typically require extensive training of large models to align or connect the two. Salesforce proposes reusing pre-existing models, keeping their weights frozen during training, and training only a compact network that generates queries between the models. This method requires less training yet yields better performance than the leading existing techniques. Its appeal lies in its simplicity and elegance.

The article provides code for the proposed approach, and a Colab version is available for users who want to explore their own images.
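The idea of keeping the large models frozen and training only a small connecting module can be sketched as follows. This is a generic illustration under stated assumptions, not Salesforce's implementation. The toy encoder, the `QueryBridge` class, and all sizes are hypothetical, and only the forward pass is shown. In training, only `bridge.queries` would be updated while the encoder and the LLM stay fixed.

```python
import math
import random

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def frozen_image_encoder(image):
    """Toy stand-in for a pretrained encoder; its weights are never updated."""
    return [[float(pixel), float(pixel) ** 0.5] for pixel in image]

class QueryBridge:
    """Small trainable module: learned queries that attend over image features."""

    def __init__(self, num_queries, dim, seed=0):
        rng = random.Random(seed)
        self.queries = [[rng.uniform(-1, 1) for _ in range(dim)]
                        for _ in range(num_queries)]

    def attention_weights(self, features):
        """One softmax distribution over the image features per query."""
        scale = math.sqrt(len(features[0]))
        return [softmax([dot(q, f) / scale for f in features])
                for q in self.queries]

    def __call__(self, features):
        """Return one weighted average of the image features per query."""
        dim = len(features[0])
        return [[sum(w * f[d] for w, f in zip(weights, features))
                 for d in range(dim)]
                for weights in self.attention_weights(features)]

    def num_trainable(self):
        return sum(len(q) for q in self.queries)

image = [0, 1, 4, 9, 16, 25]               # six toy "pixels"
features = frozen_image_encoder(image)     # six feature vectors of width 2
bridge = QueryBridge(num_queries=2, dim=2)
summary = bridge(features)                 # two vectors to hand to a frozen LLM
print(len(summary), bridge.num_trainable())
```

However many image features the encoder produces, the bridge condenses them into a fixed number of vectors, and the number of trainable values depends only on the bridge. That is the sense in which such a setup needs less training than retraining the large models themselves.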


Damir leads the team, acting as a product manager and editor at Metaverse Post, covering subjects such as AI/ML, AGI, LLMs, the Metaverse, and related Web3 issues. His content reaches over a million users monthly. With a decade of expertise in SEO and digital marketing, Damir's insights have been featured in outlets such as Mashable, Wired, Cointelegraph, The New Yorker, Inside.com, Entrepreneur, and BeInCrypto, among others. As a digital nomad, he travels between the UAE, Turkey, Russia, and various CIS countries. He holds a bachelor's degree in physics, which he believes gives him the analytical thinking needed to navigate the rapidly evolving digital landscape.
