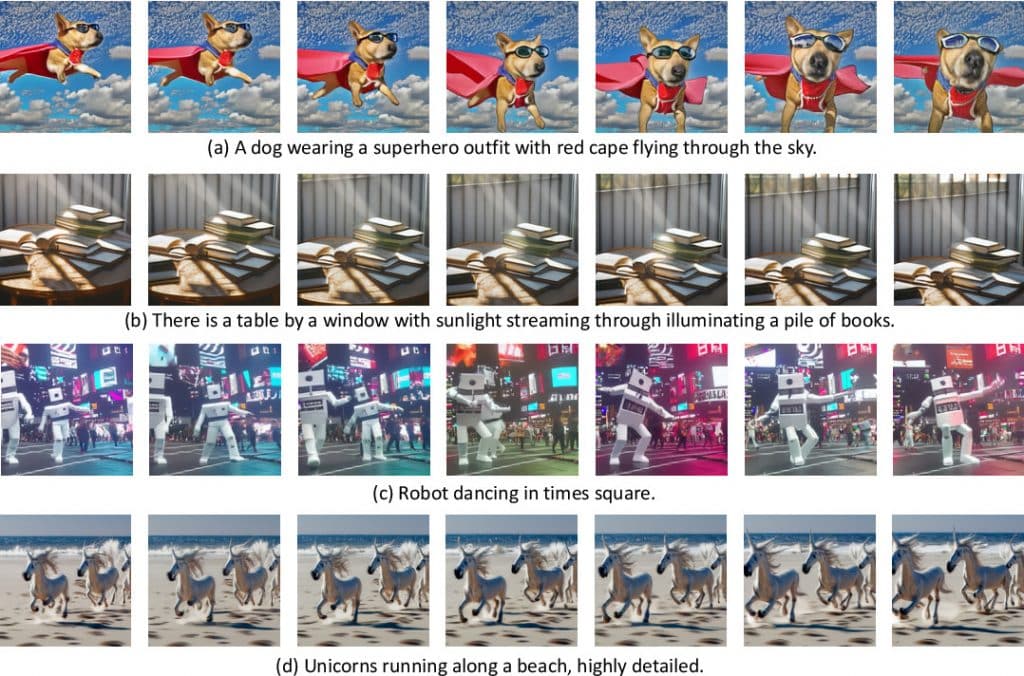

Text-to-video models interpret natural language prompts to generate videos. They analyze the context and semantics of the text and use deep learning techniques, such as recurrent neural networks, to produce corresponding video sequences. The field of text-to-video synthesis is evolving rapidly, and the models demand significant data and computational power to train effectively. They can be used for various purposes, including assisting in filmmaking, creating entertaining clips, or producing promotional content.

April 24, 2025

Getting to Grips with Text-to-Video AI Models

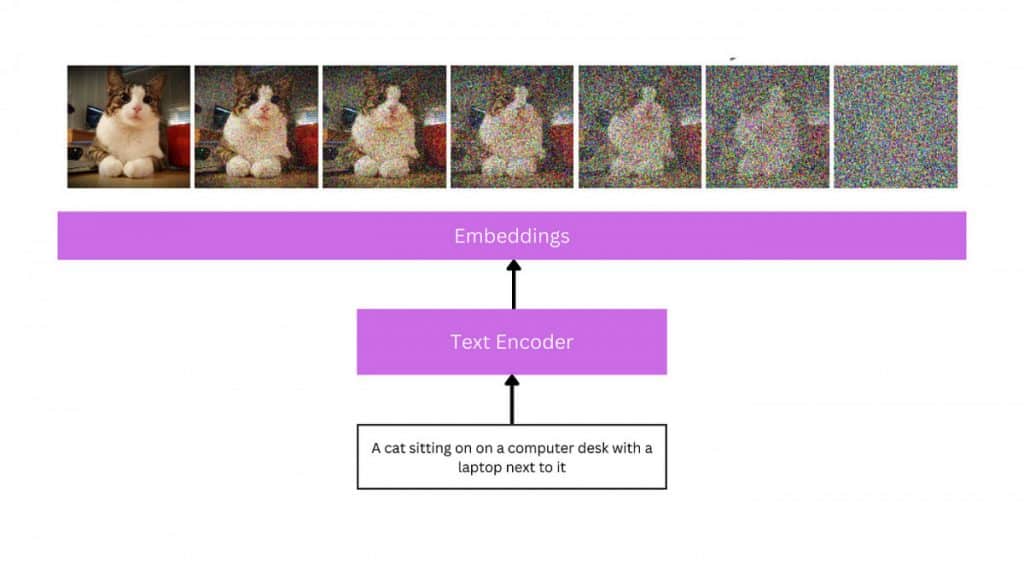

As with text-to-image generation, producing videos from text is a relatively new area of study. Initial attempts focused primarily on generating frames from captions in an auto-regressive manner, using techniques based on GANs and VAEs. Although these early studies laid a solid foundation for a new computer vision task, they were limited to low-resolution results, short clips, and simple, isolated motions.

The next wave of research in text-to-video generation adopted transformer architectures, inspired by the success of large pre-trained transformer models such as GPT-3 and DALL-E in other domains. TATS introduced a hybrid approach that combines VQGAN for image generation with a temporal transformer for frame sequencing, while Phenaki, Make-A-Video, NUWA, VideoGPT, and CogVideo all tapped into transformer-based frameworks. Notably, Phenaki can create lengthy videos from a series of prompts or a narrative, and NUWA-Infinity uses an autoregressive technique to synthesize images and videos of effectively unbounded length from textual input. However, models like NUWA and Phenaki remain out of reach for the average user.

Many current text-to-video models are built on diffusion-based architectures, which have demonstrated remarkable capabilities in generating intricate, hyper-realistic images. This has prompted interest in applying diffusion methods to other domains, including audio, 3D modeling, and, notably, video. Leading this new generation are Video Diffusion Models (VDM), which adapt diffusion techniques to video, and MagicVideo, which proposes a streamlined framework for generating video clips in a low-dimensional latent space and promises better efficiency than VDM. Another standout is Tune-a-Video, which fine-tunes a pretrained text-to-image model on a single text-video pair, enabling users to modify video content while keeping the motion consistent.
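To get a feel for why generating in a latent space can be cheaper than generating in pixel space, here is a rough back-of-the-envelope sketch. The clip size, the 8x spatial downsampling factor, and the 4 latent channels are illustrative assumptions borrowed from common image autoencoders; they are not figures reported for MagicVideo or VDM.

```python
def tensor_size(frames: int, channels: int, height: int, width: int) -> int:
    """Number of values in a video tensor of shape (frames, channels, height, width)."""
    return frames * channels * height * width


# Hypothetical clip: 16 RGB frames at 256x256 pixels.
FRAMES, HEIGHT, WIDTH = 16, 256, 256
pixel_values = tensor_size(FRAMES, channels=3, height=HEIGHT, width=WIDTH)

# Assumed autoencoder: 8x downsampling per spatial side, 4 latent channels.
DOWNSAMPLE = 8
LATENT_CHANNELS = 4
latent_values = tensor_size(
    FRAMES,
    channels=LATENT_CHANNELS,
    height=HEIGHT // DOWNSAMPLE,
    width=WIDTH // DOWNSAMPLE,
)

# How many times fewer values the denoiser has to process per step.
reduction = pixel_values / latent_values

print(f"pixel space:  {pixel_values:,} values")
print(f"latent space: {latent_values:,} values")
print(f"reduction:    {reduction:.0f}x")
```

Under these assumptions the denoising network handles 48 times fewer values per step. Actual speedups depend on the architecture and on the cost of encoding and decoding, so this ratio is only an intuition aid.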

by Alisa Davidson, April 24, 2025

Latest Updates on Text-to-Video AI Models

- Zeroscope is a free, open-source text-to-video tool that rivals Runway ML’s Gen-2. Its goal is to convert textual descriptions into vibrant visuals, offering enhanced resolution and a 16:9 aspect ratio. It is available in two versions, Zeroscope_v2 576w and Zeroscope_v2 XL, requires 7.9 GB of VRAM, and incorporates offset noise for better data distribution. As an open-source alternative to Gen-2, Zeroscope presents a broader spectrum of realistic video outputs.

- VideoDirectorGPT is a method for generating videos from text that merges Large Language Models (LLMs) with video planning to craft coherent and precise multi-scene videos. The approach uses an LLM as a guiding force in storytelling, generating scene-level narratives, item lists, and detailed frame layouts. The Layout2Vid module offers spatial control over object arrangements.

- Platforms like Yandex’s Masterpiece and Runway’s Gen-2 strive for accessibility and ease of use while enhancing content creation and dissemination across social media. Yandex has unveiled a new feature, Masterpiece, that lets users create short videos of up to 4 seconds at 24 fps. The technology employs a cascaded diffusion approach to generate successive frames, allowing users to develop a diverse array of content. Masterpiece complements Yandex’s existing features, which include image generation and text posting. The neural network crafts videos based on text descriptions, frame selections, and automated processes. The feature has quickly gained traction and is currently reserved for active users.

Recent Social Media Updates on Text-to-Video AI Models

Converting images to videos through text prompts. AI art is advancing rapidly. 🤯

Victoria writes extensively on various technology subjects, including Web3.0, AI, and cryptocurrencies. Her vast experience enables her to produce insightful articles that resonate with a broad audience.
