Gen-1: AI Innovatively Merges Prompts and Images to Produce New Video Content

In Brief

Gen-1 can create new videos from existing footage by combining it with text prompts and images.

It can also generate entirely original videos from scratch.

Transforming existing footage into new videos opens up many applications.

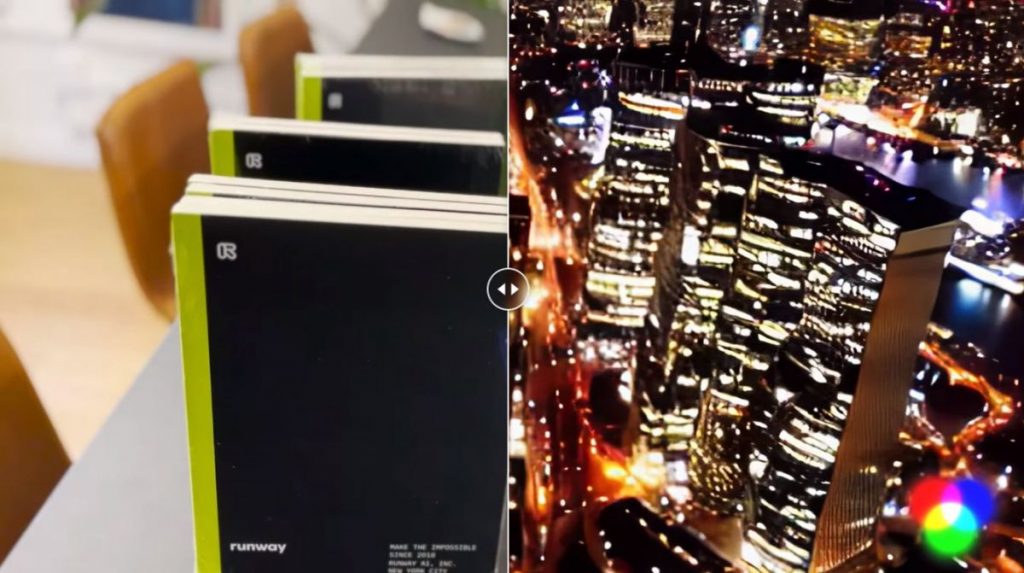

The AI startup RunwayML has announced Gen-1, a neural network that generates new video content from existing footage by blending it with prompts and images. Previously, neural networks were mostly used for style transfer, which copies the style of one image and applies it to another. This is how a serene landscape can be reimagined in the manner of Van Gogh's 'Starry Night.'

For now, the videos Gen-1 produces are short and fairly simple. As the technology matures, more sophisticated and lifelike AI-created videos are likely to follow. Text-guided generative diffusion models already provide powerful image creation and editing tools. They have been extended to video, but current methods for editing existing footage either require costly retraining for each new input or propagate image edits across frames in ways that are prone to inconsistencies.

What is Gen-1?

Gen-1 combines the composition and style of an image or text prompt with the structure of a source video to synthesize a new video that is realistic and coherent. The effect is like filming something new without shooting any new footage. Because no new filming is required, this kind of video synthesis lets creators produce content quickly and at lower cost than traditional production methods.

Applying the style of an image or prompt to every frame of a video gives the whole project a consistent visual identity.

Concept mockups can be turned into fully stylized, animated renders that realize the creator's vision.

Specific subjects in the footage can be isolated and modified with simple text prompts.

Untextured renders can be turned into realistic output by applying an input image or prompt, bringing 3D models to life.

Gen-1 can also create entirely new videos from scratch, whether to reimagine existing films or to make original ones. Filmmakers and other creatives looking for new ways to expand their work gain a powerful new tool.

The ability to generate new videos from existing content has many potential uses. For instance, creators could produce alternate versions of scenes that would be too costly or impractical to reshoot.

Those interested can apply to experiment with the model while it is still in its beta phase.

