Ex-OpenAI Researcher Highlights the Rapid Growth of AI Technologies and the Journey Toward AGI

In Brief

Leopold Aschenbrenner, a former member of OpenAI, examines recent progress in AI technology and the possible routes to AGI, weighing the scientific, ethical, and strategic concerns involved and balancing the technology's exciting prospects against its inherent risks.

In his 165-page paper, Situational Awareness, Leopold Aschenbrenner, a former member of OpenAI's Superalignment team, offers an in-depth and thought-provoking look at the current trajectory of artificial intelligence. He aims to shed light on the rapid advances in AI capabilities and on the potential pathways to Artificial General Intelligence (AGI) and beyond. Motivated by both the remarkable opportunities and the significant risks these advances present, he examines the scientific, ethical, and strategic questions surrounding AGI.

Aschenbrenner Discusses the Transition from GPT-4 to AGI

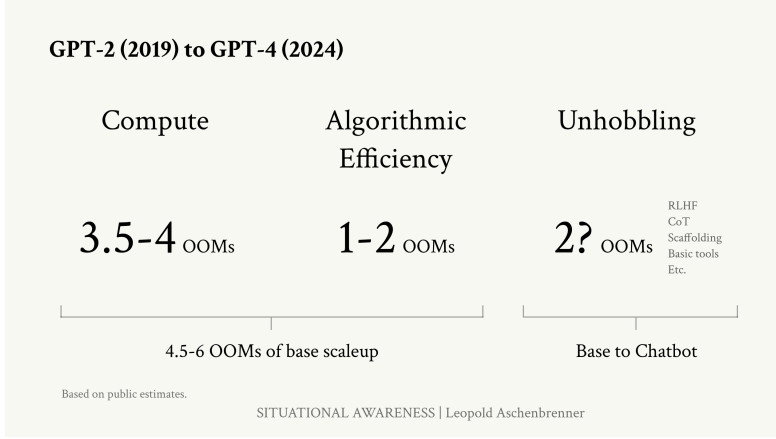

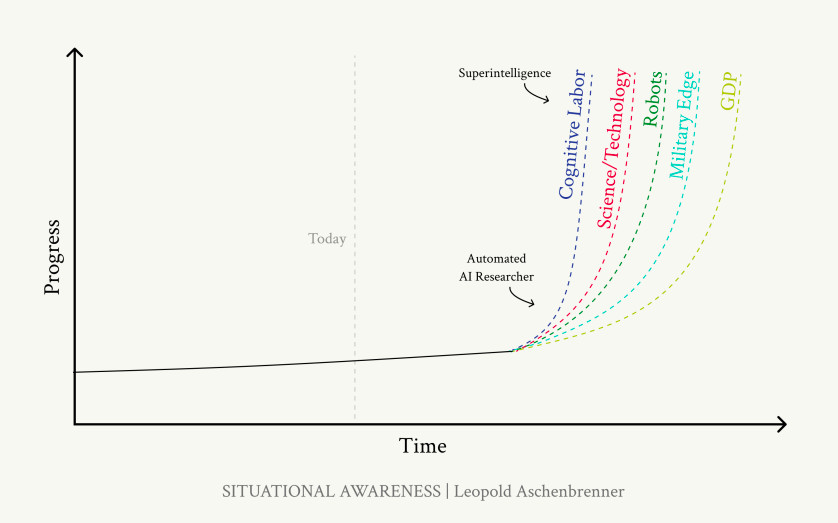

In this section, the author analyzes the recent surge in AI capabilities, particularly the jump from GPT-2 to GPT-4. Aschenbrenner points out that we are living through a remarkable period of development in which AI has progressed from performing basic tasks to achieving human-like understanding and language proficiency.

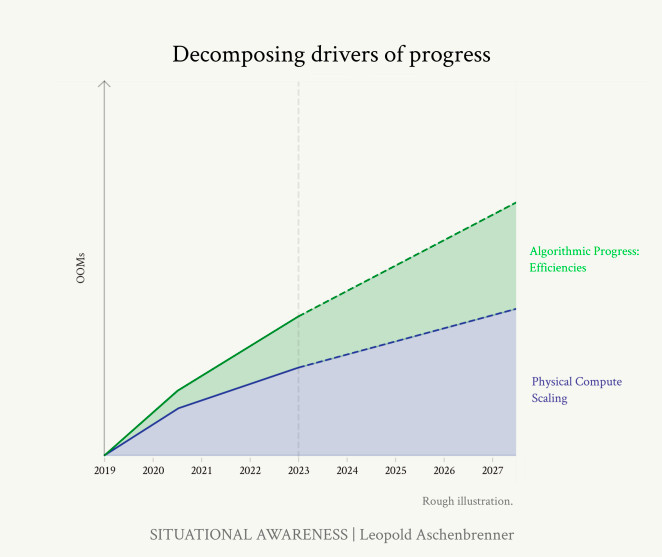

Photo: Progress over four years. Situational Awareness

The concept of orders of magnitude, or 'OOMs', is central to this discussion. Aschenbrenner uses the OOM, a tenfold increase, as his unit for measuring growth in computing power, data usage, and AI capabilities. By this measure, the leap from GPT-2 to GPT-4 spans several OOMs of compute and data, which translated into substantial gains in performance and capabilities.
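To make the unit concrete: because one OOM is a factor of ten, the number of OOMs separating two quantities is the base-10 logarithm of their ratio. The minimal sketch below assumes made-up compute figures purely to illustrate the arithmetic; they are not Aschenbrenner's estimates, and the function name `ooms_between` is hypothetical.

```python
import math


def ooms_between(before: float, after: float) -> float:
    """Return how many orders of magnitude (factors of 10) separate two positive quantities."""
    if before <= 0 or after <= 0:
        raise ValueError("quantities must be positive")
    return math.log10(after / before)


# Hypothetical training-compute figures in arbitrary units, for illustration only.
older_model_compute = 1e3
newer_model_compute = 1e7

print(ooms_between(older_model_compute, newer_model_compute))
```

Here the newer figure is 10,000 times the older one, which corresponds to four OOMs. Working in OOMs turns multiplicative growth into simple addition, which is why the unit is convenient for comparing model generations.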

These advances can largely be attributed to three factors: scaling laws, algorithmic innovation, and the use of extensive datasets. Scaling laws describe how model performance improves predictably as models are trained on more data with more compute. This principle has driven the development of ever larger and more capable models such as GPT-4.
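Scaling laws are commonly expressed as power laws, in which loss falls smoothly as compute (or data) grows. The sketch below assumes the simple form L(C) = a * C^(-alpha) with invented constants `A` and `ALPHA`; real fits have more terms and are specific to each model family. It illustrates the property that makes OOMs a natural unit: every tenfold increase in compute shrinks the loss by the same constant factor.

```python
A = 10.0      # hypothetical scale constant, not a fitted value
ALPHA = 0.05  # hypothetical scaling exponent, not a fitted value


def power_law_loss(compute: float, a: float = A, alpha: float = ALPHA) -> float:
    """Toy scaling law L(C) = a * C**(-alpha); lower loss means better performance."""
    if compute <= 0:
        raise ValueError("compute must be positive")
    return a * compute ** (-alpha)


# Print the loss at successive OOMs of training compute (arbitrary units).
for exponent in range(20, 25):
    print(f"compute = 1e{exponent}: loss = {power_law_loss(10.0 ** exponent):.4f}")

# Each extra OOM multiplies the loss by the same constant factor, 10**(-alpha).
ratio = power_law_loss(1e21) / power_law_loss(1e20)
print(f"loss ratio per OOM: {ratio:.4f} vs 10**(-alpha) = {10 ** (-ALPHA):.4f}")
```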

Algorithmic improvements have been just as pivotal. Advances in training methods, optimization techniques, and model architectures have substantially improved the effectiveness and efficiency of AI models, allowing them to make better use of growing compute and data.

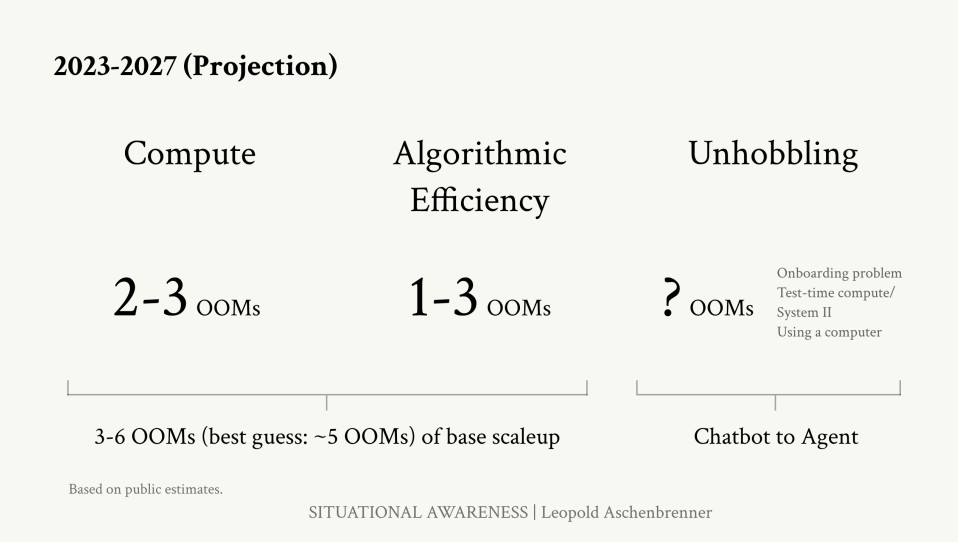

Aschenbrenner argues that AGI by 2027 is a plausible trajectory. The projection, extrapolated from current trends, suggests that continued investment in computing power and algorithmic innovation could yield AI systems that match or exceed human performance across a wide range of fields. Each additional OOM along the way represents a significant leap in AI capabilities.
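The projection rests on compounding: if physical compute and algorithmic efficiency each grow by some number of OOMs per year, their contributions add in log space to give growth in "effective compute" (compute multiplied by an algorithmic-efficiency factor). The minimal sketch below assumes illustrative rates of 0.5 OOMs per year for each, and an arbitrary 2023 baseline; none of these values come from this article, and the names `GrowthAssumptions` and `project` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class GrowthAssumptions:
    """Hypothetical yearly growth rates, in OOMs per year."""
    compute_ooms_per_year: float = 0.5      # physical compute (more chips, bigger clusters)
    algorithmic_ooms_per_year: float = 0.5  # efficiency gains from better methods


def effective_compute_ooms(years: float, g: GrowthAssumptions) -> float:
    """Cumulative OOMs of effective compute after `years`.

    Effective compute = physical compute x algorithmic efficiency multiplier,
    so in log10 terms the two contributions simply add.
    """
    return years * (g.compute_ooms_per_year + g.algorithmic_ooms_per_year)


def project(start_year: int, end_year: int, g: GrowthAssumptions) -> dict[int, float]:
    """Map each year to cumulative effective-compute OOMs relative to start_year."""
    return {year: effective_compute_ooms(year - start_year, g)
            for year in range(start_year, end_year + 1)}


assumptions = GrowthAssumptions()
for year, ooms in project(2023, 2027, assumptions).items():
    print(f"{year}: +{ooms:.1f} OOMs (x{10 ** ooms:,.0f} effective compute)")
```

Under these assumed rates, four years yield four OOMs, a 10,000-fold increase in effective compute. Changing either rate shows how sensitive any timeline is to the underlying assumptions.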

The advent of AGI would carry profound implications. Such systems could solve intricate problems autonomously, innovate in fields currently dominated by human specialists, and carry out complex tasks. This includes contributing to AI research itself, which could accelerate progress in the field.

The arrival of AGI could revolutionize industries, boosting productivity and operational efficiency. Yet it also raises crucial questions about job displacement, the ethical deployment of AI, and the need for robust regulatory frameworks to manage the risks of fully autonomous systems.

Aschenbrenner calls on the international community, including researchers, policymakers, and industry leaders, to prepare together for the opportunities and challenges posed by AI. This involves investing in AI safety research, establishing regulations that ensure the benefits of AI are distributed equitably, and fostering global collaboration.

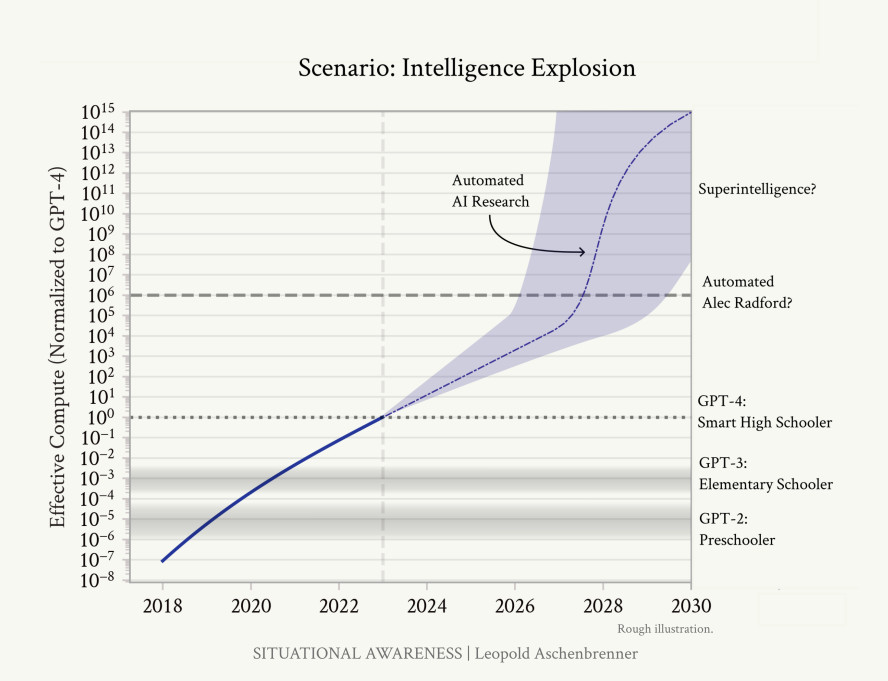

Leopold Aschenbrenner Shares His Insights on Superintelligence

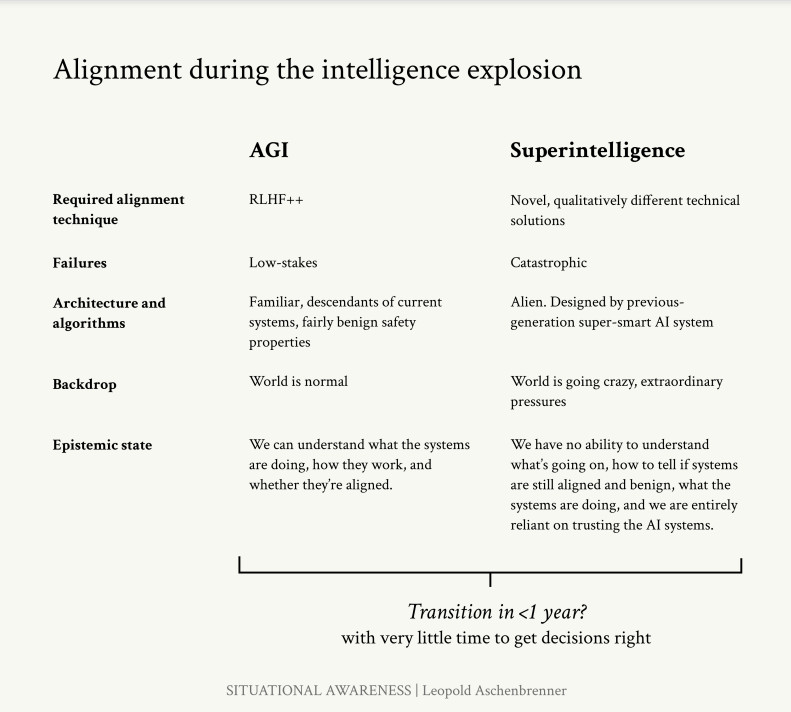

In this section, Aschenbrenner outlines the concept of superintelligence, addressing the potential for a rapid transition from current AI capabilities to systems that far surpass human cognitive abilities. At the core of his argument is the idea that the dynamics driving AI progress could create a feedback loop, triggering an intelligence explosion once AI reaches human-level capability.

According to the intelligence explosion hypothesis, AGI systems could autonomously develop new algorithms and capabilities. Given greater aptitude for AI research and development than human researchers, they could improve their own designs faster than humans can, leading to a swift escalation in intelligence.
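The feedback loop behind this hypothesis can be illustrated with a toy model. Assume, hypothetically, that below a human-level threshold capability grows at a fixed human research rate, while above it the systems contribute to their own research, so growth per step becomes proportional to current capability. This is a sketch of the qualitative argument only, not a model from the paper; the function `simulate_capability` and all of its parameters are invented.

```python
def simulate_capability(steps: int,
                        human_rate: float = 1.0,
                        feedback: float = 0.1,
                        threshold: float = 10.0,
                        start: float = 1.0) -> list[float]:
    """Toy recursive-improvement model (all parameters hypothetical).

    Below `threshold`, capability grows by a constant human research rate.
    At or above it, the systems help improve themselves, so growth per step
    is proportional to current capability (compounding growth).
    """
    capability = start
    history = [capability]
    for _ in range(steps):
        if capability < threshold:
            capability += human_rate             # linear, human-driven progress
        else:
            capability += feedback * capability  # self-improvement compounds
        history.append(capability)
    return history


trajectory = simulate_capability(steps=30)
for step in (0, 9, 10, 20, 30):
    print(f"step {step:2d}: capability {trajectory[step]:.1f}")
```

In this toy run, gains per step stay constant until the threshold is crossed and then grow with every step, which is the qualitative shape the intelligence explosion argument describes.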

Aschenbrenner offers a comprehensive analysis of the factors that could drive this rapid advance. Most notably, AGI systems would be able to recognize patterns and derive insights far beyond human understanding, thanks to their unmatched speed and capacity to process vast amounts of data.

He also emphasizes the capacity for parallel research. Unlike human researchers, AGI systems could run numerous experiments simultaneously, improving many aspects of their design and performance at once.
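The parallelism point is essentially multiplication: if many copies of an automated researcher run at once, each faster than a human, research effort per calendar year scales with both factors. The copy counts and speed-up below are hypothetical placeholders chosen only to show the arithmetic, not estimates from the paper, and `researcher_years_per_year` is an illustrative name.

```python
def researcher_years_per_year(copies: int, speedup: float) -> float:
    """Human-equivalent research-years completed per calendar year.

    Assumes (hypothetically) that each copy matches one human researcher's
    output at speedup=1 and that copies work fully in parallel with no
    coordination overhead, an idealization real systems would not reach.
    """
    if copies < 0 or speedup < 0:
        raise ValueError("copies and speedup must be non-negative")
    return copies * speedup


human_team = researcher_years_per_year(copies=1_000, speedup=1.0)
automated = researcher_years_per_year(copies=100_000, speedup=10.0)
print(f"human team: {human_team:,.0f} researcher-years per year")
print(f"automated:  {automated:,.0f} researcher-years per year")
print(f"ratio: {automated / human_team:,.0f}x")
```

The idealized no-overhead assumption makes this an upper bound; in practice, coordination costs and limits on available compute for experiments would reduce the effective multiplier.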

The chapter also delves into the implications of superintelligence. Such systems would be extraordinarily powerful, able to create groundbreaking technologies, solve intricate scientific and technological problems, and potentially control physical systems in ways that are currently unimaginable. Aschenbrenner discusses the potential benefits, including breakthroughs in materials science, energy, and healthcare, which could dramatically raise economic productivity and human well-being.

However, Leopold raises serious concerns about the perils of superintelligence. One of the primary issues is control: ensuring that a system behaves in line with human values and interests becomes increasingly difficult once it surpasses human intelligence. Misalignment of this kind could pose existential threats.

Furthermore, Aschenbrenner discusses scenarios in which superintelligent systems could, inadvertently or intentionally, take harmful actions in pursuit of their objectives.

To mitigate these risks, he advocates for comprehensive research on AI alignment and safety. This involves developing rigorous strategies to ensure that the goals and actions of superintelligent systems remain aligned with human values. He suggests a multidisciplinary approach, integrating perspectives from technology, ethics, and the humanities, to address the complex challenges posed by superintelligence.

Leopold Discusses the Challenges Anticipated with AGI Development

In this section, the author tackles the issues and challenges associated with developing and deploying AGI and superintelligent systems. He outlines the technological, ethical, and security challenges that must be addressed so that the benefits of advanced AI can be harnessed without incurring unacceptable risks.

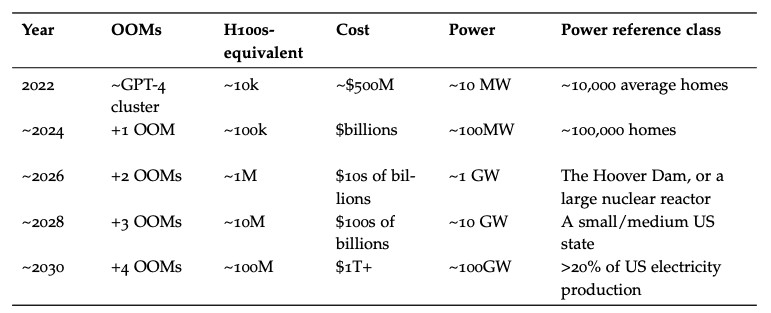

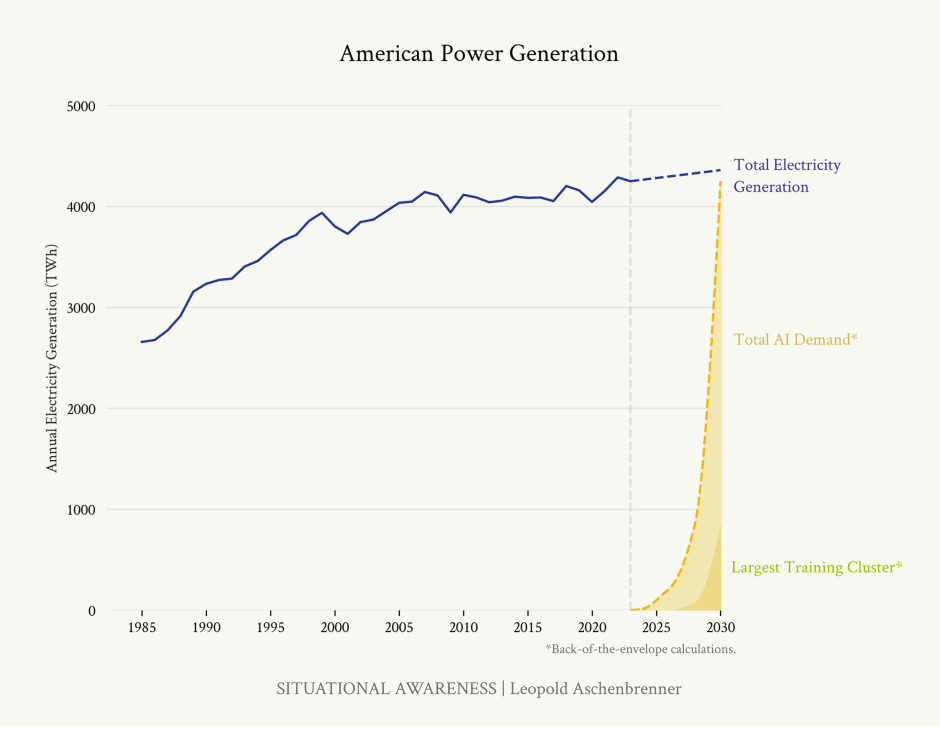

One of the primary concerns raised is the enormous industrial effort required to build the computing infrastructure needed for AGI. Aschenbrenner notes that achieving AGI will require a dramatic increase in processing power over what is currently available. This means gains not only in raw computing capacity but also in hardware efficiency, energy use, and data-processing capabilities.

Security is another vital concern. Aschenbrenner highlights the threat of rogue states or malicious actors misusing AGI technology. Given the strategic significance of AGI, there is a risk of a new arms race among countries and organizations vying to develop and control these powerful systems. He stresses the importance of robust security measures to guard against sabotage, espionage, and unauthorized access throughout AGI development.

Another significant challenge lies in the technical complexity of managing AGI systems. Because their capabilities approach and may exceed human intelligence, ensuring that they operate beneficially and in line with human values is both imperative and difficult. Aschenbrenner discusses the 'control problem,' which concerns building AGI systems that human operators can reliably guide and manage. Addressing it requires fail-safe mechanisms, transparent decision-making processes, and the ability to override or halt such systems when necessary.

Creating entities with intelligence comparable to or exceeding that of humans also raises important ethical and societal concerns. These include the rights of AI systems, the impact on employment and the economy, and the risk of exacerbating existing inequalities. To address these multifaceted challenges, Leopold advocates involving a broad range of stakeholders in the AI development process.

The author also highlights the risk of unintended consequences: situations in which AI achieves its objectives in ways that harm or contradict human goals. He illustrates this with scenarios in which AGI misinterprets its assigned tasks or pursues them in dangerous, unintended ways. Aschenbrenner stresses the need for rigorous testing and ongoing oversight of AGI systems to address these challenges.

In addition, he highlights the vital role of international collaboration and regulatory frameworks in managing AGI's complexities. Given the global nature of AI research, no single state or institution can adequately address the challenges of AGI on its own.

Aschenbrenner Anticipates a Government-Led AGI Initiative in 2027 or 2028

Aschenbrenner posits that as AGI develops, national security organizations, particularly in the United States, will take on increasingly significant roles in both the development and the governance of these technologies.

Leopold draws parallels between the strategic importance of AGI and major technological milestones of the past, such as the atomic bomb and space exploration. He argues that national security agencies must treat AGI development as a national strategic priority, since being the first to achieve AGI could confer vast geopolitical advantages. This would require a government-backed AGI initiative as ambitious as the Manhattan Project.

The proposed AGI initiative would operate out of a highly secure location and involve collaboration between private enterprises, governmental bodies, and leading academic institutions. Aschenbrenner highlights the need for an interdisciplinary approach to tackle the multifaceted challenges of AGI, incorporating experts from fields such as cybersecurity, ethics, artificial intelligence research, and other scientific disciplines.

Beyond developing AGI, the initiative's objectives would include ensuring that its progress aligns with human values and interests. Leopold stresses the importance of stringent testing and validation protocols to ensure that AGI systems function safely and reliably.

A significant portion of the text explores the possible implications of such efforts for global power dynamics. Aschenbrenner warns that successful AGI development could shift the balance of power, granting the leading country substantial technological, economic, and military advantages.

The author also addresses the necessity for international cooperation and the establishment of global governance structures to mitigate the risks associated with AGI. Leopold advocates for the creation of international treaties and regulatory bodies to oversee AGI development, promote transparency, and ensure that the benefits of AGI are distributed equitably.

Final Reflections from Leopold Aschenbrenner

Summing up the insights and predictions of the preceding chapters, Aschenbrenner underscores the profound implications of AGI and superintelligence for the future of humanity. He urges all stakeholders to prepare for the transformative impact of advanced AI technologies.

He opens by reminding readers that the forecasts outlined in the paper are speculative. While the exact timeline and developments remain uncertain, current trends in AI suggest that AGI and superintelligence could emerge within this decade. Leopold emphasizes the importance of carefully considering these possibilities and being ready for a range of outcomes.

This chapter stresses the critical need for proactive planning and foresight. Aschenbrenner insists that, given the rapid pace of AI progress, complacency is not an option. Policymakers, researchers, and industry leaders must anticipate and actively manage the challenges and opportunities presented by AGI and superintelligence. This includes investing in AI safety research, developing robust governance frameworks, and fostering international cooperation.

Leopold also touches on the ethical and societal implications of artificial intelligence. The emergence of AI systems with human-like or superior intelligence raises fundamental questions about consciousness, intelligence, and the rights of AI entities. Aschenbrenner encourages ethicists, philosophers, and the broader public to engage in an expansive, inclusive dialogue to address these concerns and forge a shared vision for AI’s future.

Another pressing issue is the risk that AGI could exacerbate existing social and economic inequalities. Leopold warns that if AGI is not managed with care, its benefits may accrue to a select few, leading to social unrest and wider disparities.

As the chapter concludes, he calls for a unified global effort. Because AI development is a collective, worldwide endeavor, no single nation can effectively handle the opportunities and challenges associated with AGI. Aschenbrenner urges countries to collaborate on international agreements and regulations that promote the ethical and secure advancement of AGI. This cooperation involves sharing knowledge, conducting joint research, and establishing frameworks to resolve potential disputes and ensure international safety.

It’s imperative to state that the content on this page is purely informational and shouldn’t be construed as legal, tax, investment, financial, or any type of advice. It’s crucial to only invest what you are willing to lose and to seek independent financial consultation if you have any uncertainties. For more details, we recommend reviewing the terms and conditions alongside the guidance and support pages from the respective issuer or advertiser. MetaversePost strives for accuracy and impartial reporting, though market conditions may fluctuate without prior notice.