Facebook Unveils Innovative Technique to Boost AI Transformer Efficiency

In Brief

Facebook has introduced a new technique that substantially improves the efficiency of AI models built on the transformer architecture.

The technique identifies similar patches between processing blocks and merges them, reducing the overall computational load.

Facebook has developed a new method to significantly enhance AI transformer performance. The technique builds on the transformer architecture and has been tailored in particular for lengthy texts such as books, articles, and blogs. Its primary aim is to improve how transformers handle extended sequences, making them more efficient and effective. Preliminary results are encouraging and suggest the approach could improve transformer-based models across different tasks. The technique could benefit many natural language processing applications, including language translation, summarization, and Q&A systems. It may also pave the way for more advanced AI models capable of processing long, intricate texts.

Facebook has pioneered a novel technique to significantly elevate the efficiency of AI transformers

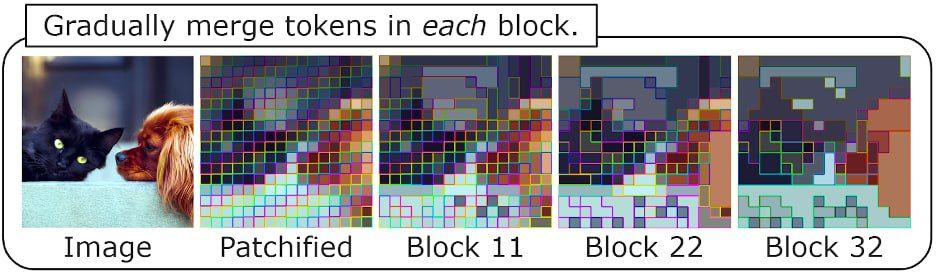

The technique identifies the most similar patches between processing blocks and merges them, reducing computational cost. The proportion of merged tokens is adjustable: higher values speed up processing but can reduce quality. Experimental data indicates that around 40% of tokens can be merged with a quality decline of only 0.1-0.4%, roughly doubling processing speed while also using less memory. The approach offers a promising way to ease the computational burden of image processing, enabling faster operation with little loss in output quality.
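The merging step described above can be illustrated with a minimal sketch. It is not Facebook's implementation. It assumes tokens are plain lists of floats, that similarity is measured with cosine similarity, and that tokens are split into two alternating sets so each token is merged at most once. The names `cosine` and `merge_similar_tokens` are hypothetical helpers introduced only for this example.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def merge_similar_tokens(tokens, ratio):
    """Merge roughly `ratio` of the tokens into their most similar partner.

    Illustrative assumption: tokens are split into two alternating sets,
    each token in the first set is matched to its most similar token in
    the second, and the best-matching pairs are averaged together.
    The original token order is not preserved in the result.
    """
    set_a, set_b = tokens[0::2], tokens[1::2]
    if not set_b:
        return [list(t) for t in tokens]
    # Number of tokens to merge away, capped by the size of the first set.
    r = min(int(len(tokens) * ratio), len(set_a))

    # For every token in A, find the index and score of its best match in B.
    matches = []
    for i, a in enumerate(set_a):
        j = max(range(len(set_b)), key=lambda k: cosine(a, set_b[k]))
        matches.append((cosine(a, set_b[j]), i, j))
    matches.sort(reverse=True)  # most similar pairs first

    merged_away = {i: j for _, i, j in matches[:r]}

    # Accumulate sums and counts so a merged token is the mean of its members.
    sums = [list(b) for b in set_b]
    counts = [1] * len(set_b)
    for i, j in merged_away.items():
        sums[j] = [s + x for s, x in zip(sums[j], set_a[i])]
        counts[j] += 1

    kept_a = [list(a) for i, a in enumerate(set_a) if i not in merged_away]
    merged_b = [[s / c for s in vec] for vec, c in zip(sums, counts)]
    return kept_a + merged_b


# Five toy "patches": two point right, two point up, one sits in between.
patches = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9], [1.0, 1.0]]
reduced = merge_similar_tokens(patches, ratio=0.4)
print(len(patches), "->", len(reduced))
```

Raising `ratio` removes more tokens per call, which mirrors the speed versus quality trade-off described above: fewer tokens mean less work for later blocks, but dissimilar tokens start to be averaged together.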

When the merged patches are visualized, it is evident that they 1) sit close to one another and 2) belong to the same object (shown as regions of the same color in the GIF). As a result, no significant detail is lost and the object remains within the model's focus. The later in processing the merging occurs, the more tokens can be combined, because representations at that stage encode higher-level abstractions that describe the image content.

Read more related news:

- Meta AI and Paperswithcode have rolled out the first 120B Galactica model, which has been trained on scientific literature to provide swifter and more accurate predictions. The mission of Galactica is to assist researchers in discerning key insights from extraneous information.
- Sam Altman predicts that the level of intelligence across the universe will double every 18 months.
- Meta is developing a cutting-edge AI platform aimed at bolstering medical research and revamping avatars.
