Deci has launched DeciCoder, an innovative generative AI foundational model for code generation in multiple programming languages.

In Brief

Deci's DeciCoder is a cutting-edge generative AI foundational model created to facilitate and accelerate the process of coding.

With 1 billion parameters and a context window of 2048 tokens, DeciCoder produces diverse, high-quality code snippets in multiple programming languages.

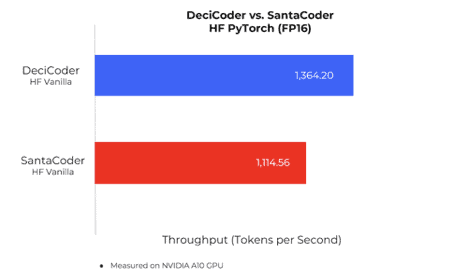

When it comes to performance, DeciCoder outmatches SantaCoder, delivering faster inference on less costly hardware while maintaining high accuracy.

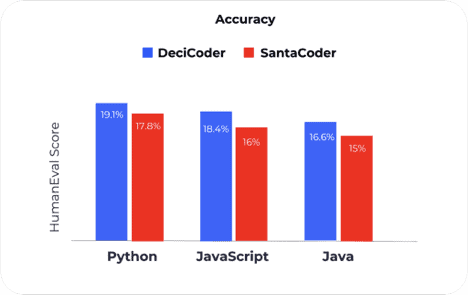

In terms of accuracy, it beats SantaCoder across the board on Python, JavaScript, and Java.

Deep learning company Deci has unveiled DeciCoder, a breakthrough generative AI foundational model that can generate code in various programming languages. The company reports that the model has 1 billion parameters and a substantial context window of 2048 tokens, allowing for high-quality and varied code generation.

According to Yonatan Geifman, CEO and co-founder of Deci, the cost of model inference is a considerable challenge for generative AI applications focused on code generation. These costs are driven mainly by model size, compute requirements, and the significant memory demands of powerful large language models (LLMs). As a result, fast generation often requires expensive, high-end hardware.

Geifman shared with Metaverse Post, "To combat these steep costs and lower inference expenses by four times, we need to develop more efficient models. These models must allow for rapid inference on budget-friendly hardware without compromising accuracy. That is precisely what DeciCoder accomplishes, making it a standout product."

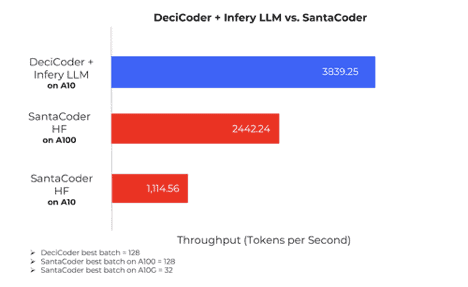

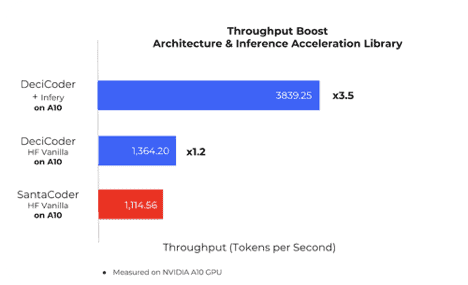

According to the company, DeciCoder running on NVIDIA's A10G, a more budget-friendly GPU, delivers faster inference than SantaCoder, the leading model in the 1-billion-parameter category, running on the pricier A100. Specifically, DeciCoder on the A10G is 3.5 times faster than SantaCoder on the same GPU and 1.6 times faster than SantaCoder on the A100.

Geifman stresses that DeciCoder also maintains exceptional accuracy across the three programming languages it was trained on: Python, JavaScript, and Java.

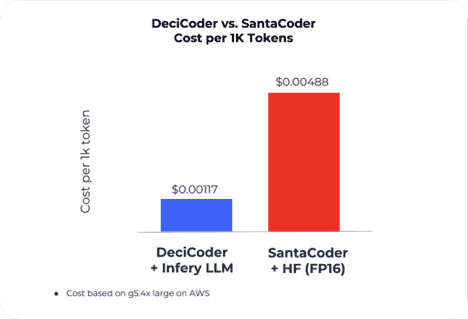

He added that using Deci’s Infery tool cuts inference costs significantly, achieving a 71.4% reduction in cost per 1,000 tokens compared with SantaCoder running on Hugging Face Inference Endpoints.

According to Geifman, DeciCoder enables businesses to lower their inference compute costs by moving code generation workloads to more affordable hardware without sacrificing speed or accuracy, or, alternatively, to generate more code in less GPU time.
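The article does not explain how the 71.4% figure was derived. As a hedged illustration of the underlying arithmetic only: when a GPU is billed by the hour, cost per token is the hourly price divided by throughput, so a throughput gain on the same hardware cuts cost by 1 - 1/speedup, and a 3.5x speedup works out to roughly 71.4%. This may or may not be how Deci calculated its number. The function names, prices, and throughputs below are hypothetical placeholders, not Deci's or any cloud provider's figures.

```python
# A minimal sketch of cost-per-token arithmetic. All prices and throughputs
# are hypothetical placeholders, not measured or published figures.


def cost_per_1k_tokens(hourly_price_usd: float, tokens_per_second: float) -> float:
    """Cost in USD to generate 1,000 tokens on an instance billed by the hour."""
    tokens_per_hour = tokens_per_second * 3600
    return hourly_price_usd / tokens_per_hour * 1000


def cost_reduction(baseline: float, new: float) -> float:
    """Fractional saving of `new` relative to `baseline` (0.714 means 71.4% cheaper)."""
    return 1 - new / baseline


if __name__ == "__main__":
    price = 1.00          # hypothetical USD per GPU-hour
    baseline_tps = 100.0  # hypothetical baseline throughput, tokens per second

    # Scenario 1: same GPU (same hourly price), 3.5x the throughput.
    same_gpu = cost_reduction(
        cost_per_1k_tokens(price, baseline_tps),
        cost_per_1k_tokens(price, 3.5 * baseline_tps),
    )
    print(f"3.5x throughput on the same GPU: {same_gpu:.1%} cheaper")

    # Scenario 2: a cheaper GPU (hypothetically 1/3 the hourly price)
    # that is 1.6x faster than the baseline running on the pricier GPU.
    cheaper_gpu = cost_reduction(
        cost_per_1k_tokens(price, baseline_tps),
        cost_per_1k_tokens(price / 3, 1.6 * baseline_tps),
    )
    print(f"1.6x throughput at 1/3 the price: {cheaper_gpu:.1%} cheaper")
```

With these placeholder values, the first scenario prints a 71.4% saving, which is simply 1 - 1/3.5. The second shows why moving to cheaper hardware can pay off even with a smaller speed advantage: the hourly price falls at the same time as throughput rises.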

In addition, the company says that running DeciCoder with Infery, Deci’s inference acceleration library, on an A10G GPU reduces its carbon footprint, cutting CO2 emissions by 324 kg per model instance per year relative to SantaCoder on identical hardware.

Pushing the Envelope in Code Generation with Remarkable Performance Metrics

Geifman elaborated that two key technological advancements contribute to DeciCoder’s superior performance and lower memory usage: its innovative model architecture and the use of Deci’s inference acceleration library.

"Deci's architecture is born from our proprietary Neural Architecture Search technology, AutoNAC, which has enabled the creation of several high-efficiency foundational models in both computer vision and natural language processing," he explained. "The model's inherent design lends DeciCoder enhanced throughput and accuracy. Although DeciCoder is based on the transformer architecture like SantaCoder and OpenAI's GPT models, it differentiates itself through its unique implementation of Grouped Query Attention (GQA)."

According to Deci, GPT-3, SantaCoder, and StarCoder use Multi-Query Attention instead of Multi-Head Attention to improve efficiency, but this risks lower quality and accuracy than Multi-Head Attention provides.

Deci says its implementation of GQA strikes a better balance between efficiency and accuracy than Multi-Query Attention, achieving similar efficiency with a notable improvement in accuracy.
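To make the distinction concrete, here is a minimal pure-Python sketch of grouped-query attention as a general technique, not Deci's implementation, whose details are not disclosed in the article. Each key/value (KV) head is shared by a group of query heads: with as many KV heads as query heads it reduces to Multi-Head Attention, and with a single KV head it becomes Multi-Query Attention. All function names and sizes are hypothetical choices for illustration.

```python
import math
import random


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def grouped_query_attention(queries, keys, values):
    """Causal grouped-query attention for a single sequence.

    queries: n_heads heads, each a list of seq_len vectors of length head_dim
    keys, values: n_kv_heads heads with the same per-head layout
    Query head h reads KV head h // (n_heads // n_kv_heads), so each group of
    consecutive query heads shares one KV head.
    n_kv_heads == n_heads is multi-head attention; n_kv_heads == 1 is multi-query.
    """
    n_heads, n_kv_heads = len(queries), len(keys)
    assert len(values) == n_kv_heads and n_heads % n_kv_heads == 0
    group_size = n_heads // n_kv_heads
    outputs = []
    for h, q_head in enumerate(queries):
        k_head, v_head = keys[h // group_size], values[h // group_size]
        head_dim = len(k_head[0])
        head_out = []
        for t, q in enumerate(q_head):
            # Causal mask: position t attends only to positions 0..t.
            scores = [
                sum(a * b for a, b in zip(q, k_head[s])) / math.sqrt(head_dim)
                for s in range(t + 1)
            ]
            weights = softmax(scores)
            head_out.append(
                [sum(w * v_head[s][i] for s, w in enumerate(weights)) for i in range(head_dim)]
            )
        outputs.append(head_out)
    return outputs


def kv_cache_elements(n_layers, n_kv_heads, head_dim, seq_len):
    """Values held in the KV cache: K and V for every layer, KV head, and position."""
    return 2 * n_layers * n_kv_heads * head_dim * seq_len


def random_heads(n, seq_len, dim):
    """n heads of seq_len random vectors, used as toy inputs."""
    return [[[random.gauss(0, 1) for _ in range(dim)] for _ in range(seq_len)] for _ in range(n)]


if __name__ == "__main__":
    random.seed(0)
    n_heads, seq_len, head_dim = 8, 4, 16  # hypothetical toy sizes
    queries = random_heads(n_heads, seq_len, head_dim)
    for label, n_kv in (("MHA", 8), ("GQA", 2), ("MQA", 1)):
        out = grouped_query_attention(
            queries,
            random_heads(n_kv, seq_len, head_dim),
            random_heads(n_kv, seq_len, head_dim),
        )
        # Cache size for a hypothetical 24-layer model with a 2,048-token context.
        cache = kv_cache_elements(24, n_kv, head_dim, 2048)
        print(f"{label}: {len(out)} output heads, {n_kv} KV heads, {cache:,} cached values")
```

The efficiency trade-off shows up in `kv_cache_elements`: cache size grows linearly with the number of KV heads, so sharing each KV head across a group of query heads shrinks the memory read at every generation step, while keeping more than one KV head is intended to retain some of Multi-Head Attention's quality.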

This gap is particularly visible when comparing DeciCoder with SantaCoder, both deployed on Hugging Face Inference Endpoints: DeciCoder achieves 22% higher throughput along with better accuracy, as illustrated in the accompanying charts.

Deci has announced that its LLM inference acceleration library, Infery, leverages cutting-edge proprietary engineering methods developed by the company's research and engineering teams to improve inference speeds.

The company asserts that these advancements lead to a further increase in throughput, applicable to any LLM, not just Deci's. Moreover, Infery is designed for ease of use, enabling developers to implement complex, advanced techniques with just a few lines of code.

Harnessing AutoNAC for the Ideal Combination of Precision and Speed

Geifman noted that, historically, the search for the "ideal" neural network architecture has been a laborious manual process. While this approach can yield results, it is time-consuming and may not identify the most efficient neural networks.

"The AI community has acknowledged the potential of Neural Architecture Search (NAS) as a revolutionary tool for automating the development of superior neural networks, but traditional NAS methods require substantial computational resources, limiting their use to a select few organizations with vast resources," Geifman told Metaverse Post.

Deci asserts that its AutoNAC technology streamlines NAS by providing a computationally efficient way to generate NAS-driven algorithms, making previously unattainable advances feasible.

The company describes AutoNAC as an algorithm that takes as input the dataset characteristics, model task, performance goals, and inference environment, and produces the neural network that best balances accuracy and inference speed according to those criteria.
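AutoNAC itself is proprietary, and the article does not describe how it searches. The toy below only illustrates the kind of selection criterion the company describes: given accuracy and latency measured for a task, dataset, and target inference environment, keep the candidates that offer the best trade-offs (the Pareto front) and pick the most accurate one that meets a speed goal. The `Candidate` class, function names, and numbers are hypothetical; a real NAS system generates and evaluates candidate architectures itself rather than choosing from a short hand-written list.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Candidate:
    name: str
    accuracy: float    # hypothetical validation accuracy for the target task
    latency_ms: float  # hypothetical latency on the target inference hardware


def pareto_front(candidates: Sequence[Candidate]) -> list:
    """Candidates that no other candidate beats on accuracy and latency at once."""
    def dominates(o: Candidate, c: Candidate) -> bool:
        no_worse = o.accuracy >= c.accuracy and o.latency_ms <= c.latency_ms
        strictly_better = o.accuracy > c.accuracy or o.latency_ms < c.latency_ms
        return no_worse and strictly_better

    return [c for c in candidates if not any(dominates(o, c) for o in candidates)]


def pick_architecture(
    candidates: Sequence[Candidate], latency_budget_ms: float
) -> Optional[Candidate]:
    """Most accurate candidate that meets the latency target, or None if none does."""
    feasible = [c for c in candidates if c.latency_ms <= latency_budget_ms]
    return max(feasible, key=lambda c: c.accuracy, default=None)


if __name__ == "__main__":
    # Hypothetical measurements for a few hand-written candidates.
    pool = [
        Candidate("wide-shallow", 0.71, 8.0),
        Candidate("narrow-deep", 0.74, 12.0),
        Candidate("baseline", 0.70, 15.0),
        Candidate("large", 0.78, 30.0),
    ]
    print([c.name for c in pareto_front(pool)])  # ['wide-shallow', 'narrow-deep', 'large']
    print(pick_architecture(pool, latency_budget_ms=15.0).name)  # narrow-deep
```

Changing the latency budget, or re-measuring latency on different hardware, changes which candidate wins, which is why the inference environment is one of the inputs the company lists.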

Beyond object-detection models such as YOLO-NAS, AutoNAC has already developed transformer-based models tailored for NLP tasks (DeciBert) and computer vision tasks (NAS SegFormer).

The company said the launch of DeciCoder is the first in a series of eagerly awaited releases showcasing Deci's generative AI capabilities, scheduled for the coming weeks.

Developers can now access DeciCoder and its pre-trained weights under the permissive Apache 2.0 License, which grants extensive usage rights and makes the model suitable for real-world commercial use.


Disclaimer

In line with the Trust Project guidelines, please keep in mind that the information presented on this page is not intended to serve as legal, tax, investment, financial, or any other type of advice. It is crucial to only invest amounts that you can afford to lose and seek independent financial counsel if you have any uncertainties. For further information, we recommend reviewing the terms and conditions along with the help sections provided by the issuer or advertiser. MetaversePost is committed to ensuring accurate and impartial reporting; however, market conditions may shift without prior notice.