ControlNet Enhances Your Ability to Create Flawless Hands Using Stable Diffusion 1.5

In Brief

ControlNet offers an intuitive way to refine Stable Diffusion.

It can be used to train additional control models that give finer control over Stable Diffusion (SD).

Being open source, ControlNet integrates with Stable Diffusion WebUIs to help you realize your creative vision.

A key hurdle for text-to-image AI generators has been the accurate rendering of hands. Despite the stunning quality of most images, hands often come out looking unnatural, with issues like extra fingers and oddly bent joints. Fortunately, the new ControlNet system is here to assist Stable Diffusion in producing remarkably realistic hands.

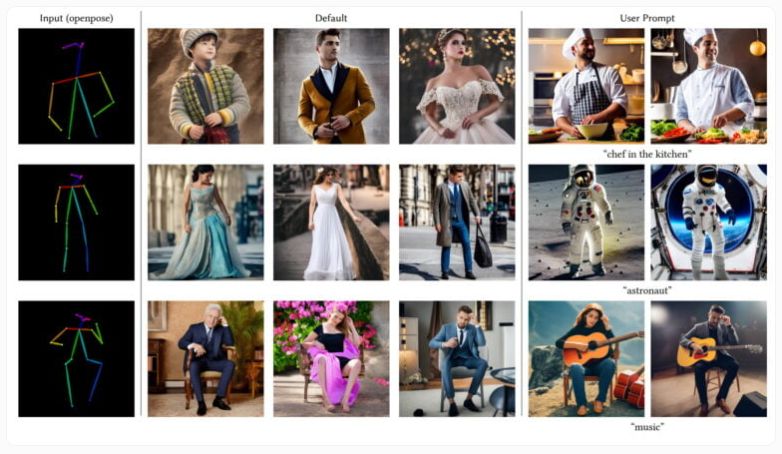

ControlNet is a significant advance: it lets you use sketches, outlines, and depth or normal maps to guide the neural network inside Stable Diffusion 1.5. With the right guidance, nearly perfect hands are now within reach on any custom 1.5 model, because ControlNet gives users precise control over the composition of their images.
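To make the idea of an "outline" conditioning image concrete, here is a minimal sketch, assuming NumPy and a grayscale image already scaled to [0, 1]. It computes a Sobel gradient-magnitude edge map with white lines on black, similar in spirit to the edge preprocessors used with ControlNet; it is a simplified stand-in, not the preprocessor ControlNet actually ships, and the function name `sobel_edge_map` and its `threshold` parameter are hypothetical.

```python
import numpy as np

def sobel_edge_map(gray: np.ndarray, threshold: float = 0.2) -> np.ndarray:
    """Return a uint8 edge map (255 = edge, 0 = background) from a [0, 1] grayscale image."""
    g = np.asarray(gray, dtype=np.float64)
    # Replicate the border so the output keeps the input's shape.
    p = np.pad(g, 1, mode="edge")
    # Horizontal Sobel response: right column minus left column (weights 1, 2, 1).
    gx = (p[:-2, 2:] + 2 * p[1:-1, 2:] + p[2:, 2:]) - (p[:-2, :-2] + 2 * p[1:-1, :-2] + p[2:, :-2])
    # Vertical Sobel response: bottom row minus top row (weights 1, 2, 1).
    gy = (p[2:, :-2] + 2 * p[2:, 1:-1] + p[2:, 2:]) - (p[:-2, :-2] + 2 * p[:-2, 1:-1] + p[:-2, 2:])
    magnitude = np.hypot(gx, gy)
    peak = magnitude.max()
    if peak == 0:
        # A perfectly uniform image has no edges at all.
        return np.zeros(g.shape, dtype=np.uint8)
    # Normalize and threshold: strong gradients become white "sketch" lines.
    return np.where(magnitude / peak >= threshold, 255, 0).astype(np.uint8)
```

Real edge detectors such as Canny add smoothing, non-maximum suppression, and hysteresis thresholding, which give thinner and cleaner lines than this sketch.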

To create convincing hands, use the ControlNet extension for the A1111 (AUTOMATIC1111) WebUI, in particular its Depth preprocessor and model. Start by taking a few close-up photos of your own hands and upload one to the ControlNet panel in the txt2img tab. Then write a simple, imaginative prompt such as 'fantasy artwork, Viking man displaying his hands up close' and generate. With some experimentation with the Depth settings in txt2img, you are likely to get strikingly realistic hands.
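The same workflow can also be scripted. The sketch below assumes the A1111 WebUI is running locally with its `--api` flag and the ControlNet extension installed, and builds a request for the `/sdapi/v1/txt2img` endpoint with one ControlNet unit. The `alwayson_scripts`, `controlnet`, and `args` nesting follows the extension's API examples as we understand them, but field names have changed between extension versions; the preprocessor name `depth_midas` and the model name `control_v11f1p_sd15_depth` are assumptions that must match what your installation lists, and both helper functions are hypothetical.

```python
import base64
import json
import urllib.request

def build_txt2img_payload(prompt: str, hand_photo: bytes,
                          module: str = "depth_midas",
                          model: str = "control_v11f1p_sd15_depth",
                          steps: int = 20) -> dict:
    """Build a txt2img request body with a single ControlNet unit (hypothetical helper)."""
    return {
        "prompt": prompt,
        "steps": steps,
        # SD 1.5 checkpoints are trained at 512x512, so stay near that size.
        "width": 512,
        "height": 512,
        "alwayson_scripts": {
            "controlnet": {
                "args": [
                    {
                        # The hand photo travels as a base64 string inside the JSON body.
                        "input_image": base64.b64encode(hand_photo).decode("ascii"),
                        # Preprocessor that estimates a depth map from the photo (assumed name).
                        "module": module,
                        # Installed ControlNet depth model; must match the WebUI's list exactly.
                        "model": model,
                    }
                ]
            }
        },
    }

def send_txt2img(payload: dict,
                 url: str = "http://127.0.0.1:7860/sdapi/v1/txt2img") -> list:
    """POST the payload and return the list of base64-encoded output images."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)["images"]
```

Passing the bytes of a hand photo and the Viking prompt to `build_txt2img_payload`, then the result to `send_txt2img`, should return the generated images, provided the server address and model names line up with your setup.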


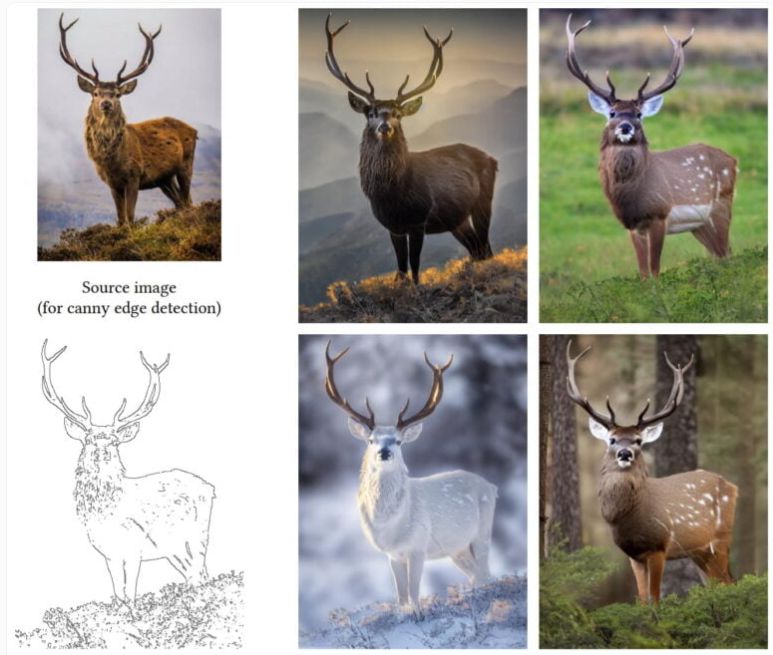

ControlNet's preprocessors convert any given image into a depth map, a normal map, or a sketch, which then guides generation. You can also upload your own depth maps or sketches, which gives you great flexibility when composing a 3D scene and lets you concentrate on the aesthetics and quality of the finished image.
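For readers who want to supply their own depth maps, the sketch below shows two conversions, assuming NumPy and a depth array in which larger values are farther from the camera. The first encodes depth as an 8-bit grayscale image with near surfaces bright, the convention of the MiDaS-style depth maps used with ControlNet's depth model; the second estimates a normal map from depth gradients. Both function names and the `scale` parameter are hypothetical, and normal-map sign and channel conventions differ between ControlNet normal models, so check which one your model expects.

```python
import numpy as np

def depth_to_control_image(depth: np.ndarray) -> np.ndarray:
    """Encode depth (larger = farther) as uint8 grayscale with the nearest point at 255."""
    d = np.asarray(depth, dtype=np.float64)
    near, far = d.min(), d.max()
    if far == near:
        # A perfectly flat scene carries no depth variation; return an all-black map.
        return np.zeros(d.shape, dtype=np.uint8)
    # Invert and normalize: 1.0 at the nearest point, 0.0 at the farthest.
    gray = (far - d) / (far - near)
    return np.round(gray * 255).astype(np.uint8)

def depth_to_normal_map(depth: np.ndarray, scale: float = 1.0) -> np.ndarray:
    """Estimate surface normals from depth and pack them into an RGB uint8 image.

    Axes: x to the right, y down the image, z toward the viewer. `scale` is the
    ratio of depth units to pixel spacing (a hypothetical relief-strength knob).
    """
    d = np.asarray(depth, dtype=np.float64) * scale
    # np.gradient returns the derivative along rows (y) first, then columns (x).
    dd_dy, dd_dx = np.gradient(d)
    # A surface that recedes toward +x faces +x, so the normal is (dd/dx, dd/dy, 1).
    normals = np.dstack((dd_dx, dd_dy, np.ones_like(d)))
    normals /= np.linalg.norm(normals, axis=2, keepdims=True)
    # Map each component from [-1, 1] to [0, 255].
    return np.round((normals + 1.0) * 0.5 * 255).astype(np.uint8)
```

A surface facing the camera head-on encodes as RGB (128, 128, 255), which is why flat regions of normal maps look uniformly light blue.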

We highly recommend the ControlNet tutorial recently published by Aitrepreneur. ControlNet dramatically improves control over image-to-image generation in Stable Diffusion.

Besides generating images from text, Stable Diffusion can also create images based on existing templates. This image-to-image workflow is often used to produce new visuals from a template image.
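The image-to-image idea can be illustrated with the forward-diffusion step it relies on: the template is partly noised and then denoised under the prompt. Below is a minimal sketch, assuming NumPy, a template already encoded as an array (Stable Diffusion works on VAE latents, not pixels), and the scaled-linear beta schedule of the SD 1.5 scheduler configuration (1,000 training steps, betas from 0.00085 to 0.012). Mapping `strength` linearly onto a single timestep is a simplification, and the function names are hypothetical.

```python
from typing import Optional

import numpy as np

def sd15_alphas_cumprod(num_train_timesteps: int = 1000,
                        beta_start: float = 0.00085,
                        beta_end: float = 0.012) -> np.ndarray:
    """Cumulative products of (1 - beta_t) for a scaled-linear beta schedule."""
    # "Scaled linear": interpolate linearly in sqrt(beta), then square.
    betas = np.linspace(beta_start ** 0.5, beta_end ** 0.5, num_train_timesteps) ** 2
    return np.cumprod(1.0 - betas)

def noise_template(x0: np.ndarray, strength: float, rng: np.random.Generator,
                   alphas_cumprod: Optional[np.ndarray] = None):
    """Noise a template to the timestep implied by `strength` (0 = keep, 1 = almost pure noise)."""
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be in [0, 1]")
    if alphas_cumprod is None:
        alphas_cumprod = sd15_alphas_cumprod()
    t = int(round(strength * (len(alphas_cumprod) - 1)))
    a_bar = alphas_cumprod[t]
    eps = rng.standard_normal(np.shape(x0))
    # Closed-form forward diffusion: x_t = sqrt(a_bar) * x0 + sqrt(1 - a_bar) * eps.
    x_t = np.sqrt(a_bar) * np.asarray(x0, dtype=np.float64) + np.sqrt(1.0 - a_bar) * eps
    return x_t, t
```

A sampler then denoises `x_t` from step `t` back to 0 under the text prompt: a low strength keeps most of the template's structure, while a high strength keeps little of it.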

Although Stable Diffusion 2.0 introduced the ability to use depth information from images as a structural guide, the degree of control it offers is still limited. The older version 1.5 remains widely favored, largely because of the vast number of custom models built on it.

ControlNet copies the weights of Stable Diffusion's network blocks into a locked copy and a trainable copy. The locked copy preserves the original diffusion model's generation capabilities, while the trainable copy learns new conditions for image synthesis through fine-tuning on small datasets.
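The locked/trainable arrangement can be sketched with toy NumPy layers. This follows the single-block formulation in the ControlNet paper ("Adding Conditional Control to Text-to-Image Diffusion Models"), where the trainable copy is connected through "zero convolutions", 1×1 layers whose weights and biases start at zero. The dense layer standing in for a real U-Net block, and all names here, are hypothetical simplifications.

```python
import numpy as np

def block(x: np.ndarray, w: np.ndarray) -> np.ndarray:
    """Toy stand-in for one network block: a dense layer followed by ReLU."""
    return np.maximum(x @ w, 0.0)

class ControlledBlock:
    """Locked block plus a trainable copy joined by zero-initialized 1x1 layers."""

    def __init__(self, w_pretrained: np.ndarray):
        dim_in, dim_out = w_pretrained.shape
        self.w_locked = w_pretrained.copy()     # frozen: keeps the original behaviour
        self.w_trainable = w_pretrained.copy()  # fine-tuned on the new condition
        # "Zero convolutions": on per-position features a 1x1 conv is a matrix multiply.
        self.z_in_w, self.z_in_b = np.zeros((dim_in, dim_in)), np.zeros(dim_in)
        self.z_out_w, self.z_out_b = np.zeros((dim_out, dim_out)), np.zeros(dim_out)

    def forward(self, x: np.ndarray, condition: np.ndarray) -> np.ndarray:
        locked_out = block(x, self.w_locked)
        # The condition enters through the first zero layer, then the trainable copy.
        control = block(x + condition @ self.z_in_w + self.z_in_b, self.w_trainable)
        # The second zero layer scales what gets added back to the locked output.
        return locked_out + control @ self.z_out_w + self.z_out_b
```

Because both zero layers output zero at initialization, the combined block reproduces the locked model exactly before training, so fine-tuning on a small dataset starts from the original model's behavior instead of disturbing it.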

The A1111 extension works with all ControlNet models, giving much finer control over the generative process. The ControlNet team showcases a range of samples, including people in diverse poses, interior scenes whose layout follows the input structure, and various bird images.
