Top 10 Graphics Cards for ML/AI: Leading GPUs for Deep Learning

Selecting the right graphics card is pivotal for achieving peak performance when processing massive datasets and running parallel computations. This is especially true for training deep neural networks, which relies heavily on matrix and tensor operations. Specialized AI chips, such as TPUs and FPGAs, have also grown noticeably more popular lately.

Essential Attributes for Machine Learning Graphics Cards

When it comes to choosing a graphics card for machine learning applications, you should consider several vital attributes:

- Computing Power: The number of cores or processors directly determines how well the graphics card handles parallel processing. More cores generally mean faster, more efficient computations.

- GPU Memory Capacity: Sufficient memory is critical for handling large datasets and complex models. Efficient data storage and access are key to reaching optimal performance.

- Support for Specialized Libraries: Compatibility with specialized libraries such as CUDA or ROCm can dramatically speed up model training and execution. Hardware-optimized features streamline computations and improve overall efficiency.

- High-Performance Support: Graphics cards with fast memory and wide memory buses deliver excellent performance during model training, keeping data processing quick and uninterrupted.

- Framework Compatibility for Machine Learning: Make sure the chosen graphics card works smoothly with the machine learning frameworks and development tools you use. Compatibility is key to seamless integration and full use of the hardware.
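
To make the memory-capacity point concrete, here is a rough back-of-the-envelope estimator. It assumes mixed-precision training with the Adam optimizer, for which a common rule of thumb is about 16 bytes per parameter: FP16 weights and gradients, FP32 master weights, and two FP32 optimizer states. Activations are ignored, so real usage will be higher. The function names and the 16-byte figure are illustrative assumptions, not vendor specifications.

```python
# Rough GPU memory estimate for training, excluding activations.
# Assumption: mixed-precision training with Adam, using the common
# rule of thumb of ~16 bytes per parameter:
#   2 (FP16 weights) + 2 (FP16 gradients) + 4 (FP32 master weights)
#   + 4 (Adam first moment) + 4 (Adam second moment)
BYTES_PER_PARAM_MIXED_ADAM = 2 + 2 + 4 + 4 + 4


def training_memory_gb(num_params: float,
                       bytes_per_param: int = BYTES_PER_PARAM_MIXED_ADAM) -> float:
    """Approximate memory for model states in GB (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


def fits_on_card(num_params: float, card_memory_gb: float) -> bool:
    """True if the model states alone fit in the card's memory."""
    return training_memory_gb(num_params) <= card_memory_gb


if __name__ == "__main__":
    # Hypothetical model sizes checked against cards from this article.
    print(training_memory_gb(1e9))   # 1B parameters -> 16.0 GB of model states
    print(fits_on_card(1e9, 24))     # fits on a 24 GB card
    print(fits_on_card(7e9, 80))     # 7B parameters need ~112 GB, more than 80 GB
```

Because activations, the CUDA context, and fragmentation all add to this figure, treat the result as a lower bound when comparing it with the memory column of the chart.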

Comparison Chart for Graphics Cards in ML/AI

The NVIDIA Tesla V100 is a formidable Tensor Core GPU engineered specifically for AI and machine learning tasks as well as High Performance Computing (HPC). Utilizing the groundbreaking Volta architecture, this graphics card provides exceptional performance, achieving a staggering 125 trillion floating-point operations per second (TFLOPS). In the following sections, we'll delve into the significant advantages and factors to consider regarding the Tesla V100.

| Graphics Card | Memory, GB | CUDA Cores | Tensor Cores | Price, USD |

|---|---|---|---|---|

| Tesla V100 | 16/32 | 5120 | 640 | 14,999 |

| Tesla A100 | 40/80 | 7936 | 432 | 10,499 |

| Quadro RTX 8000 | 48 | 4608 | 576 | 7,999 |

| A 6000 Ada | 48 | 18176 | 568 | 6,499 |

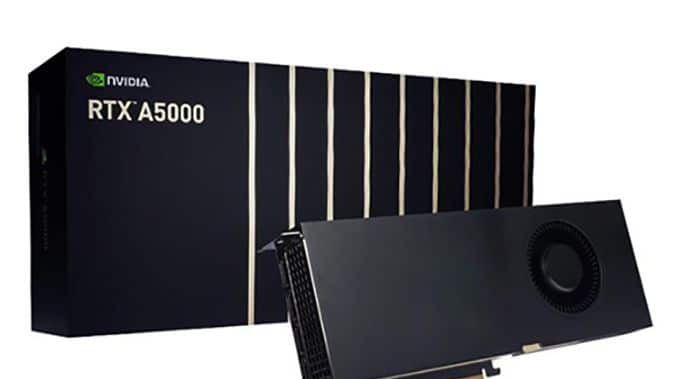

| RTX A 5000 | 24 | 8192 | 256 | 1,899 |

| RTX 3090 TI | 24 | 10752 | 336 | 1,799 |

| RTX 4090 | 24 | 16384 | 512 | 1,499 |

| RTX 3080 TI | 12 | 10240 | 320 | 1,399 |

| RTX 4080 | 16 | 9728 | 304 | 1,099 |

| RTX 4070 | 12 | 7680 | 184 | 599 |

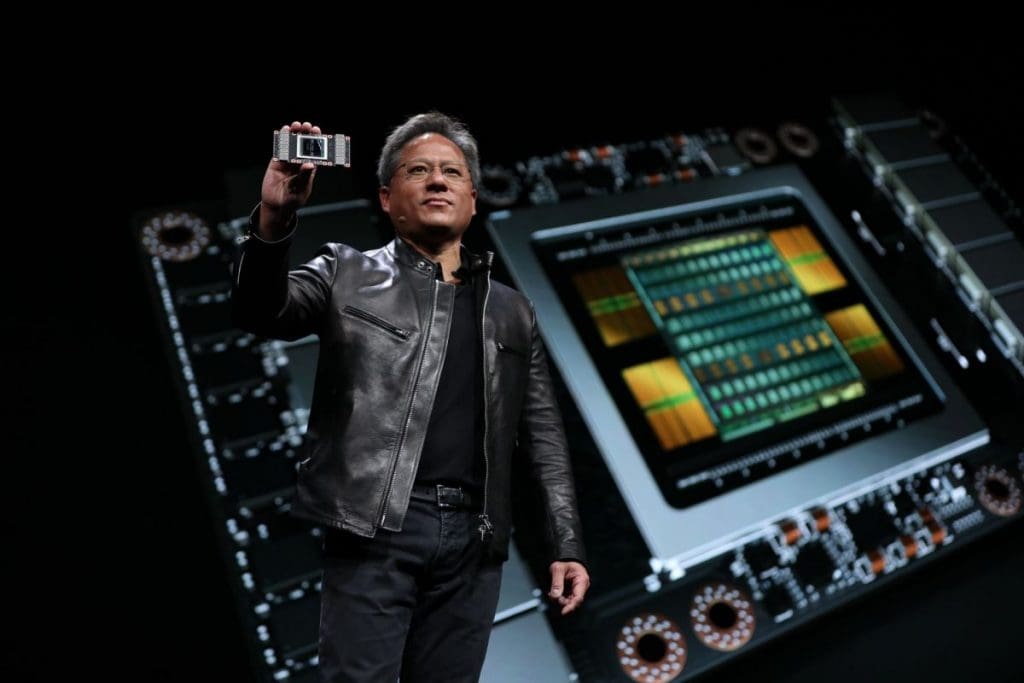
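
One quick way to read the chart is to normalize price by memory and by Tensor Core count. The sketch below does that using the memory, Tensor Core, and price columns from the chart. For cards sold in two memory sizes it assumes the smaller size. The dictionary layout and function names are illustrative choices, not part of any library.

```python
# Price per GB of memory and per Tensor Core, from the comparison chart.
# Assumption: for cards offered in two memory sizes, the smaller size is used.
CARDS = {
    # name: (memory_gb, tensor_cores, price_usd)
    "Tesla V100": (16, 640, 14_999),
    "Tesla A100": (40, 432, 10_499),
    "Quadro RTX 8000": (48, 576, 7_999),
    "RTX A6000 Ada": (48, 568, 6_499),
    "RTX A5000": (24, 256, 1_899),
    "RTX 3090 TI": (24, 336, 1_799),
    "RTX 4090": (24, 512, 1_499),
    "RTX 3080 TI": (12, 320, 1_399),
    "RTX 4080": (16, 304, 1_099),
    "RTX 4070": (12, 184, 599),
}


def price_per_gb(name: str) -> float:
    memory_gb, _, price = CARDS[name]
    return price / memory_gb


def rank_by_price_per_gb() -> list[str]:
    """Card names sorted from cheapest to most expensive per GB of memory."""
    return sorted(CARDS, key=price_per_gb)


if __name__ == "__main__":
    for name in rank_by_price_per_gb():
        memory_gb, tensor_cores, price = CARDS[name]
        print(f"{name:16s} ${price / memory_gb:8.2f}/GB  "
              f"${price / tensor_cores:7.2f}/Tensor Core")
```

With these list prices, the RTX 4070 is the cheapest per GB and the Tesla V100 the most expensive. The metric ignores memory bandwidth, Tensor Core generation, and NVLink support, so it is only one input among several.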

NVIDIA Tesla V100

The NVIDIA Tesla V100 is a Tensor Core GPU engineered for AI, machine learning, and High Performance Computing (HPC). Built on the Volta architecture, it delivers up to 125 trillion floating-point operations per second (TFLOPS) of deep learning (Tensor Core) performance. Below we look at its main advantages and the factors to weigh before buying one.

Pros of Tesla V100:

- High Performance: With the Volta architecture and 5120 CUDA cores, the Tesla V100 performs very well on machine learning workloads. Its ability to handle large datasets and run complex calculations quickly is critical for efficient machine learning operations.

- Large Memory Capacity: With 16 GB (or 32 GB) of HBM2 memory, the Tesla V100 handles large data volumes efficiently during model training, which is especially useful for large datasets. Its 4096-bit memory bus also enables fast data transfer between the processor and memory, speeding up both training and inference.

- Deep Learning Technologies: The Tesla V100 includes deep learning features such as Tensor Cores, which significantly accelerate floating-point matrix calculations and substantially reduce model training time.

- Flexibility and Scalability: The Tesla V100 works in both desktop and server environments and integrates with a variety of machine learning frameworks, including TensorFlow, PyTorch, and Caffe, so developers can keep using their preferred tools for building and training models.

Considerations for Tesla V100:

- High Cost: As a high-end solution, the Tesla V100 costs about $14,447, which can be a considerable expense for individuals or small machine learning teams. Weigh this cost carefully against your overall budget and project needs.

- Power Consumption and Cooling: The Tesla V100 requires an adequate power supply and generates considerable heat. Sufficient cooling is needed to keep it at safe operating temperatures, which adds to energy use and running costs.

- Infrastructure Requirements: To take full advantage of the Tesla V100, the host system needs a capable processor and enough RAM.

Conclusion:

With its Volta architecture, Tensor Cores, ample memory, and flexibility, the Tesla V100 is an attractive choice for organizations and researchers working on advanced machine learning projects. Its cost, power consumption, and infrastructure requirements, however, need careful scrutiny to make sure the investment pays off.
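
The two headline figures for the Tesla V100, 125 TFLOPS and a 4096-bit memory bus, can be sanity-checked with simple arithmetic. This is a rough derivation under stated assumptions, not NVIDIA's official method. It assumes the SXM2 version's boost clock of about 1.53 GHz and that each of the 640 Tensor Cores performs 64 fused multiply-add (FMA) operations per clock, with one FMA counted as two floating-point operations. It also assumes an HBM2 data rate of about 1.75 Gbit/s per pin.

$$
\text{Peak Tensor throughput} \approx 640 \times 64\,\tfrac{\text{FMA}}{\text{clock}} \times 2\,\tfrac{\text{FLOP}}{\text{FMA}} \times 1.53 \times 10^{9}\,\tfrac{\text{clock}}{\text{s}} \approx 125 \times 10^{12}\,\tfrac{\text{FLOP}}{\text{s}}
$$

$$
\text{Memory bandwidth} \approx \frac{4096 \times 1.75 \times 10^{9}\,\text{bit/s}}{8\,\text{bit/byte}} \approx 896\,\tfrac{\text{GB}}{\text{s}}
$$

The 125 TFLOPS figure therefore describes mixed-precision Tensor Core throughput. Standard FP32 throughput on the same card is far lower, at roughly 15.7 TFLOPS.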

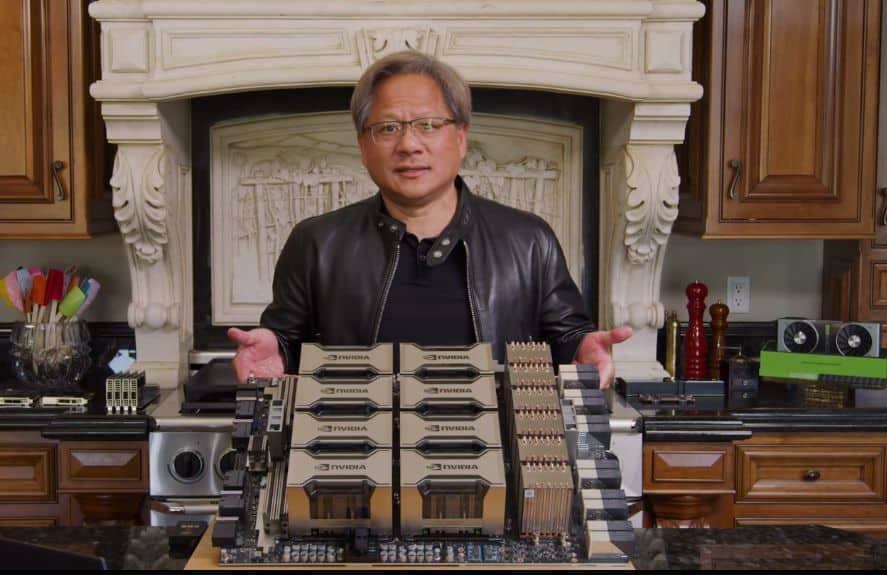

NVIDIA Tesla A100

The NVIDIA A100, built on the Ampere architecture, is a graphics card designed for demanding machine learning workloads. Its performance and versatility mark a significant step forward in GPU technology. Below are its key advantages and the factors to consider before adopting it.

Pros of NVIDIA A100:

- High Performance: With 6912 CUDA cores, the NVIDIA A100 offers exceptional computing power that speeds up machine learning workloads and shortens both model training and inference.

- Large Memory Capacity: The A100 comes with 40 GB of HBM2 memory (an 80 GB version is also available), enough to process large, complex datasets smoothly during training.

- Support for NVLink Technology: NVLink lets several A100 cards be combined efficiently in a single system for parallel computing. The added parallelism improves performance and speeds up model training.

Considerations for NVIDIA A100:

- High Cost: As one of the most powerful graphics cards on the market, the NVIDIA A100 costs around $10,000, a significant investment for individuals or companies.

- Power Consumption: Getting the most out of the A100 requires a substantial power supply. This raises energy use and calls for careful power management, especially in large-scale deployments.

- Software Compatibility: The A100 needs appropriate software and drivers to reach optimal performance. Some machine learning applications and frameworks may not be fully optimized for this model, so check compatibility before integrating the A100 into existing workflows.

Conclusion:

The NVIDIA A100 represents a substantial advance in GPU technology for machine learning. Its performance, large memory, and NVLink support let data scientists and researchers tackle complex data processing and model training tasks efficiently. Its high cost, power requirements, and software compatibility should nonetheless be weighed carefully before adopting it.
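
NVLink matters most for data-parallel training. In that setup, each GPU computes gradients on its own slice of the batch, and the gradients are then averaged (an all-reduce) over the interconnect. The pure-Python sketch below simulates that idea on a hypothetical toy linear model with a squared-error loss. The "devices" are just list shards, and the model, data, and function names are illustrative. The sketch shows that averaging per-shard gradients over equal-sized shards reproduces the full-batch gradient. Splitting a batch across cards therefore changes how fast the step runs, not what it computes.

```python
# Simulated data-parallel step for a toy linear model y = w * x with mean
# squared error. Each "device" gets an equal shard of the batch, computes a
# local gradient, and the local gradients are averaged, mimicking an
# all-reduce over an interconnect such as NVLink. No GPU is involved.


def mse_grad(w: float, xs: list[float], ys: list[float]) -> float:
    """d/dw of mean((w*x - y)^2) over the given samples."""
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)


def shard(data: list[float], num_devices: int) -> list[list[float]]:
    """Split data into num_devices equal, contiguous shards."""
    size, remainder = divmod(len(data), num_devices)
    if remainder:
        raise ValueError("batch size must be divisible by num_devices")
    return [data[i * size:(i + 1) * size] for i in range(num_devices)]


def data_parallel_grad(w: float, xs: list[float], ys: list[float],
                       num_devices: int) -> float:
    """Average of the per-device gradients (the 'all-reduce' step)."""
    local = [mse_grad(w, x_shard, y_shard)
             for x_shard, y_shard in zip(shard(xs, num_devices),
                                         shard(ys, num_devices))]
    return sum(local) / num_devices


if __name__ == "__main__":
    xs = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
    ys = [2.0 * x + 0.5 for x in xs]  # hypothetical targets
    print(mse_grad(1.0, xs, ys), data_parallel_grad(1.0, xs, ys, num_devices=4))
```

Real frameworks perform the averaging step with collective communication libraries. The faster the link between cards, the less time each training step spends waiting on that exchange.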

NVIDIA Quadro RTX 8000

The Quadro RTX 8000 is a powerful graphics card built for professionals who need superior rendering capabilities. Its advanced features and high-end specifications suit a range of applications, from data visualization and computer graphics to machine learning. This section covers its main features and benefits.

Pros of Quadro RTX 8000:

- High Performance: With 4608 CUDA cores, the Quadro RTX 8000 provides ample power for demanding rendering workloads. Professionals can create intricate models with realistic shadows, reflections, and refractions.

- Ray Tracing Support: One of the card's key features is hardware-accelerated ray tracing, which produces photorealistic images and dynamic lighting effects. For data visualization and computer graphics work, this noticeably improves the realism and visual quality of results.

- Large Memory Capacity: With 48 GB of GDDR6 memory, the Quadro RTX 8000 handles large machine learning models and datasets efficiently. Professionals can run complex computations on large volumes of data without sacrificing performance.

- Library and Framework Support: The Quadro RTX 8000 works with popular machine learning libraries and frameworks such as TensorFlow, PyTorch, CUDA, and cuDNN, so it integrates easily into existing workflows.

Considerations for Quadro RTX 8000:

- High Cost: Among high-performance graphics accelerators, the Quadro RTX 8000 sits at the pricier end, retailing at around $8,200. This may deter individual users and smaller companies.

Conclusion:

The Quadro RTX 8000 sets a high standard for professional graphics rendering. Its GPU, ray tracing support, large memory, and compatibility with mainstream machine learning tools let users produce realistic models, detailed visualizations, and simulations. Its price may be a barrier for some, but it is a valuable tool for professionals who need top performance and plenty of memory across data visualization, computer graphics, and machine learning.
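
A large memory pool such as the Quadro RTX 8000's 48 GB mainly buys room for bigger batches or bigger models. The sketch below estimates the largest batch that fits once the model states are loaded. The per-sample activation size and the reserved headroom are hypothetical inputs you would measure for your own model. They are not properties of the card.

```python
def max_batch_size(card_memory_gb: float,
                   model_states_gb: float,
                   activation_gb_per_sample: float,
                   reserved_gb: float = 1.0) -> int:
    """Largest batch whose activations fit in the memory left over.

    card_memory_gb: total GPU memory (e.g. 48 for a Quadro RTX 8000).
    model_states_gb: weights + gradients + optimizer state.
    activation_gb_per_sample: measured activation memory per sample (assumption).
    reserved_gb: headroom for the framework and CUDA context (assumption).
    """
    if activation_gb_per_sample <= 0:
        raise ValueError("activation_gb_per_sample must be positive")
    free_gb = card_memory_gb - model_states_gb - reserved_gb
    if free_gb <= 0:
        return 0
    return int(free_gb // activation_gb_per_sample)


if __name__ == "__main__":
    # Hypothetical model: 8 GB of model states, 0.5 GB of activations per sample.
    for memory_gb in (12, 24, 48):
        print(memory_gb, "GB ->", max_batch_size(memory_gb, 8.0, 0.5), "samples")
```

Under these assumed numbers, going from 12 GB to 48 GB of memory raises the maximum batch from 6 to 78 samples. The model states take the same fixed share of every card.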

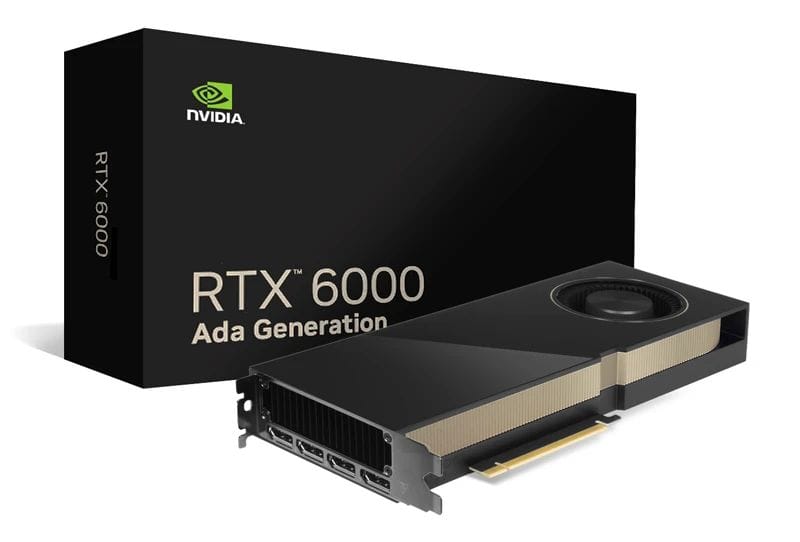

NVIDIA RTX A6000 Ada

For professionals who want a powerful graphics solution that is also energy efficient, the RTX A6000 Ada is an attractive choice. Its Ada Lovelace architecture, high-performance CUDA cores, and generous VRAM make it useful for a wide range of professional applications. This section examines its main characteristics and advantages.

Pros of RTX A6000 Ada:

- High Performance: Built on the Ada Lovelace architecture, the RTX A6000 Ada combines third-generation RT Cores, fourth-generation Tensor Cores, and next-generation CUDA cores, letting professionals handle demanding tasks with relative ease.

- Large Memory Capacity: With 48 GB of memory, the RTX A6000 Ada can process very large datasets and complex machine learning models without sacrificing speed or efficiency, which shortens model training.

- Low Power Consumption: The card delivers this performance with a maximum power draw of 300 W, which is modest for a workstation card with 48 GB of memory.

Considerations for RTX A6000 Ada:

- High Cost: At about $6,499, the RTX A6000 Ada is a significant investment, especially for individual users and small teams.

Conclusion:

The RTX A6000 Ada pairs the Ada Lovelace architecture with 48 GB of memory and relatively low power consumption. It is a strong option for professionals who train large models on a workstation, provided the price fits their budget.

NVIDIA RTX A5000

The NVIDIA RTX A5000 is an Ampere-based workstation card that balances performance, memory, and price for professional machine learning work.

Pros of RTX A5000:

- High Performance: With 8192 CUDA cores, the RTX A5000 provides strong parallel processing power for training and inference.

- AI Hardware Acceleration Support: Its 256 Tensor Cores accelerate the matrix operations at the heart of deep learning, particularly with mixed-precision training.

- Large Memory Capacity: 24 GB of GDDR6 memory is enough for many mid-sized models and datasets.

- Machine Learning Framework Support: As a CUDA-capable NVIDIA GPU, it works with major frameworks such as TensorFlow and PyTorch.

Considerations for RTX A5000:

- Power Consumption and Cooling: The card's 230 W maximum power draw is moderate, but multi-GPU workstations still need a suitable power supply and adequate airflow to keep temperatures in check.

Conclusion:

At about $1,899, the RTX A5000 gives professionals workstation-class features and 24 GB of memory for far less than data-center GPUs such as the Tesla V100 and A100.

NVIDIA RTX 4090

The NVIDIA RTX 4090 is the flagship of the GeForce RTX 40 series. Built on the Ada Lovelace architecture, it is the most powerful consumer card in this comparison.

Pros of the NVIDIA RTX 4090:

- Outstanding Performance: With 16,384 CUDA cores, 512 fourth-generation Tensor Cores, and 24 GB of GDDR6X memory, the RTX 4090 has the highest core counts among the GeForce cards in this list.

Considerations for the NVIDIA RTX 4090:

- Cooling Challenges: The card can draw up to 450 W, so it needs a robust power supply and a well-ventilated case. Its large cooler can also make multi-card builds difficult.

- Configuration Limitations: The RTX 4090 does not support NVLink, so multi-GPU setups must exchange data over the slower PCIe bus.

Conclusion:

At about $1,499, the RTX 4090 offers top-tier performance for individual researchers and small teams. Its 24 GB memory limit, high power draw, and lack of NVLink make it less suited to the largest models and to multi-GPU training.
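
To see why NVLink support matters for multi-GPU training, the sketch below estimates how long it takes to move one copy of a model's gradients between cards. The bandwidth figures are rough per-direction assumptions: about 32 GB/s for a PCIe 4.0 x16 link, which cards without NVLink such as the RTX 4090 rely on, and about 300 GB/s for the A100's NVLink (600 GB/s total in both directions). Latency, real all-reduce traffic patterns, and overlap with computation are ignored, so treat the results as orders of magnitude only.

```python
# Back-of-the-envelope time to transfer one full set of gradients.
# Bandwidths are rough per-direction assumptions, not measurements.
LINK_BANDWIDTH_GB_S = {
    "PCIe 4.0 x16": 32.0,
    "NVLink (A100)": 300.0,
}


def gradient_bytes(num_params: float, bytes_per_grad: int = 2) -> float:
    """Size of one gradient copy; 2 bytes per value assumes FP16 gradients."""
    return num_params * bytes_per_grad


def transfer_ms(num_bytes: float, bandwidth_gb_s: float) -> float:
    """Idealized transfer time in milliseconds (1 GB = 1e9 bytes)."""
    return num_bytes / (bandwidth_gb_s * 1e9) * 1e3


if __name__ == "__main__":
    size = gradient_bytes(1e9)  # hypothetical 1-billion-parameter model: 2 GB
    for link, bandwidth in LINK_BANDWIDTH_GB_S.items():
        print(f"{link:14s} {transfer_ms(size, bandwidth):7.1f} ms per gradient copy")
```

Under these assumptions, moving 2 GB of gradients takes about 62.5 ms over PCIe versus under 7 ms over NVLink. Every training step repeats that exchange.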

NVIDIA RTX 4080

The NVIDIA RTX 4080 is an Ada Lovelace card that sits one step below the RTX 4090, trading some performance and memory for a lower price.

Pros of the RTX 4080:

- High Performance: With 9,728 CUDA cores, 304 Tensor Cores, and 16 GB of GDDR6X memory, the RTX 4080 handles most small and mid-sized training and inference workloads well.

- Competitive Pricing: At about $1,099, it costs considerably less than workstation and data-center GPUs.

Considerations for the RTX 4080:

- SLI Limitation: The RTX 4080 supports neither SLI nor NVLink, so multiple cards can communicate only over PCIe.

Conclusion:

The RTX 4080 is a sensible middle ground for individuals who need strong performance at a moderate price, as long as 16 GB of memory and single-card or PCIe-only multi-GPU setups meet their needs.

NVIDIA RTX 4070

The NVIDIA RTX 4070 is the most affordable card in this list and a practical entry point for machine learning on a budget.

Pros of the NVIDIA RTX 4070:

- High Performance: Its Ada Lovelace architecture, 5,888 CUDA cores, and 184 fourth-generation Tensor Cores deliver solid performance for smaller models and experimentation.

- Low Power Consumption: With a total board power of around 200 W, it is easy to power and cool in a standard desktop.

- Cost-Effective Solution: At about $599, it has the lowest price of the cards compared here.

Considerations for the NVIDIA RTX 4070:

- Limited Memory Capacity: Its 12 GB of GDDR6X memory restricts the size of the models and batches it can handle, especially during training.

Conclusion:

The RTX 4070 is a good choice for students, hobbyists, and developers who want to learn and prototype on a tight budget. Larger models, however, will quickly run into its 12 GB memory limit.

NVIDIA GeForce RTX 3090 TI

The NVIDIA GeForce RTX 3090 Ti is the top card of the Ampere-based GeForce RTX 30 series. Its large memory keeps it a strong option for machine learning.

Pros of the NVIDIA GeForce RTX 3090 TI:

- High Performance: With 10,752 CUDA cores, the RTX 3090 Ti provides substantial parallel processing power for training and inference.

- Hardware Learning Acceleration: Its 336 third-generation Tensor Cores accelerate the matrix operations used in deep learning.

- Large Memory Capacity: With 24 GB of GDDR6X memory, it can train larger models and use bigger batches than most consumer cards.

Considerations for the NVIDIA GeForce RTX 3090 TI:

- Power Consumption: The card can draw up to 450 W, which requires a high-capacity power supply and good cooling.

- Compatibility and Support: Check that your case, power supply, and power connectors can accommodate this large, power-hungry card. As a consumer GeForce product, it also lacks the enterprise support options of NVIDIA's professional GPUs.

Conclusion:

At about $1,799, the RTX 3090 Ti offers 24 GB of memory and strong performance for less than workstation cards. However, the newer RTX 4090 in this comparison has more cores, the same 24 GB of memory, and a lower listed price.
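
Power draw translates directly into running costs, which matters for cards that can pull 450 W such as the RTX 3090 Ti and RTX 4090. The sketch below converts a constant power draw into a monthly electricity bill. The round-the-clock duty cycle, the 30-day month, and the $0.15 per kWh price are illustrative assumptions. The estimate also ignores the rest of the system and any cooling overhead.

```python
def monthly_energy_cost(power_watts: float,
                        hours_per_day: float = 24.0,
                        days: int = 30,
                        price_per_kwh: float = 0.15) -> float:
    """Electricity cost in dollars for a GPU at a constant power draw.

    All defaults are illustrative assumptions; substitute your own duty
    cycle and local electricity price.
    """
    kwh = power_watts / 1000.0 * hours_per_day * days
    return kwh * price_per_kwh


if __name__ == "__main__":
    # 0.45 kW * 720 h = 324 kWh, or roughly $48.60 at $0.15 per kWh.
    print(round(monthly_energy_cost(450), 2))
```

Doubling the number of cards or the electricity price doubles the result. For a multi-GPU build, add the CPU, memory, and cooling load before comparing it with the card's purchase price.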

NVIDIA GeForce RTX 3080 TI

The NVIDIA GeForce RTX 3080 Ti is an Ampere-based consumer card that offers strong performance at a lower price than the RTX 3090 Ti.

Pros of the NVIDIA GeForce RTX 3080 TI:

- Powerful Performance: With 10,240 CUDA cores, the RTX 3080 Ti comes close to the RTX 3090 Ti's 10,752 in raw parallel processing power.

- Hardware Learning Acceleration: Its 320 Tensor Cores speed up the matrix operations used in deep learning training and inference.

- Relatively Affordable Price: At about $1,399, it costs less than any of the 24 GB cards in this comparison.

- Ray Tracing and DLSS Support: Its RT Cores and Tensor Cores also support ray tracing and DLSS, which is useful if the card doubles as a graphics or gaming GPU.

Considerations for the NVIDIA GeForce RTX 3080 TI:

- Limited Memory: Its 12 GB of GDDR6X memory limits the size of the models and batches it can handle.

Conclusion:

The RTX 3080 Ti is a capable, relatively affordable card for small and mid-sized models. For larger workloads, however, the 24 GB cards are a better fit than its 12 GB of memory.

Wrap It Up

Choosing a GPU for machine learning comes down to model size, budget, and infrastructure. Data-center cards such as the Tesla V100 and A100 combine Tensor Core performance with fast HBM2 memory and NVLink, but they are expensive and demand substantial power and cooling.

Workstation cards pair large memory with professional features. The Quadro RTX 8000 offers 48 GB of GDDR6, works with TensorFlow, PyTorch, CUDA, and cuDNN, and sells for around $8,200. The energy-efficient RTX A6000 Ada combines the Ada Lovelace architecture, including third-generation RT Cores and fourth-generation Tensor Cores, with 48 GB of VRAM.

Consumer cards such as the RTX 4090, 4080, 4070, 3090 Ti, and 3080 Ti deliver strong performance at a fraction of the price. Their main constraint is memory capacity, which ranges from 12 to 24 GB.