The ability of AI to pull up private images raises significant privacy concerns in our daily lives.

In Brief

AI models can memorize specific data and images from their training sets, which creates privacy and security risks.

This could lead to new legal disputes.

Groundbreaking technologies often capture our imagination, but they also attract legal challenges. A case in point is the ongoing lawsuit against the developers of the Stable Diffusion model. Stable Diffusion is a fully open-source AI model that generates images from text descriptions. It can create strikingly realistic images or imitate the styles of well-known artists.

Many artists oppose this trend. For instance, members of ArtStation, a popular community for creators, staged a “No AI” protest to voice their concerns. Artists argue that copyrighted works should not be used in AI training datasets without proper permission.

Privacy is also at stake. Suppose someone took a candid photo of you at a gathering and shared it online: that image could then end up in an AI model's training data. Such models can memorize certain images and data, which raises serious ethical and security concerns, because someone with malicious intent could use the AI to recreate those images and exploit them in harmful ways. This is particularly unsettling given that the training dataset contains over 5 billion images, many of which may include private content that was publicly accessible at some point. Private images have been misused before, as in a 2013 case in which sensitive photographs were used inappropriately on a medical clinic's website.

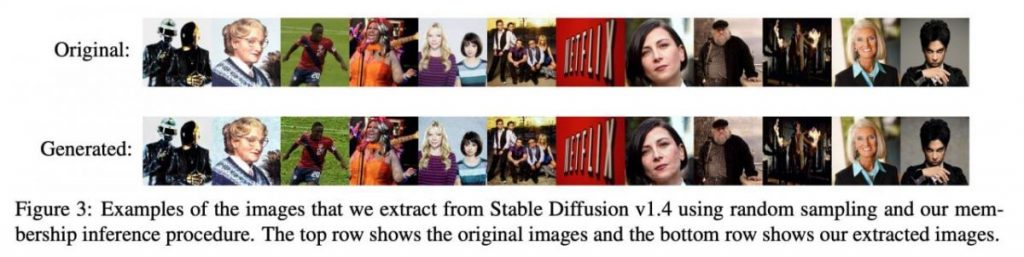

A recent analysis compared original training images with images generated by Stable Diffusion and found that, despite some distortion, many of the generated images closely resembled the training images. Such findings matter in a legal context, since jurors will need to consider whether these models effectively 'remember' and reproduce copyrighted material without authorization. That said, it is premature to claim that these models merely replicate their training data without any creativity. Only 100 memorized images were reproduced from a model trained on 5 billion images, even though the researchers focused on the most common prompts; the vast majority of outputs were not duplicates.


Disclaimer

In line with the Trust Project guidelines, please be aware that the information on this page is not intended to serve as legal, financial, or investment advice. Only invest funds that you are willing to risk, and seek professional advice if you are uncertain about financial decisions. For more detailed information, review the terms of service and support pages provided by the platform. MetaversePost strives to maintain accuracy in reporting, but market conditions can change suddenly.